- 11 Dec 2024


Snowflake Credit Management


This article applies to these versions of LandingLens:

| LandingLens | LandingLens on Snowflake |
|---|---|
| ✖ | ✓ |

Snowflake credits are used to pay for the consumption of resources on Snowflake. Like other apps and functions in Snowflake, running LandingLens on Snowflake consumes credits.

The LandingAI team has streamlined functionality to minimize credit consumption and is continuing to optimize LandingLens on Snowflake to use credits more efficiently.

To manage credit usage, use the LandingLens Configs section in Snowsight to update the CPU and GPU resources used by LandingLens. You can also suspend the LandingLens app if needed.
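To check whether changes to these settings actually reduce consumption, you can query Snowflake's account usage views. The following is a minimal sketch. It assumes that your role can read the SNOWFLAKE.ACCOUNT_USAGE schema and that the SNOWPARK_CONTAINER_SERVICES_HISTORY view, with the column names used here, is available in your account. Verify both against the Snowflake documentation. The `%LANDINGLENS%` name filter is also an assumption, based on the compute resource names shown on the app's Compute tab.

```sql
-- Daily credits used by compute pools whose names contain LANDINGLENS
-- over the last 30 days. The name filter is an assumption; adjust it if
-- your LandingLens compute pools are named differently.
SELECT
    DATE_TRUNC('day', start_time) AS usage_day,
    compute_pool_name,
    SUM(credits_used)             AS credits
FROM snowflake.account_usage.snowpark_container_services_history
WHERE start_time >= DATEADD('day', -30, CURRENT_TIMESTAMP())
  AND compute_pool_name ILIKE '%LANDINGLENS%'
GROUP BY usage_day, compute_pool_name
ORDER BY usage_day, compute_pool_name;
```

Account Usage data is not real-time, so recent activity may take some time to appear. Compare a few days before and after a configuration change rather than relying on the last few hours.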

Manage the CPU and GPU Resources Used by the App

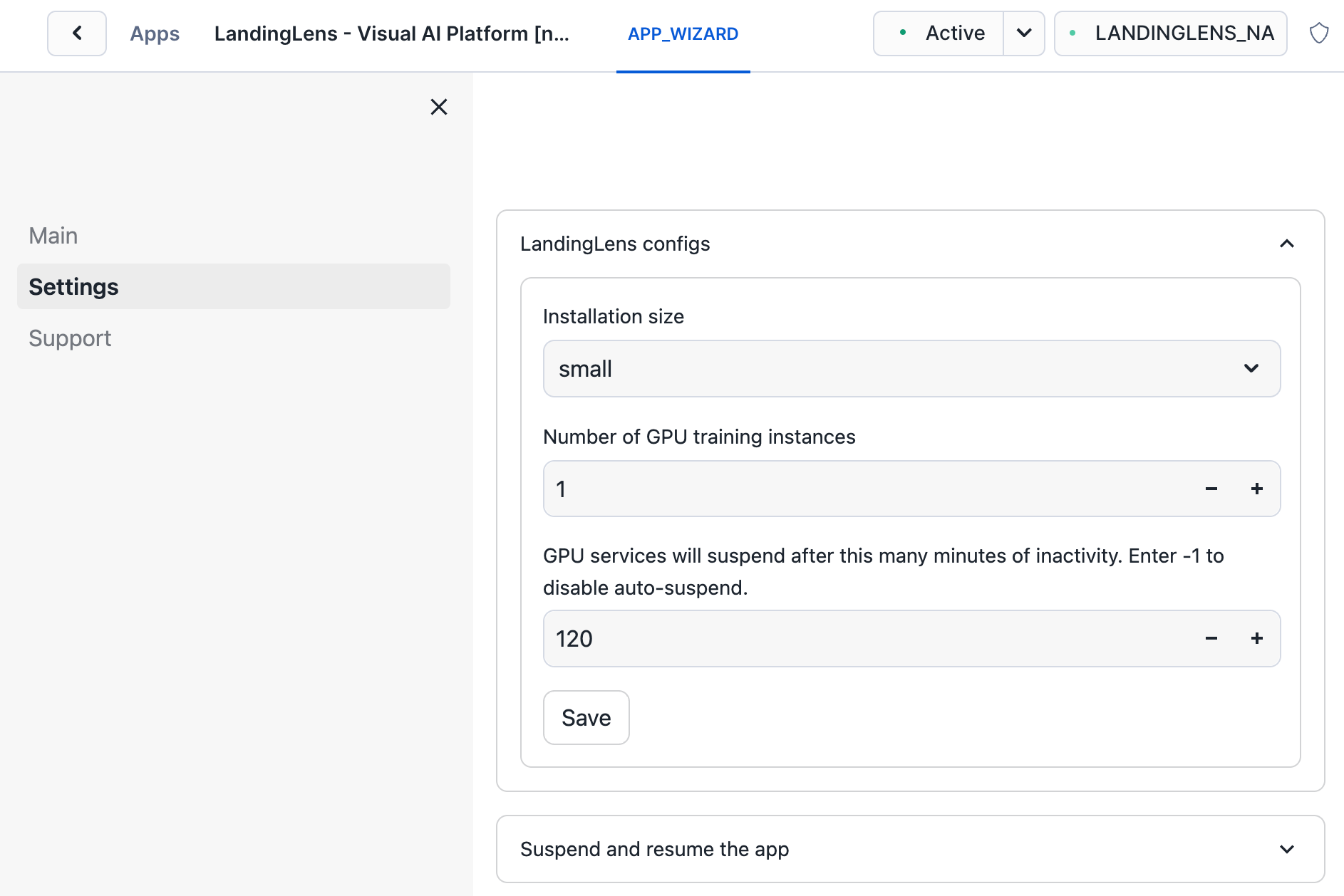

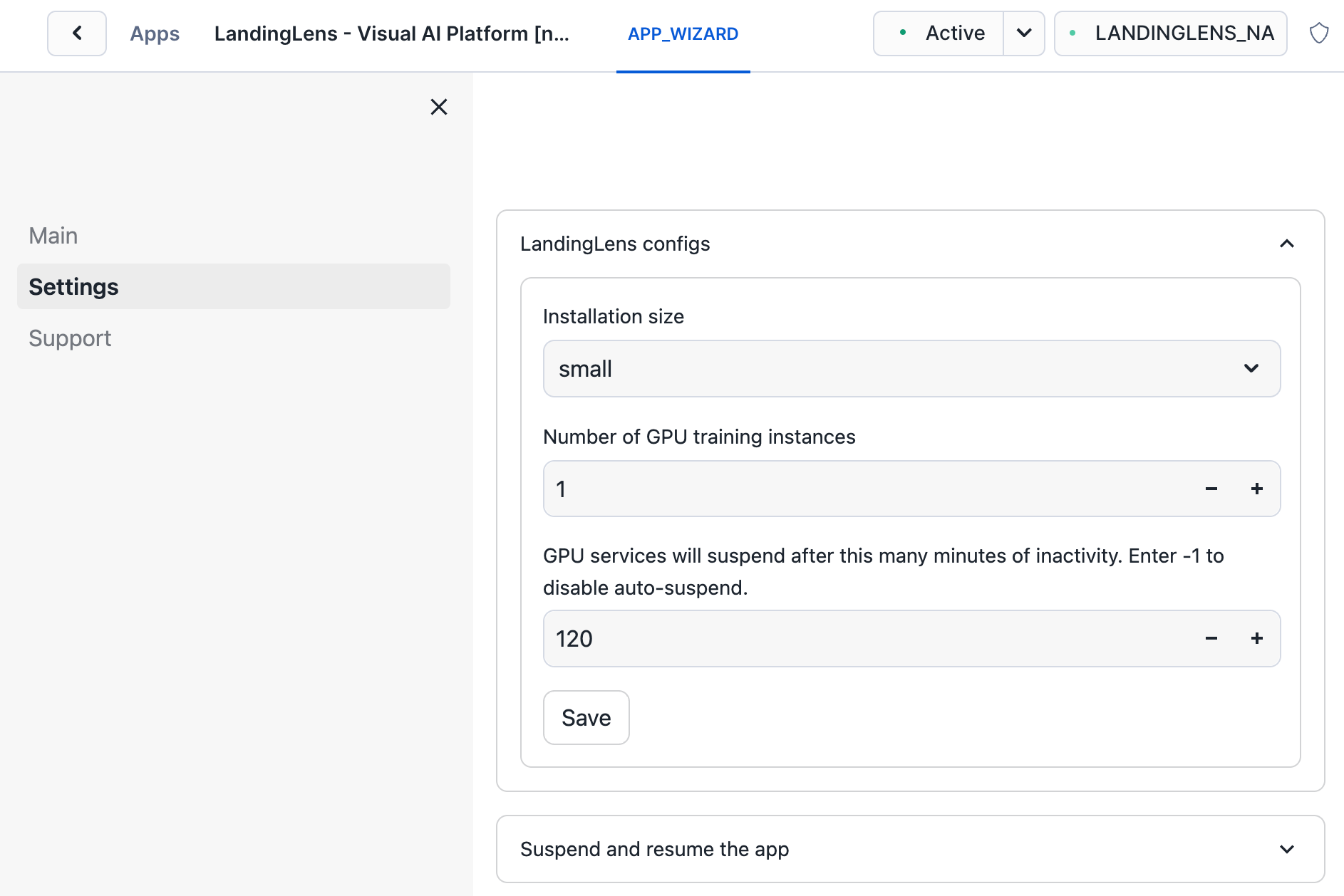

Access the CPU and GPU Settings (LandingLens Configs)

- Open Snowsight.

- Go to Data Products > Apps > LandingLens Visual AI Platform.

- Click Launch App.

- Click APP_WIZARD.

- Click Settings.

- Expand the LandingLens Configs section.

- If needed, update the Installation Size, Number of GPUs, and Auto-Suspend GPU settings.

- Click Save.

Manage the CPU and GPU Resources Used by the App

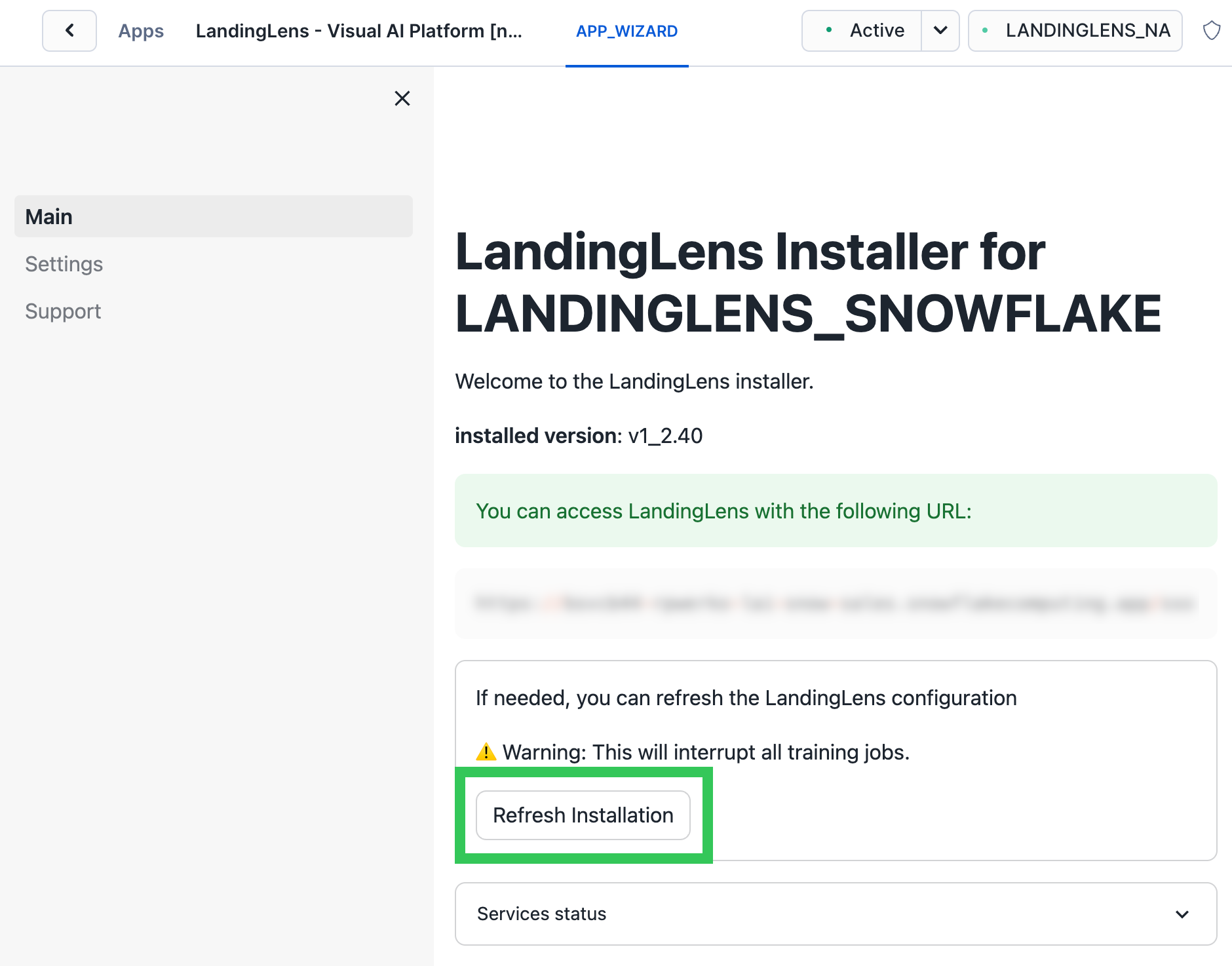

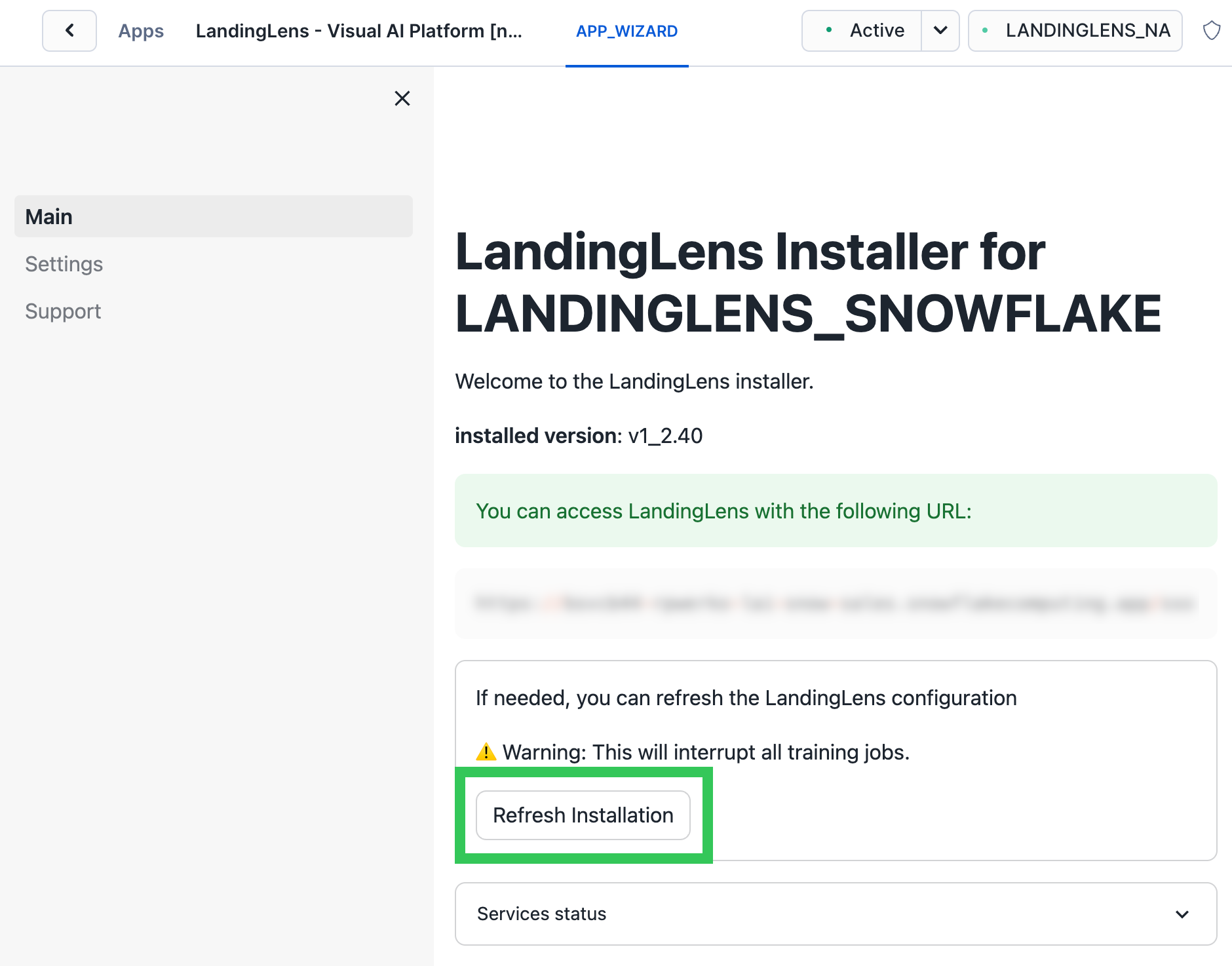

Manage the CPU and GPU Resources Used by the App - Click Main.

- Click Refresh Installation. The installation must be refreshed for the changes to go into effect.

Refresh the LandingLens Installation

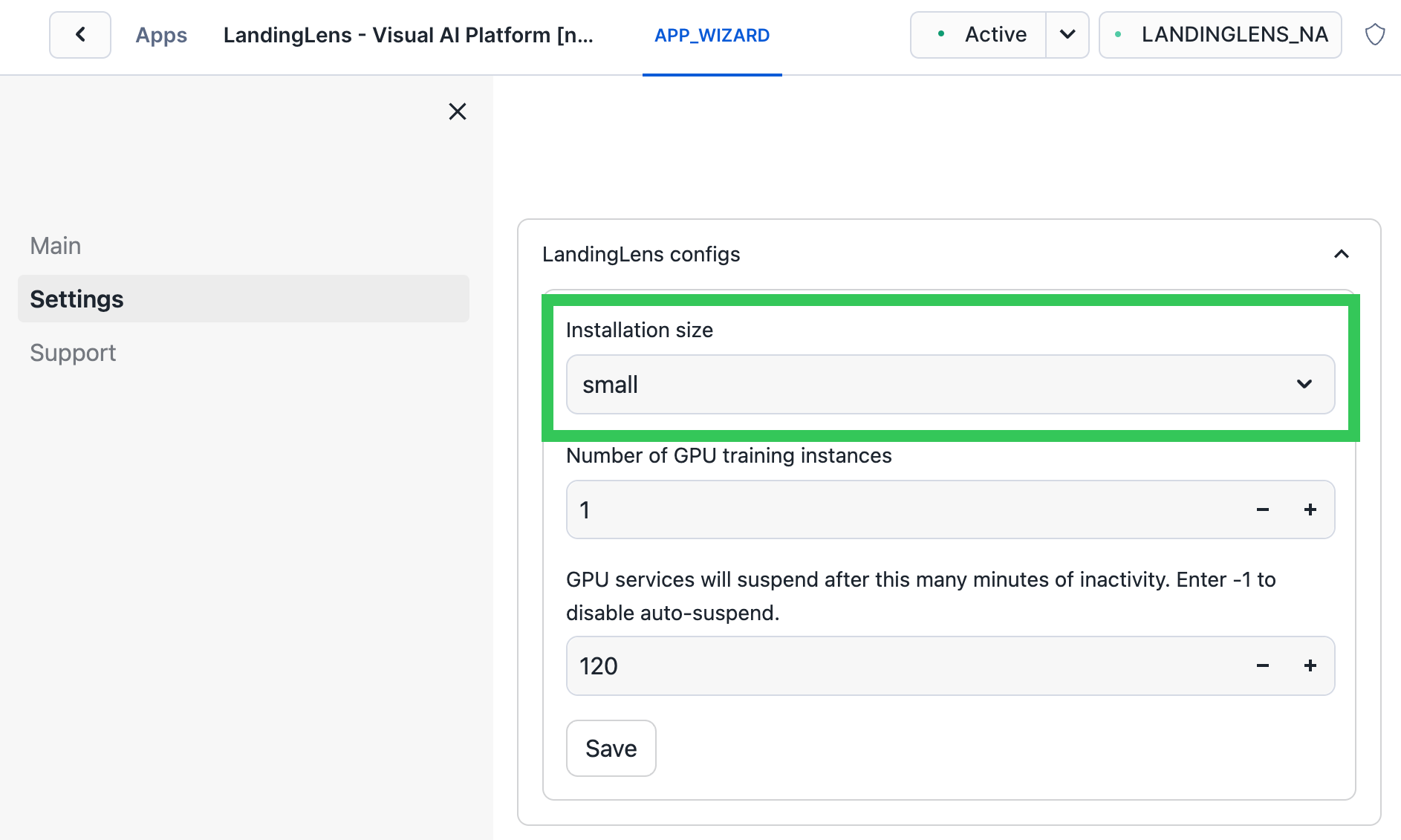

Set the Installation Size (CPU Resources)

In the LandingLens Configs, you can configure the Installation Size of the LandingLens app, which is the number of CPU machines and service replicas used by the app.

All sizes are relative to the smallest size, POC (proof of concept).

Typically, a smaller size consumes fewer credits. We recommend increasing the size if the app runs slowly or if many users are using the app, running training, or running inference at the same time. If you run LandingLens in production, consider using the Medium or Large size.

If you change this setting, you must refresh the installation for the changes to go into effect (click Main > Refresh Installation).

Installation Size of the LandingLens App

| Installation Size | Description |
|---|---|
| POC | The "proof of concept" setting, designed for users who want to learn how to use LandingLens and perform small-scale tests. |
| Small | 2x the size of POC. |
| Medium | 3x the size of POC. This is the default size when LandingLens is installed. |
| Large | 4x the size of POC. |
| Xlarge | 5x the size of POC. |

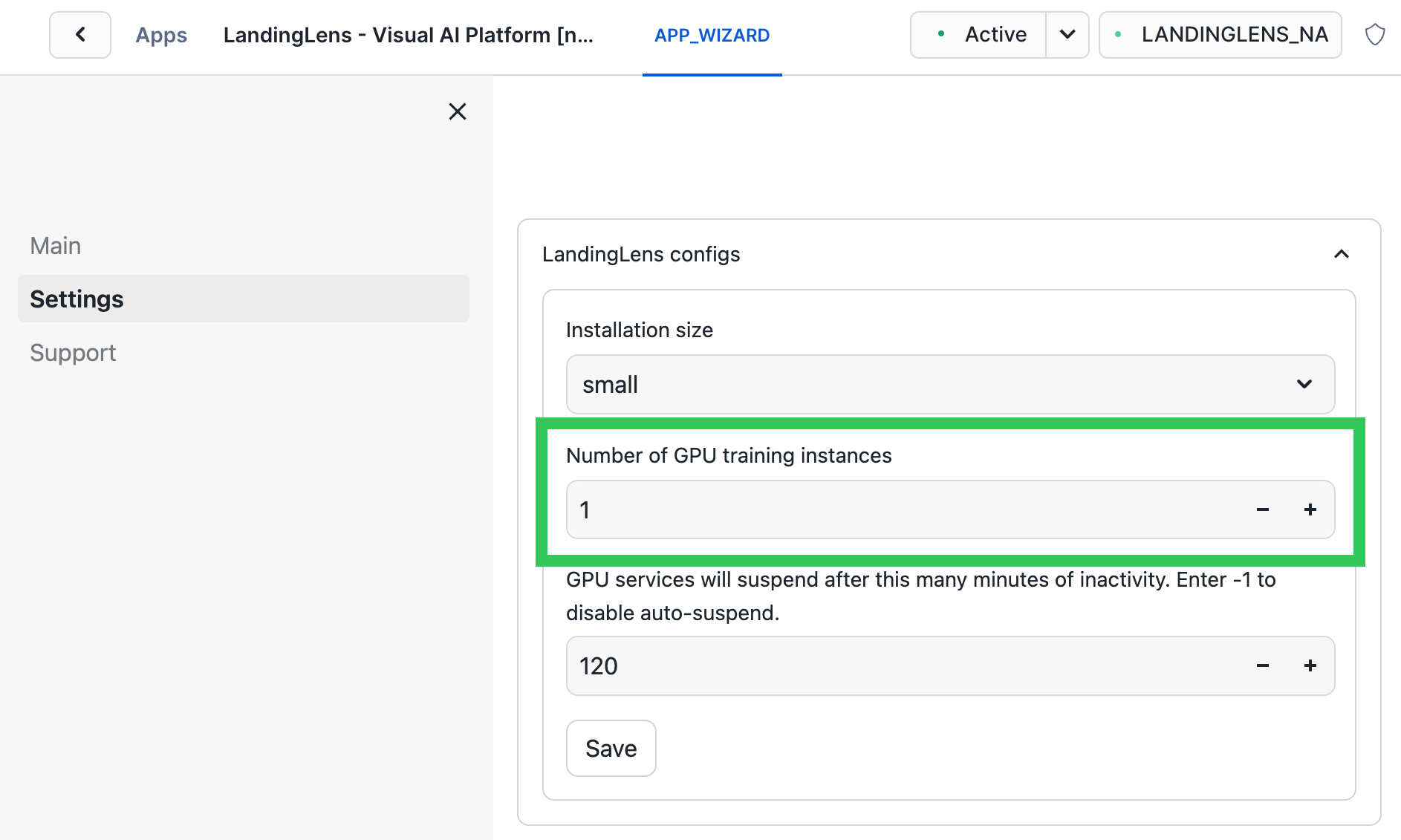

Set the Number of GPU Training Instances

LandingLens uses GPUs during the model training process. By default, one GPU node is allocated for model training, but you can increase the number of GPU nodes in the LandingLens Configs. The maximum number of GPU nodes is five. Typically, increasing the number of GPU nodes increases the rate of credit consumption.

To change the number of allocated GPU nodes, update the Number of GPU training instances field. If more than one GPU node is allocated, Auto-Suspend GPU cannot be enabled.

If you change this setting, you must refresh the installation for the changes to go into effect (click Main > Refresh Installation).

Change the Number of Available GPU Nodes

Auto-Suspend GPU

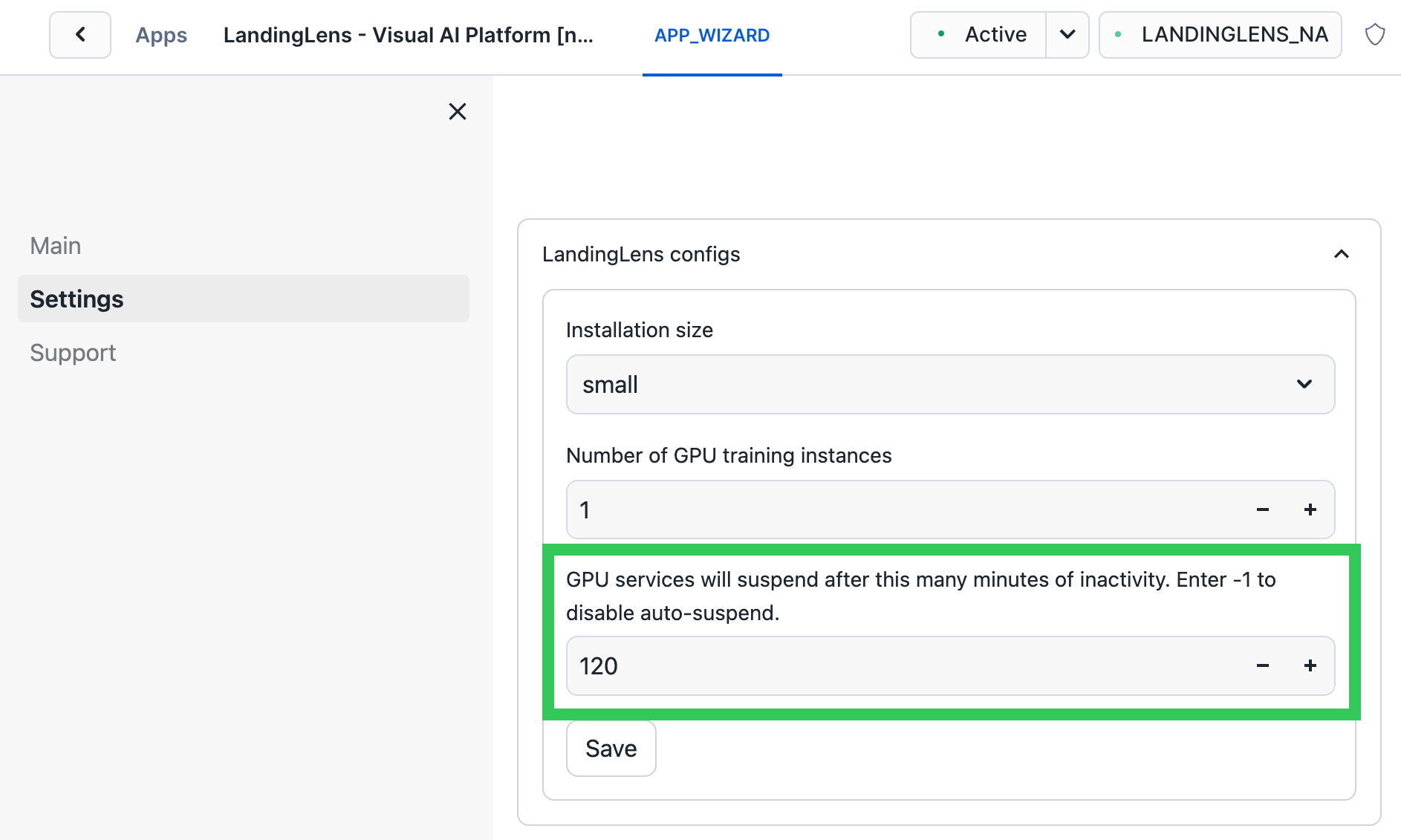

You can configure the app's Auto-Suspend GPU setting to automatically suspend a GPU node after the period of inactivity that you specify. Typically, suspending a GPU node decreases credit consumption when you're not using the app.

Consider the following before configuring Auto-Suspend GPU:

- Auto-Suspend GPU is available only when one GPU node is allocated in the Number of GPU training instances setting.

- Using Auto-Suspend GPU does not suspend any warehouses or compute pools.

- If the GPU node is suspended when you train a model, the GPU node takes about 6 minutes to reactivate.

- Turning on Auto-Suspend GPU when a GPU node is in use will not interrupt the service using it. GPU nodes will only auto-suspend after the GPU services are idle for the period of time you specify.

Turn On and Configure Auto-Suspend GPU

In the GPU Services Will Suspend field, enter the number of minutes of inactivity after which GPU services will automatically suspend. For example, if you enter 5, GPU nodes will suspend after 5 minutes of inactivity.

To turn off Auto-Suspend GPU, enter -1. (This is the default setting.)

Auto-Suspend GPU

Suspend LandingLens

You can run a SQL command to suspend the LandingLens app. Suspending the app suspends the underlying compute pools.

When the app is suspended, you can then run another SQL command to resume it. Resuming the app takes about 15 minutes.

Make sure that there are no active model training jobs or file syncs when you suspend the app. Otherwise, those processes will fail.

You can use Snowflake tasks to schedule the suspend and resume commands to run at specific times.
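For example, you could suspend the app every weekday evening and resume it every weekday morning. The following is a minimal sketch that wraps the suspend_services and resume_services procedures from this section in two scheduled tasks. The database, schema, task, and warehouse names and the schedules are hypothetical placeholders. The sketch also assumes that the role owning the tasks has the LLENS_PUBLIC application role and the EXECUTE TASK privilege. Because resuming takes about 15 minutes, schedule the resume task some time before users need the app.

```sql
-- Hypothetical names: my_db.my_schema, my_wh. Replace APP_NAME with the
-- name of your LandingLens instance.
CREATE OR REPLACE TASK my_db.my_schema.suspend_landinglens
  WAREHOUSE = my_wh
  -- 20:00 Monday to Friday, Pacific time
  SCHEDULE = 'USING CRON 0 20 * * 1-5 America/Los_Angeles'
AS
  CALL APP_NAME.core.suspend_services('APP_NAME');

CREATE OR REPLACE TASK my_db.my_schema.resume_landinglens
  WAREHOUSE = my_wh
  -- 07:00 Monday to Friday, Pacific time (app is ready about 15 minutes later)
  SCHEDULE = 'USING CRON 0 7 * * 1-5 America/Los_Angeles'
AS
  CALL APP_NAME.core.resume_services('APP_NAME');

-- New tasks are created in a suspended state; resume them to start the schedules.
ALTER TASK my_db.my_schema.suspend_landinglens RESUME;
ALTER TASK my_db.my_schema.resume_landinglens RESUME;
```

Using two separate tasks keeps the two schedules independent, so you can pause one without affecting the other (for example, with `ALTER TASK ... SUSPEND`). The cautions above still apply: a scheduled suspend will cause any training job or file sync still running at that time to fail.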

Prerequisites

- You must be granted the LLENS_PUBLIC application role to run the suspend and resume commands.

- These commands require the name of your LandingLens instance (APP_NAME). To locate the name, go to Name of LandingLens Instance.
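Both prerequisites can also be handled in SQL. The following is a minimal sketch. `SHOW APPLICATIONS` lists the installed apps, including your LandingLens instance, so you can confirm APP_NAME. The `GRANT` statement gives the LLENS_PUBLIC application role to an account role. `my_role` is a hypothetical role name. The sketch assumes that you run the grant as a role with the authority to grant the app's roles, such as the role that owns the application.

```sql
-- List installed applications; the "name" column is the value to use for APP_NAME.
SHOW APPLICATIONS;

-- Grant the LLENS_PUBLIC application role to an account role.
-- Replace APP_NAME with your instance name and my_role with your role (hypothetical).
GRANT APPLICATION ROLE APP_NAME.LLENS_PUBLIC TO ROLE my_role;
```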

Suspend the App

Run the following SQL command to suspend the app, where APP_NAME is the name of your LandingLens instance. To locate the name, go to Name of LandingLens Instance.

```sql
CALL APP_NAME.core.suspend_services('APP_NAME');
```

Resume the App

Run the following SQL command to resume the app, where APP_NAME is the name of your LandingLens instance. To locate the name, go to Name of LandingLens Instance.

```sql
CALL APP_NAME.core.resume_services('APP_NAME');
```

Check Compute Resources

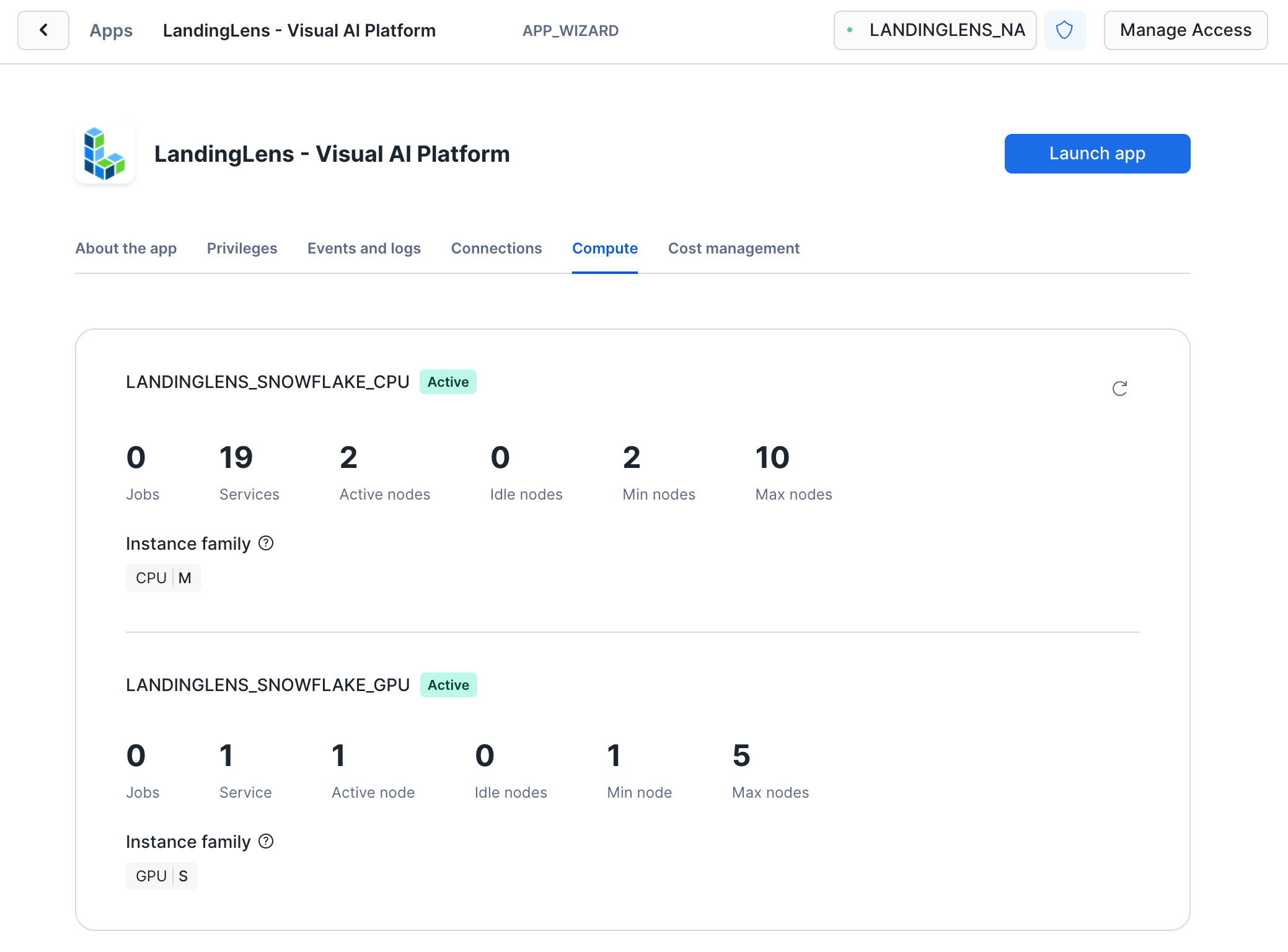

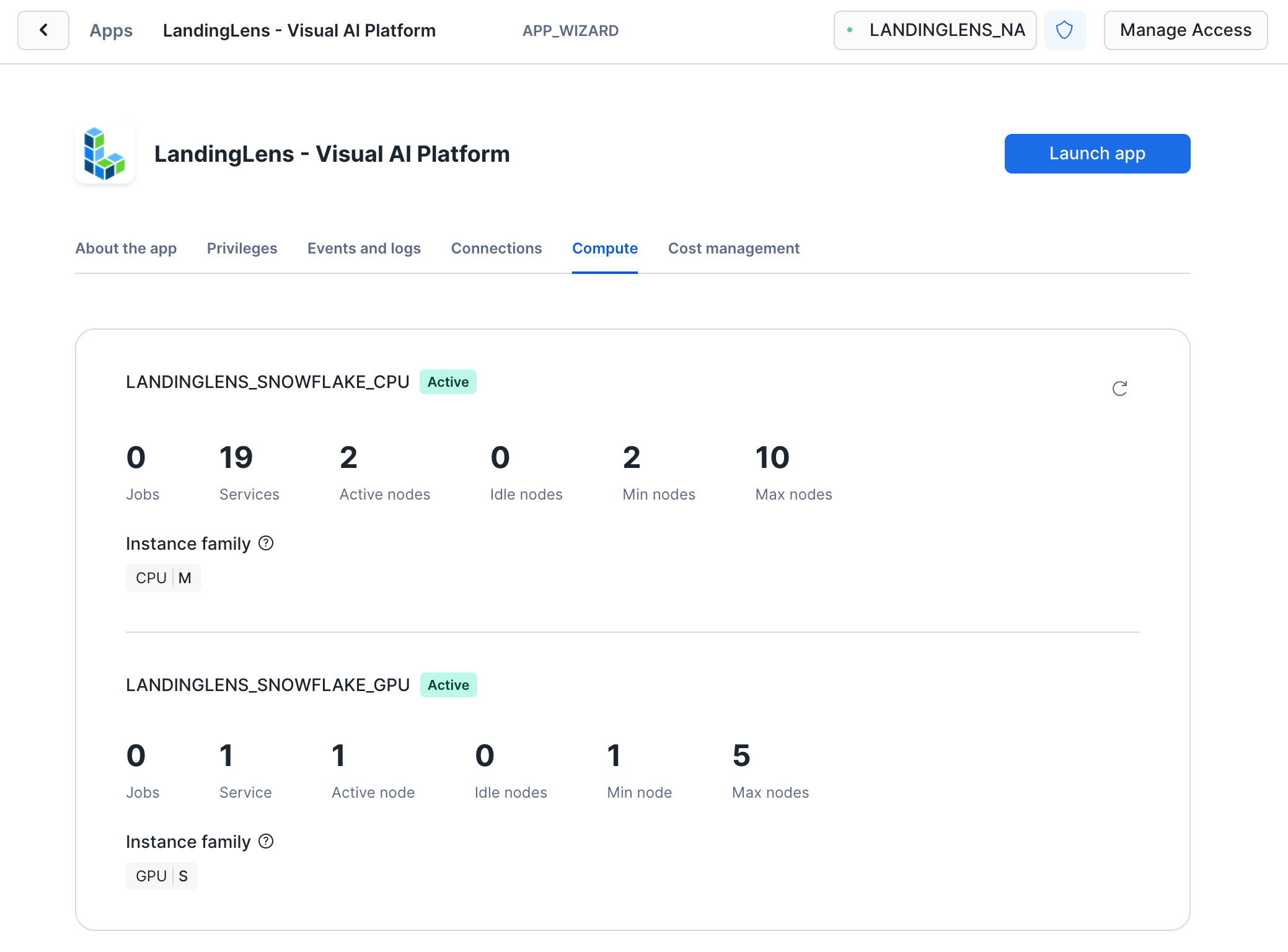

To check the CPU and GPU resources used by the LandingLens app:

- Open Snowsight.

- Go to Data Products > Apps > LandingLens Visual AI Platform.

- Click Compute. The CPU and GPU resources (LANDINGLENS_SNOWFLAKE_CPU and LANDINGLENS_SNOWFLAKE_GPU) are displayed.
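You can also inspect these resources from a worksheet. The following is a minimal sketch. It assumes that the resources on the Compute tab are Snowflake compute pools with the names shown there, and that your role has privileges to see them. Otherwise, the commands may return no rows or an error. The output's state column (for example ACTIVE, IDLE, or SUSPENDED) is a quick way to confirm that a suspend or resume command has taken effect.

```sql
-- List compute pools whose names contain LANDINGLENS, with their state and node counts.
SHOW COMPUTE POOLS LIKE '%LANDINGLENS%';

-- Show details for one pool (assumes the pool name matches the label on the Compute tab).
DESCRIBE COMPUTE POOL LANDINGLENS_SNOWFLAKE_GPU;
```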

CPU and GPU Resources

CPU and GPU Resources