- 21 May 2025

LandingLens Release Notes

This article applies to these versions of LandingLens:

| LandingLens | LandingLens on Snowflake |
|---|---|
| ✓ | ✖ |

May 5, 2025: Embed-Optimized Models

LandingLens now supports building and deploying embed-optimized models, which are lightweight, efficient computer vision models designed to run directly on embedded devices.

To create an embed-optimized model, choose one of the following model architectures when running Custom Training:

- For Object Detection: ODEmbedded-[23M]

- For Classification: ConvNextEmbedded-[16M]

Using these architecture types generates a TensorFlow Lite (TFLite) model ready for embedded deployment. After downloading an embed-optimized model from LandingLens, you can interact with it using TFLite libraries and APIs.
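As an illustration of "interacting with it using TFLite libraries and APIs", the Python sketch below runs a single inference with the TensorFlow Lite interpreter. It assumes the `tflite_runtime` package (or full TensorFlow) and NumPy are installed, and that the caller has already resized and normalized the image to the model's expected input shape. The layout of the output tensors is not described here, so the function returns them raw.

```python
def run_tflite_model(model_path, input_array):
    """Run one inference with a TFLite model and return its raw output tensors.

    input_array must be a NumPy array already shaped to the model's input
    (for example, with a leading batch dimension of 1).
    """
    try:
        # Lightweight interpreter package commonly used on embedded devices.
        from tflite_runtime.interpreter import Interpreter
    except ImportError:
        # Fall back to the interpreter bundled with full TensorFlow.
        import tensorflow as tf
        Interpreter = tf.lite.Interpreter

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()

    input_details = interpreter.get_input_details()[0]
    # Cast to the dtype the model declares. If the model is quantized,
    # values may also need scaling; see input_details["quantization"].
    interpreter.set_tensor(input_details["index"], input_array.astype(input_details["dtype"]))
    interpreter.invoke()

    # Return every output tensor; decoding them depends on the model type.
    return [interpreter.get_tensor(d["index"]) for d in interpreter.get_output_details()]
```

For example, `run_tflite_model("model.tflite", image_batch)`, where `model.tflite` is a hypothetical file name and `image_batch` is a NumPy array matching the shape reported by `get_input_details()`.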

For detailed information and a quickstart guide, go to Embed-Optimized Models.

April 7, 2025: Anomaly Detection

We are excited to announce a new project type in LandingLens—Anomaly Detection!

The Anomaly Detection project type is used to identify deviations from the norm, especially when you have few images of "abnormal" cases. These projects have two built-in classes: Normal and Abnormal, which you assign to images.

Anomaly Detection models train on Normal images to learn their patterns. The model then flags any deviations as potential anomalies.
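The algorithm LandingLens uses is not described here, so the Python sketch below is only a generic illustration of the idea of learning from Normal images and flagging deviations. It assumes each image has already been reduced to a numeric feature vector (a hypothetical preprocessing step), fits the centroid of the Normal vectors, and flags any image whose distance from that centroid exceeds the largest distance seen in training multiplied by a margin.

```python
import math
from typing import List, Sequence, Tuple


def fit_normal_model(
    normal_features: List[Sequence[float]], margin: float = 1.5
) -> Tuple[List[float], float]:
    """Learn a centroid and a distance threshold from a non-empty list of Normal feature vectors."""
    dims = len(normal_features[0])
    centroid = [sum(vec[i] for vec in normal_features) / len(normal_features) for i in range(dims)]
    # The farthest Normal example defines how much variation still counts as normal.
    max_dist = max(math.dist(vec, centroid) for vec in normal_features)
    return centroid, max_dist * margin


def is_abnormal(features: Sequence[float], centroid: Sequence[float], threshold: float) -> bool:
    """Flag a sample whose distance from the Normal centroid exceeds the threshold."""
    return math.dist(features, centroid) > threshold
```

The margin trades missed anomalies against false alarms: a larger value flags fewer images as Abnormal.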

To learn more, go to Anomaly Detection.

Anomaly Detection Project

February 11, 2025: Updated Confusion Matrix

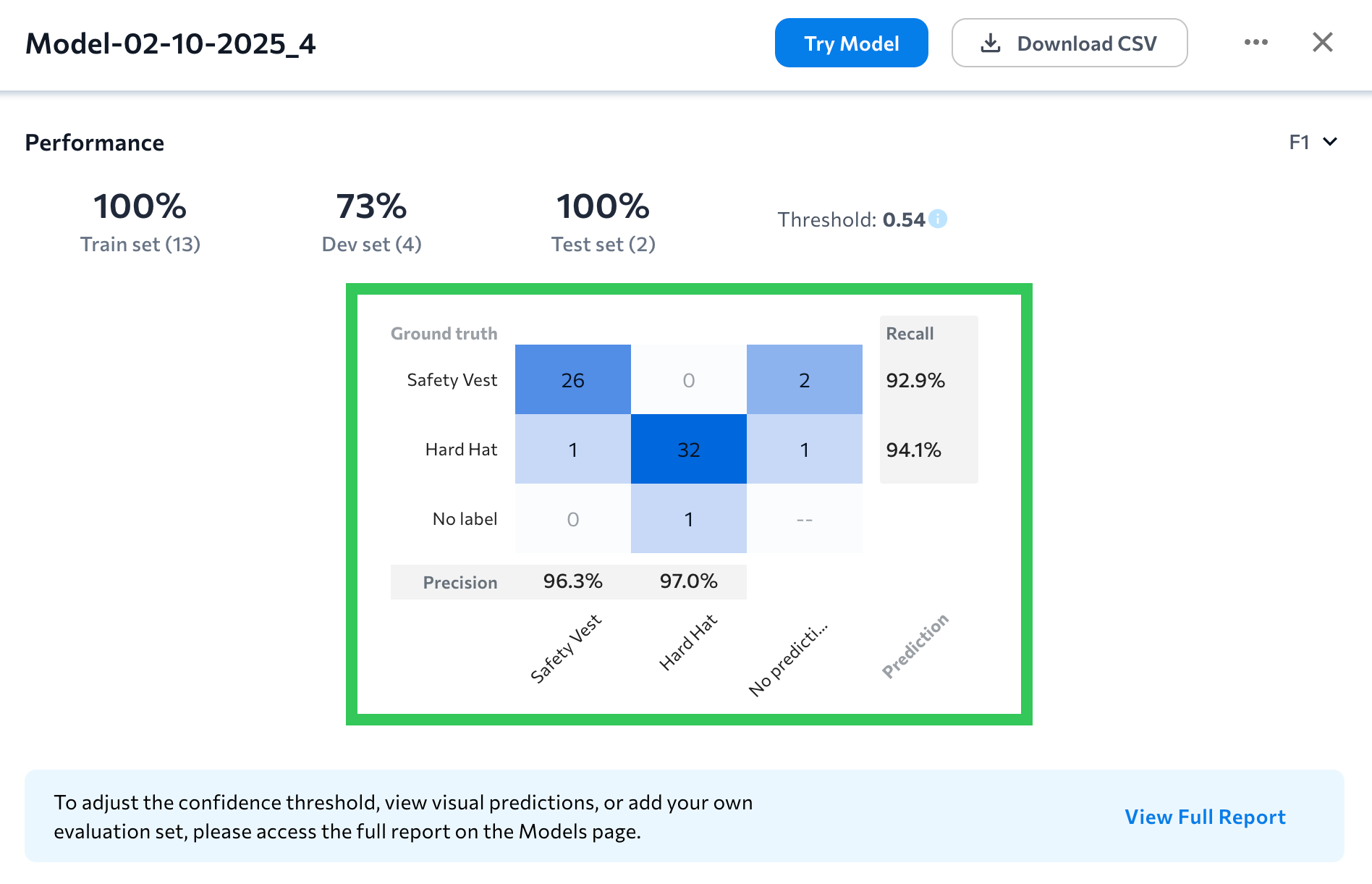

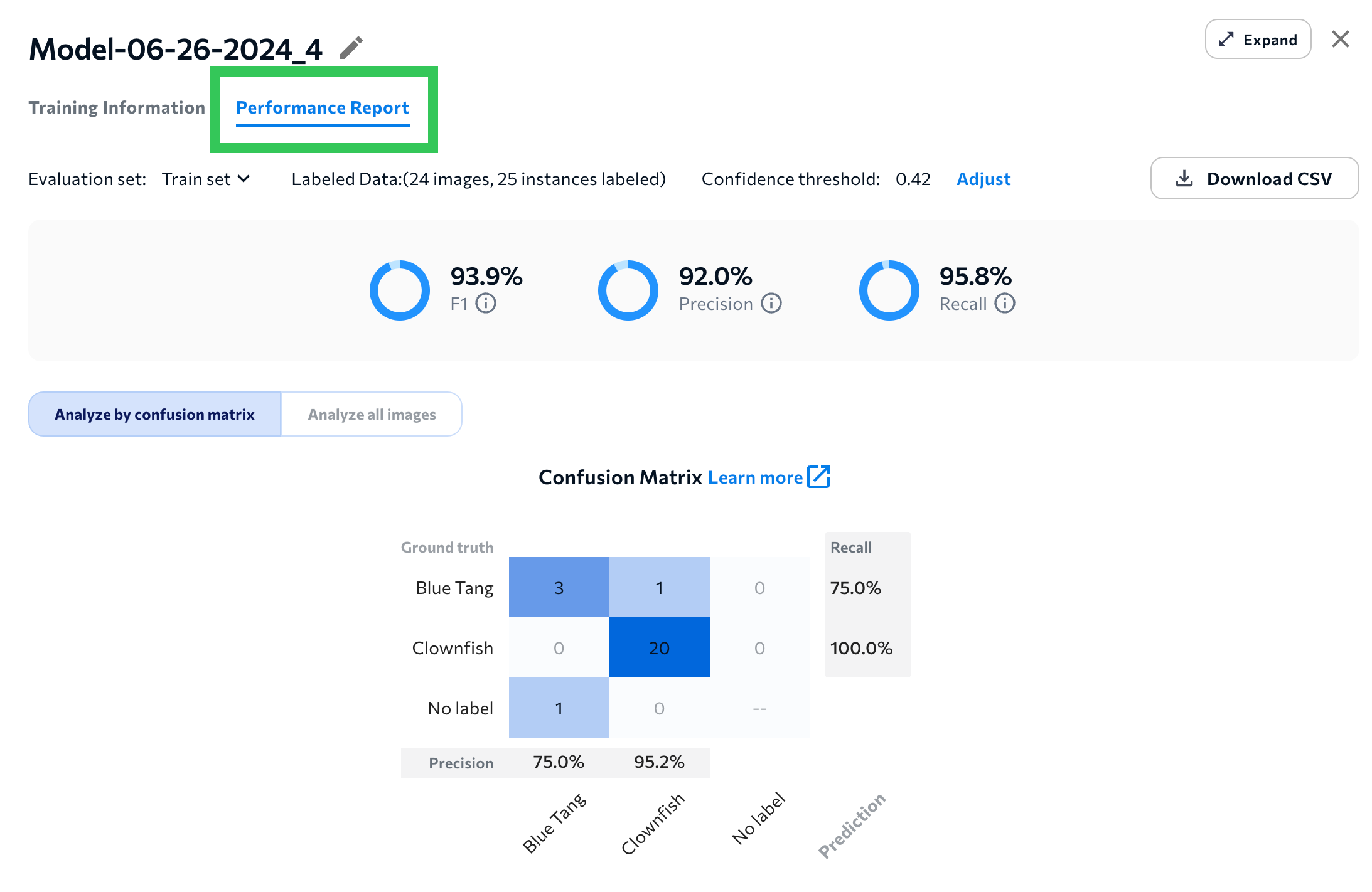

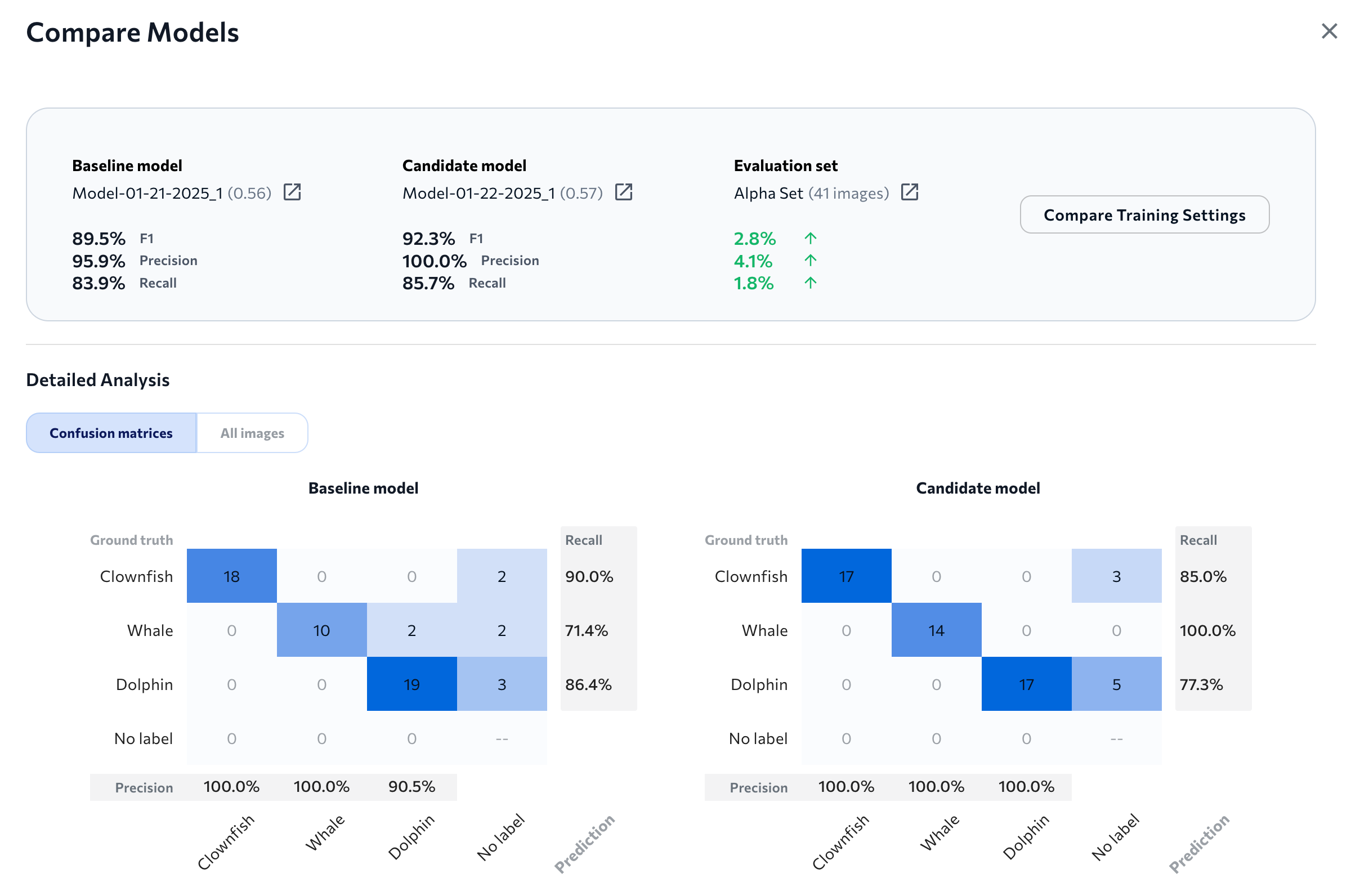

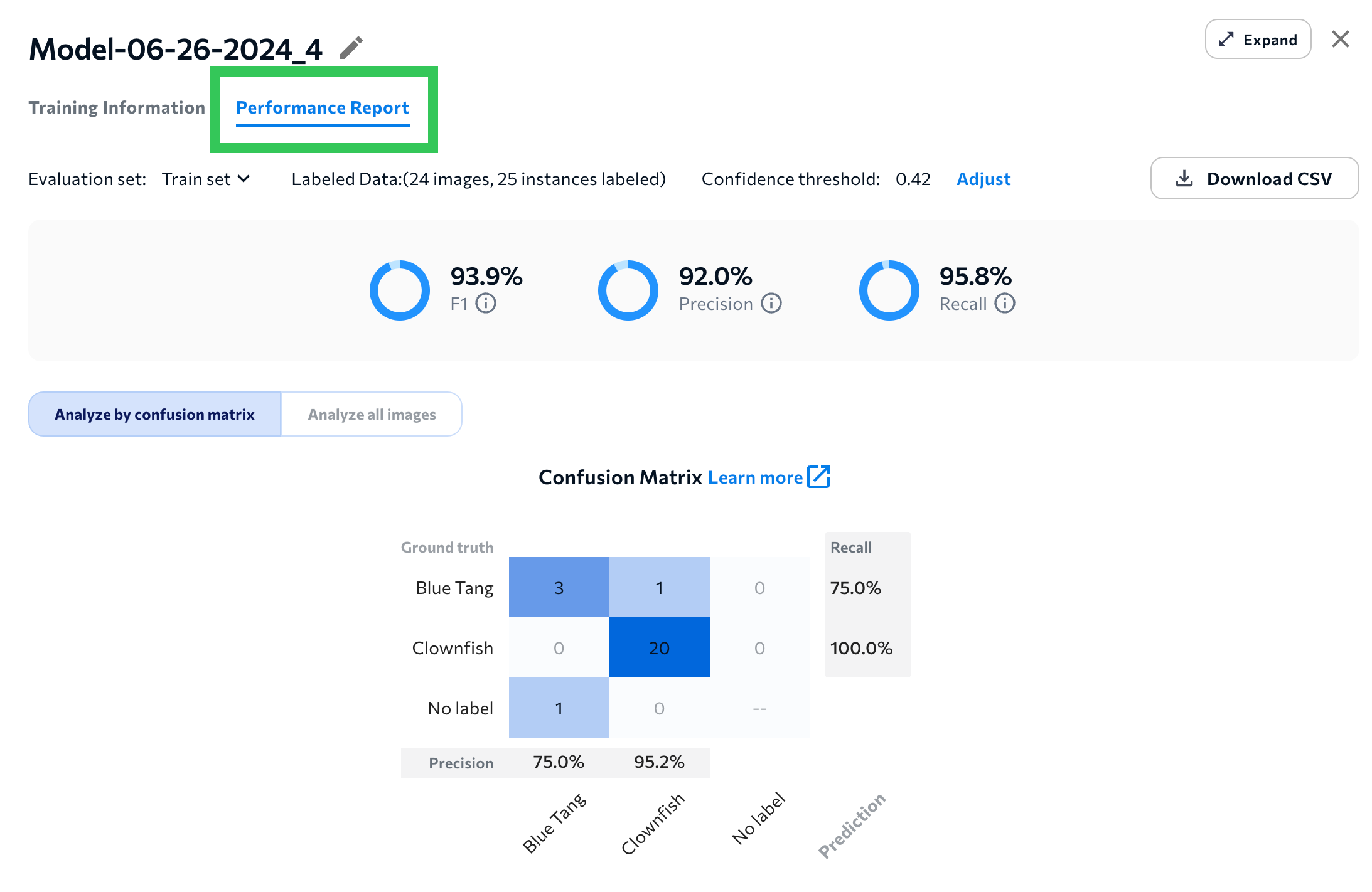

The newest version of LandingLens features a redesigned confusion matrix! The new confusion matrix is a table that shows ground truth labels versus model predictions. This new table format empowers you to quickly see what classes the model is predicting correctly, and which ones it might have trouble identifying.

Each cell shows the number of instances that correspond to a particular pair of ground truth class and predicted class. For example, in the image below, the model correctly predicted the class "Hard Hat" 32 times and misclassified it 2 times.

Confusion Matrix in an Object Detection Project
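To make the table concrete, the Python sketch below builds a confusion matrix by counting (ground truth, predicted) class pairs. The counts mirror the "Hard Hat" example; the second class name, "Vest", is a hypothetical placeholder, and the sketch does not model how LandingLens handles unmatched objects in Object Detection.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def confusion_matrix(pairs: Iterable[Tuple[str, str]]) -> Dict[Tuple[str, str], int]:
    """Count how often each (ground truth, predicted) class pair occurs."""
    return dict(Counter(pairs))


def row_summary(matrix: Dict[Tuple[str, str], int], gt_class: str) -> Tuple[int, int]:
    """Return (correct, misclassified) counts for one ground truth class."""
    correct = matrix.get((gt_class, gt_class), 0)
    misclassified = sum(
        count for (gt, pred), count in matrix.items() if gt == gt_class and pred != gt_class
    )
    return correct, misclassified


# 32 correct "Hard Hat" predictions and 2 predicted as another class.
pairs = [("Hard Hat", "Hard Hat")] * 32 + [("Hard Hat", "Vest")] * 2
matrix = confusion_matrix(pairs)
print(row_summary(matrix, "Hard Hat"))  # (32, 2)
```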

Where can I see these changes?

All areas that show model performance now include the updated confusion matrix, including:

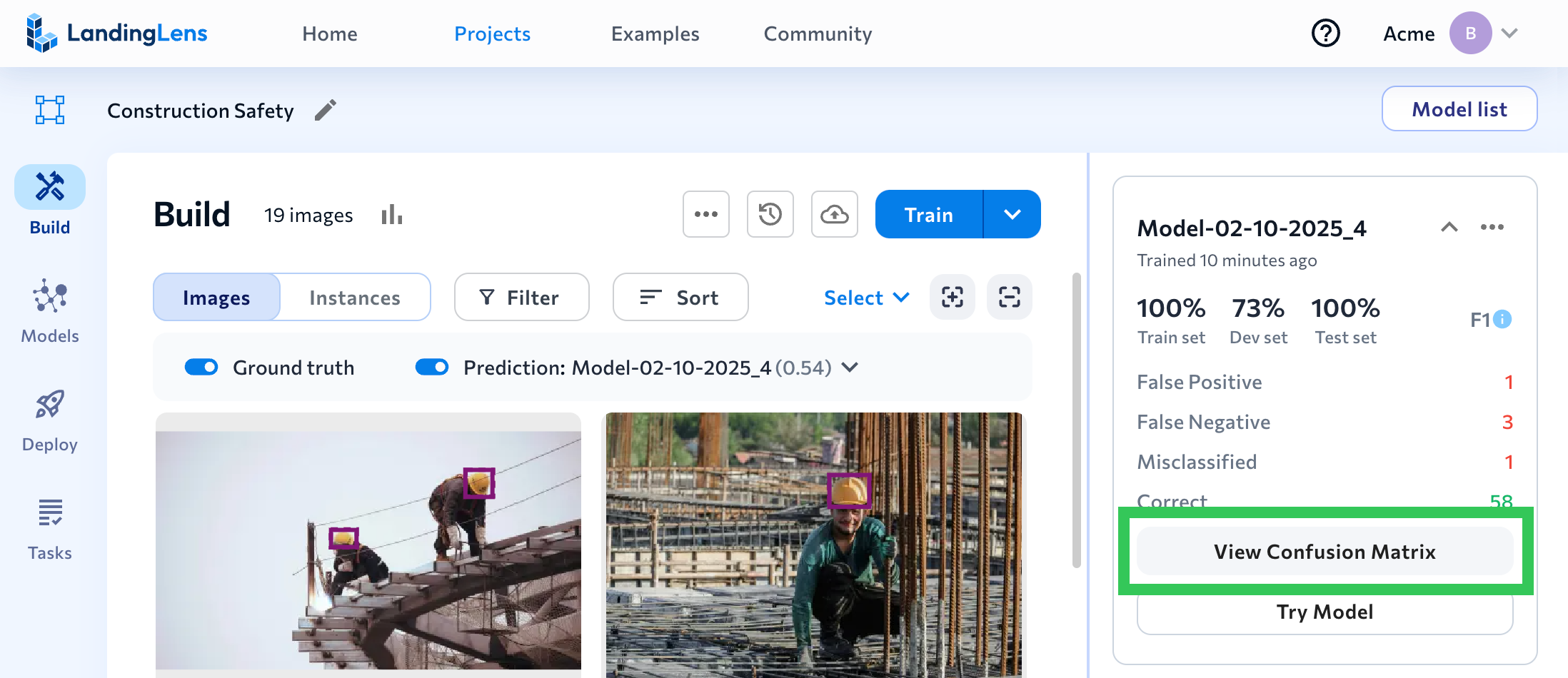

- Build tab: Click View Confusion Matrix on a model tile to see the confusion matrix for the dataset that the model was trained on.

- The Performance Report shows a confusion matrix for the selected evaluation set.

- The Compare Models tool displays side-by-side confusion matrices so you can see how each model performed on the selected evaluation set.

Learn How to Analyze Models

Watch the following video to learn how to use the updated confusion matrix and other tools to analyze model performance.

December 2, 2024: End Model Training, TIFF Support, and More

We're excited to release these updates to LandingLens:

- Improved workflow for ending model training early

- TIF/TIFF upload support

- Dataset snapshot CSVs now include tags

Changes to Ending Model Training

We've made it easier to end model training early. Previously, model training could only be stopped during the Training & learning step, and LandingLens would evaluate the images in your dataset with the model it had generated up to that point.

Now, you can end model training at any point. The model tiles for these training jobs display the text Manually Ended so that there is a record that model training started, but wasn't completed. To prevent users from accidentally using incomplete models, LandingLens no longer generates models when training is manually ended.

To learn more about training models, go to Train Models.

End Training Now

Model Training Was Ended Manually

Upload TIF/TIFF Files to Projects

TIF/TIFF files can now be uploaded to LandingLens projects. However, LandingLens models cannot run inference on TIF/TIFF files.

To learn more about image support, including supported image files and maximum image size, go to Upload Images.

Dataset Snapshot CSVs Now Have Image Tags

When you download a CSV for a dataset snapshot, the CSV now lists any tags applied to the images.

To learn more about dataset snapshots, go to Dataset Snapshots.

Downloaded CSV with Image Tags

October 31, 2024: Download CSV of Model Performance

We just released a new version of LandingLens with these features:

Download CSV of Model Performance for Select Images

You can now download a CSV of model performance for select images in your Object Detection or Classification dataset. The prediction data in the CSV will be based on the selected model and confidence threshold (if you manually change the threshold, that threshold is used in the CSV).

For detailed instructions on how to download the CSV, go to Model Reports.
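Because the CSV reflects the selected confidence threshold, the same images can produce different prediction data at different thresholds. The Python sketch below illustrates the general idea; the `Prediction` record is hypothetical, and keeping a prediction whose confidence exactly equals the threshold is an assumption, not documented LandingLens behavior.

```python
from typing import List, NamedTuple


class Prediction(NamedTuple):
    # Hypothetical record: one prediction for an image.
    image: str
    label: str
    confidence: float


def apply_threshold(predictions: List[Prediction], threshold: float) -> List[Prediction]:
    """Keep only predictions at or above the confidence threshold (assumed inclusive)."""
    return [p for p in predictions if p.confidence >= threshold]


preds = [
    Prediction("img_001.jpg", "Scratch", 0.91),
    Prediction("img_001.jpg", "Dent", 0.42),
    Prediction("img_002.jpg", "Scratch", 0.55),
]
# Raising the threshold from 0.4 to 0.6 drops the two lower-confidence predictions.
print(len(apply_threshold(preds, 0.4)), len(apply_threshold(preds, 0.6)))  # 3 1
```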

Download CSV

Hide LandingEdge and Docker Deployment Options

July 8, 2024: New Model Analysis Interface & Training APIs

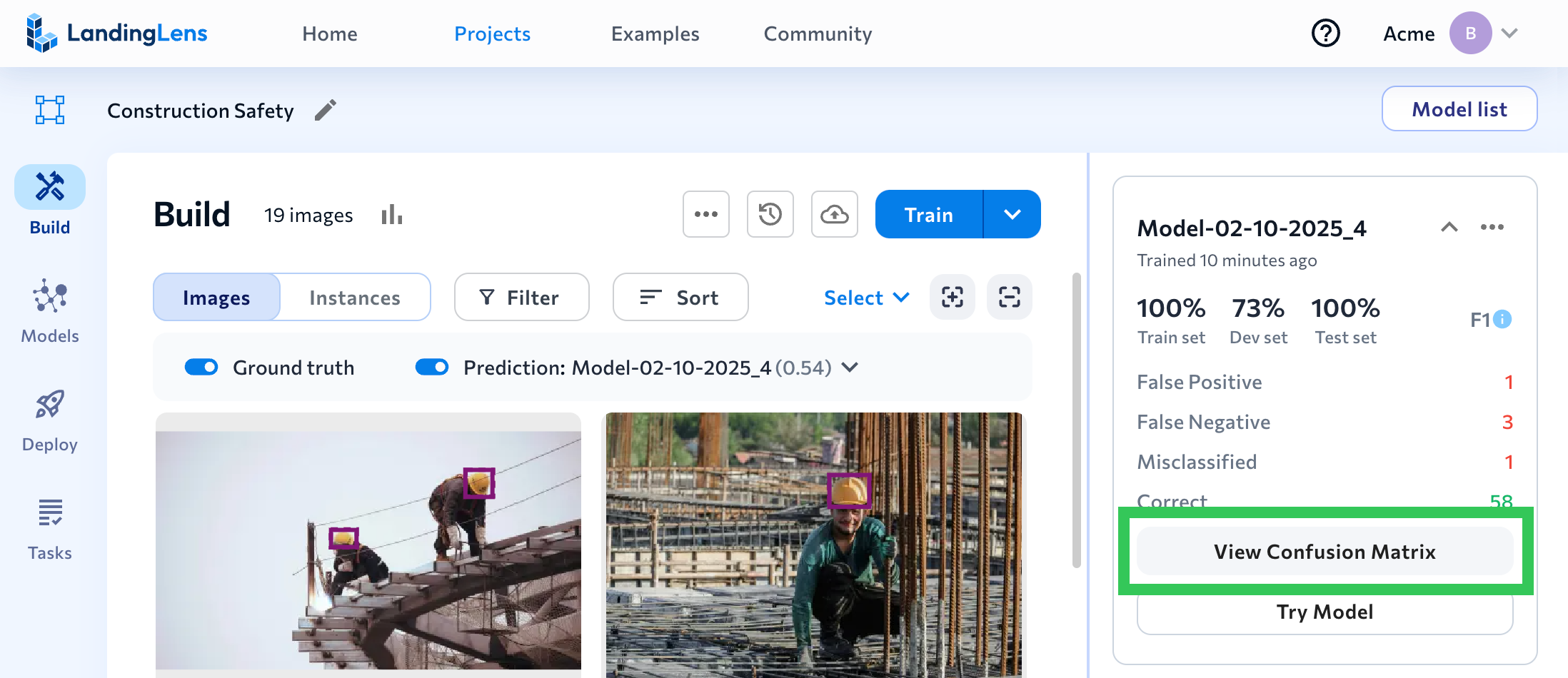

We've streamlined the experience for viewing and analyzing model performance right after training a model! We've rearranged a few elements on the Build page and surfaced key model performance metrics. These updates are designed to quickly give you the data you need to decide whether to fine-tune your model before deploying it.

Here's an overview of the updated model performance tools on the Build page. Want a deeper dive on these features? Check out View Predictions and Model Reports!

| # | Description |
|---|---|
| 1 | Turn on and manage Predictions all in one place! When you turn on Predictions, you can now select the model and confidence threshold you want to see predictions for. Get more details in View Predictions. |
| 2 | Click Try Model to see how the model performs on new images. This is the same as the "Predict" button in previous versions of LandingLens. |
| 3 | Key model performance data now displays on model tiles. You can now see a few model tiles at a time, so that you can compare model performance at a glance. The model tile shows the model's F1 or IoU score for each data split (Train, Dev, and Test) and the number of Correct, False Positive, False Negative, and Misclassification predictions. |
| 4 | Clicking a model tile opens a window with detailed performance data and a confusion matrix. You can toggle between the F1 or IoU, Precision, and Recall scores. Get more details in Model Reports. |
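For reference, the standard definitions behind Precision, Recall, and F1 are sketched in Python below, computed from true positive, false positive, and false negative counts. How LandingLens maps its Correct, False Positive, False Negative, and Misclassification outcomes onto these counts is not stated here, so the inputs are left as plain counts.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall; 2TP / (2TP + FP + FN) is equivalent.
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return precision, recall, f1


print(precision_recall_f1(tp=8, fp=2, fn=2))  # (0.8, 0.8, 0.8)
```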

LandingLens APIs

You can now retrieve a model's training metrics and confusion matrix using the LandingLens API. To learn more about how to use these training APIs, see this Training Metrics tutorial.

Model Training Metrics

The training metrics include the Performance, Recall, and Precision scores for the model. Use this endpoint to get a model's training metrics:

/v1/projects/{project_id}/train/{training_id}/results/metrics

Get more information about this API in Get Metrics Summary.

Model Confusion Matrix

The confusion matrix lists the number of correct predictions, false negatives, false positives, and misclassifications. Use this endpoint to get a model's confusion matrix:

/v1/projects/{project_id}/train/{training_id}/results/confusion-matrix

Get more information about this API in Get Confusion Matrix For All Splits.
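Both training endpoints can be called with any HTTP client. The standard-library Python sketch below assumes the base URL `https://api.landing.ai` and an API key sent in an `apikey` header; both are assumptions to confirm against the LandingLens API reference. The response fields are not described here, so the decoded JSON is returned as-is.

```python
import json
import urllib.request
from typing import Any

BASE_URL = "https://api.landing.ai"  # Assumed base URL; confirm in the API reference.


def build_results_request(
    project_id: str, training_id: str, resource: str, api_key: str
) -> urllib.request.Request:
    """Build a GET request for a training result: "metrics" or "confusion-matrix"."""
    if resource not in ("metrics", "confusion-matrix"):
        raise ValueError(f"unsupported resource: {resource}")
    url = f"{BASE_URL}/v1/projects/{project_id}/train/{training_id}/results/{resource}"
    # The "apikey" header name is an assumption about how the API authenticates.
    return urllib.request.Request(url, headers={"apikey": api_key, "Accept": "application/json"})


def fetch_json(request: urllib.request.Request) -> Any:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `fetch_json(build_results_request(project_id, training_id, "metrics", api_key))`, where the IDs and key are your own values.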

Vision Agent v0.2.79

We've updated and added several tools to Vision Agent! All tools are available in both our repository and the UI-based version of Vision Agent. Have questions about Vision Agent or want to learn more? Check out our dedicated Discord server here!

New tools:

- Florence-2 image captioning: Uses a Florence-2 large model to caption images in two modes: Brief and Detailed.

- Generic object detection: Uses a Florence-2 large model to detect generic objects in images without needing a text prompt.

- Generic image segmentation: Uses a ResNet-50 DETR model to perform panoptic segmentation on images without needing a text prompt.

- Florence-2 visual question answering with context: Uses Florence-2 image captioning and RoBERTa base to answer questions about images with generated text.

- Depth generation: Uses a Depth Anything V2 small model to generate monochrome depth images using relative depth estimation from RGB images.

- Pose generation: Uses ControlNet OpenPose conditioning to generate OpenPose stick images from RGB images.

- Soft edge image generation: Uses ControlNet HED conditioning to generate HED images from RGB images.

- Surface normal generation: Uses MiDaS 3.0 to generate surface normal images from RGB images.

- Template matching: Uses OpenCV-based template matching with rotation to find template images in original images.

Updated tools:

- Extract_Frames: Adds the capability to extract frames from YouTube videos. Vision Agent can take in videos either via YouTube links or local file uploads.

June 6, 2024: Use Label Assist to Improve Model Performance

We just released a new version of LandingLens that includes these two exciting features:

Label Assist

We've introduced a new tool called Label Assist that suggests new labels or improvements to labels! Use Label Assist to speed up the labeling process and help make your labels ("ground truth" annotations) more accurate, so that the next model will have better performance.

Label Assist can spot missing labels and suggest label improvements. For example, if there's a large space between an object and the original bounding box, Label Assist might suggest a tighter label.

Label Assist shows you its suggestions on the image details page as dashed bounding boxes. Click a suggestion to accept it, or click Accept All to accept all suggestions for an image. After reviewing and accepting suggestions, train the model again to include the newest labels in a model. For detailed information, check out the full article here: Label Assist.

Label Assist

Download CSVs from Evaluation Sets

When looking at an evaluation set's Performance Report, you can now download a CSV of information about the images in that evaluation set. The CSV includes several data points for each image, including the labels ("ground truth") and model's predictions. The CSV download is available for Object Detection and Classification projects.

For more information, go to Download CSV of Evaluation Set.

Download a CSV for the Evaluation Set

May 24, 2024: LandingLens API

We now offer a LandingLens API! The LandingLens API is a RESTful API that allows you to use LandingLens programmatically. You can use the LandingLens API to perform many tasks, including:

- Create projects

- Upload images to projects

- Create classes

- Train models

- Deploy models to cloud endpoints hosted by LandingAI (cloud deployment).

Access the LandingLens API here.

May 6, 2024: Simplified Pricing Plans, Model Downloads, and More!

We’re simplifying our LandingLens plans to offer more capabilities and an easy-to-understand pricing structure! You can check out all the details on our Pricing page, but the most game-changing updates are that all users can now:

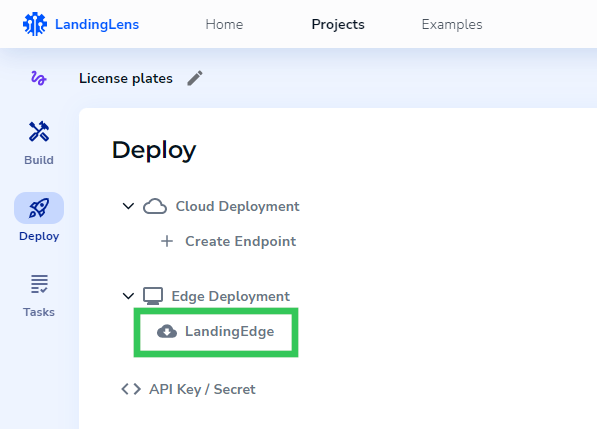

- Use LandingEdge, our edge deployment solution.

- Use Docker Deployment, which empowers you to run model inference programmatically.

- Download models from LandingLens! You can load the models to LandingEdge or Docker to deploy offline, or you can use the ONNX file to deploy the model via your own app.

Keep reading and watch the video below to get more details about these updates and how you can leverage them.

Use LandingEdge & Docker Deployment Today—No Licenses Needed!

In an effort to make it as easy as possible to deploy your LandingLens model, LandingEdge and Docker Deployment are now available to users on all pricing plans.

Plus, we’ve removed the requirement to have a license to run LandingEdge and Docker Deployment. You can now download and run these tools without getting a license from LandingAI.

If you have an older version of LandingEdge and Docker Deployment, download the latest versions to leverage the “no-license” benefit.

Download Models

You can now download your computer vision model from LandingLens! The model is downloaded as a ZIP file that you can load directly into LandingEdge and Docker Deployment if you want to deploy offline.

The downloaded model also includes the ONNX file for the model. ONNX (Open Neural Network Exchange) is an open source format for AI models, so you can use your model in your own custom applications.
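To use the ONNX file in your own application, one option is ONNX Runtime. The Python sketch below assumes the `onnxruntime` and NumPy packages are installed, that the model has a single input, and that the caller has already preprocessed the image to match that input's shape and type. The file name inside the ZIP and the meaning of the outputs are not described here, so the outputs are returned raw.

```python
def run_onnx_model(model_path, input_array):
    """Run one inference with an ONNX model and return its raw outputs."""
    import onnxruntime as ort  # Imported here so this module loads without it.

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    # Feed the array to the model's first (assumed only) input by name.
    input_name = session.get_inputs()[0].name
    # Passing None as the output names returns every output.
    return session.run(None, {input_name: input_array})
```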

Only Enterprise users can use downloaded models for commercial purposes. Models downloaded from the Free and Visionary plans can only be used for noncommercial purposes.

Workflow for Downloading Models

This new version of LandingLens introduces the concept of “activated projects”. You must activate a project before you can download models from it or deploy models from it using LandingEdge or Docker Deployment. Each pricing plan has a maximum number of projects that can be active at the same time:

- Free: 1 project

- Visionary: 3 projects

- Enterprise: Starts at 5 projects

Projects are activated in a new page called Active Projects. For detailed instructions about how to activate and deactivate projects, go to Activate Projects.

View Activated Projects

After you activate a project, you can download as many models from the project as you like. Models are downloaded from the Models tab.

Download the Model

New Credit Calculation for Custom Training

In addition to changing the LandingLens pricing plans, we’re also changing how credits are charged for Custom Training. Each LandingLens plan includes a set of credits, which are like a form of currency in LandingLens. You spend credits to train models and run inferences.

Until now, training one image cost 1 credit, and running inference on one image cost 1 credit. As of today (May 6, 2024), the credit cost for Custom Training is calculated differently: it is now based on the project type and the custom training settings selected. In general, increasing any of the settings (like increasing the number of epochs) increases the cost to train and run inference.

With Custom Training, the cost to train the model and run inference on that model might be more or less than 1 credit.

After you configure your settings—and before you train the model—LandingLens shows you the exact credit cost it will take to train the model and run inference on it.

Custom Training: Preview the Cost for Training and Running Inference

Each Model Can Have a Different Inference Cost

If you run Custom Training multiple times in the same project, each resulting model can have a different cost for running inference. This is because each round of Custom Training can use different settings, each of which impacts the cost.

For example, you could have three models with the following inference costs in the same project:

| Model | Training Type | Credit Cost for Inference |
|---|---|---|
| Model A | Fast Training (default training method) | 1 credit / image |
| Model B | Custom Training | 1.62 credits / image |
| Model C | Custom Training | 0.35 credits / image |
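Because the inference cost is set per model, the credits needed for a batch of images depend on which model runs. The Python sketch below multiplies the per-image costs from the example table by a hypothetical batch of 1,000 images. It uses `Decimal` to avoid floating-point rounding in fractional credit values and does not model any rounding rules LandingLens might apply.

```python
from decimal import Decimal

# Per-image inference costs from the example table.
INFERENCE_COST_PER_IMAGE = {
    "Model A": Decimal("1"),
    "Model B": Decimal("1.62"),
    "Model C": Decimal("0.35"),
}


def inference_credits(model: str, num_images: int) -> Decimal:
    """Credits needed to run inference on num_images with the given model."""
    return INFERENCE_COST_PER_IMAGE[model] * num_images


for name in INFERENCE_COST_PER_IMAGE:
    print(name, inference_credits(name, 1000))
# Model A 1000
# Model B 1620.00
# Model C 350.00
```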

April 18, 2024

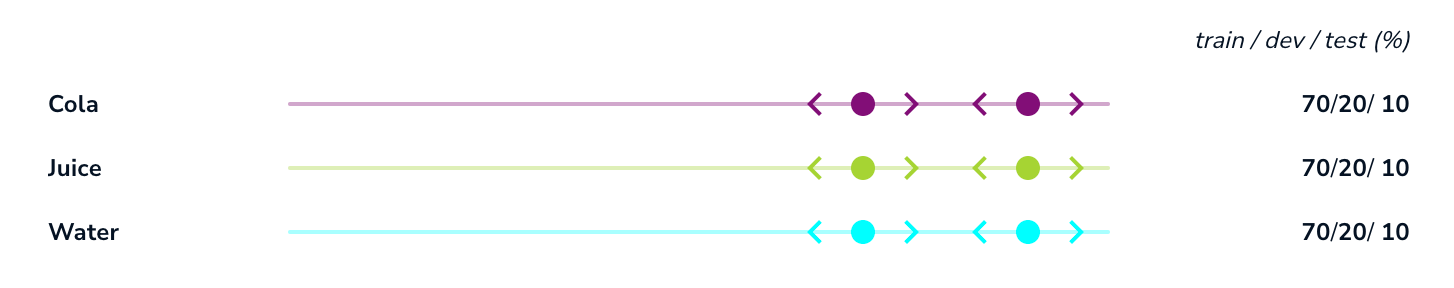

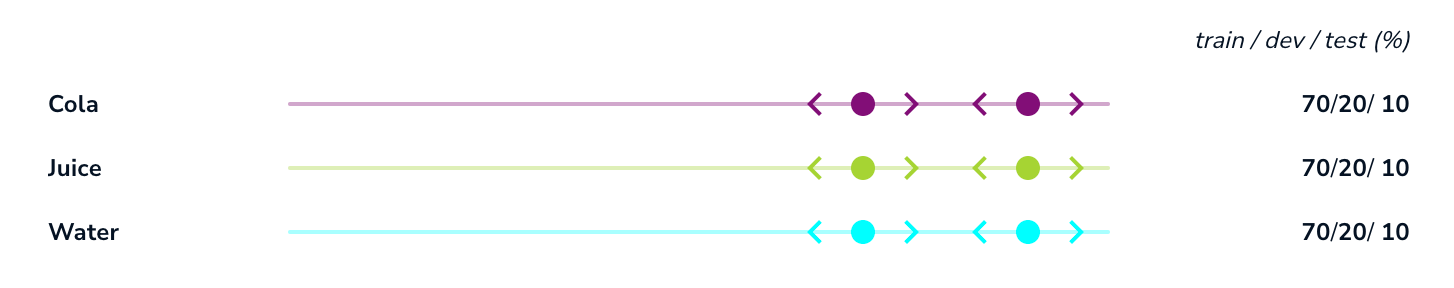

Computer vision models are trained based on sets of labeled images called splits. The three splits are the Train set, Dev set, and Test set. During the model training process, the model uses the splits to learn patterns, validate its predictions, and test its accuracy. Images in each split must be labeled in order for the model to learn what data is and isn’t relevant to each class.

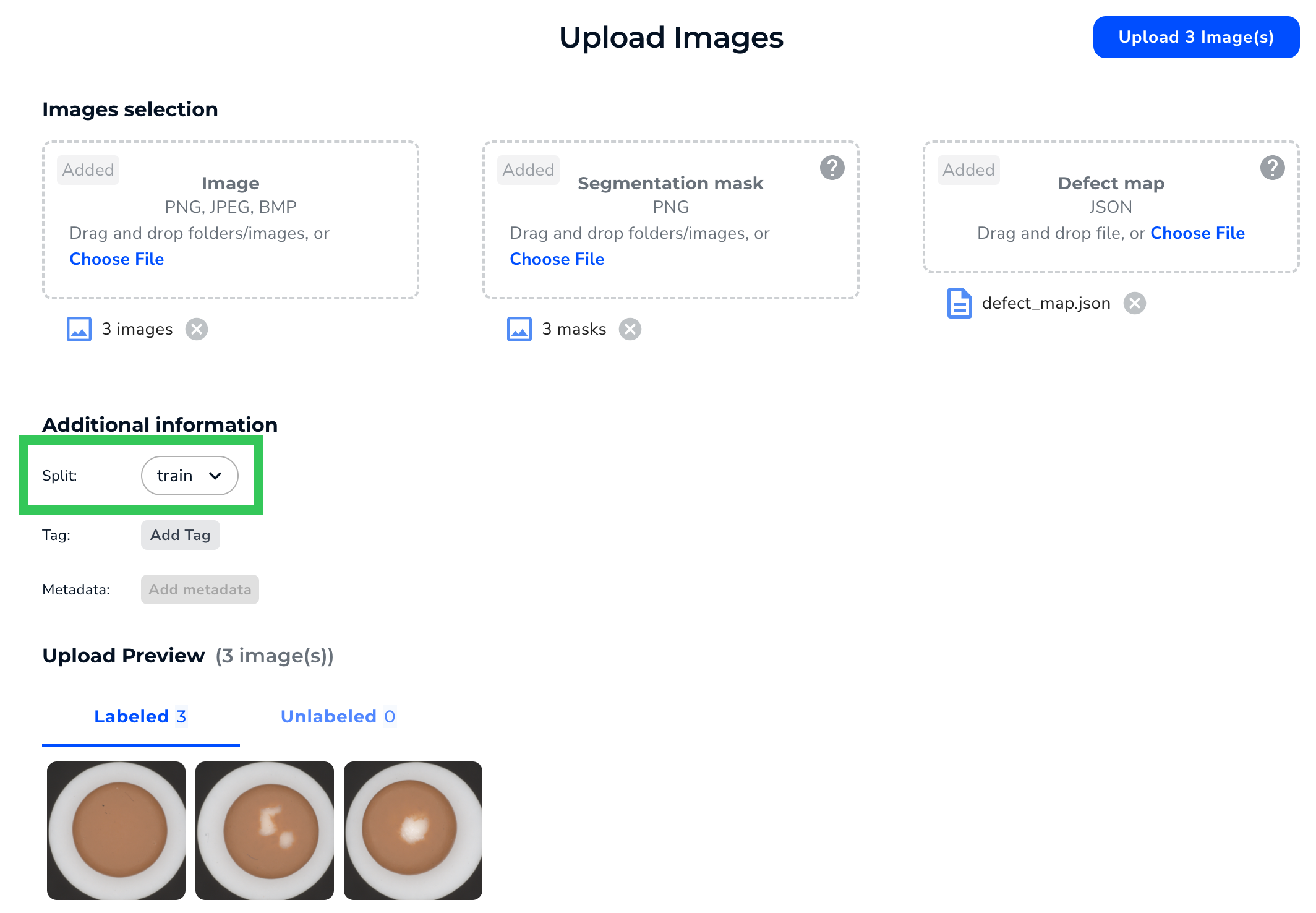

LandingLens offers many ways to assign splits to images, including assigning splits when uploading images. Before this release, splits could be assigned at upload even if some images weren’t labeled. Now, to help you manage splits more accurately, a split can only be assigned at upload if those images are labeled. To learn all about splits in LandingLens, go to Splits.
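The rule that splits are assigned at upload only to labeled images can be illustrated with a short Python sketch. This is not LandingLens code: the `(name, is_labeled)` input, the 70/20/10 percentages, and the seeded shuffle are hypothetical choices. Unlabeled images are simply left without a split.

```python
import random
from typing import Dict, List, Optional, Tuple


def assign_splits(
    images: List[Tuple[str, bool]],
    train_pct: int = 70,
    dev_pct: int = 20,
    seed: int = 0,
) -> Dict[str, Optional[str]]:
    """Assign Train/Dev/Test to labeled images only; unlabeled images get None."""
    labeled = [name for name, is_labeled in images if is_labeled]
    random.Random(seed).shuffle(labeled)  # Deterministic shuffle for repeatable splits.

    # Integer arithmetic avoids floating-point surprises in the split sizes.
    n_train = len(labeled) * train_pct // 100
    n_dev = len(labeled) * dev_pct // 100

    splits: Dict[str, Optional[str]] = {name: None for name, _ in images}
    for i, name in enumerate(labeled):
        if i < n_train:
            splits[name] = "Train"
        elif i < n_train + n_dev:
            splits[name] = "Dev"
        else:
            splits[name] = "Test"  # Remaining labeled images go to Test.
    return splits
```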

Select a Split

April 2, 2024

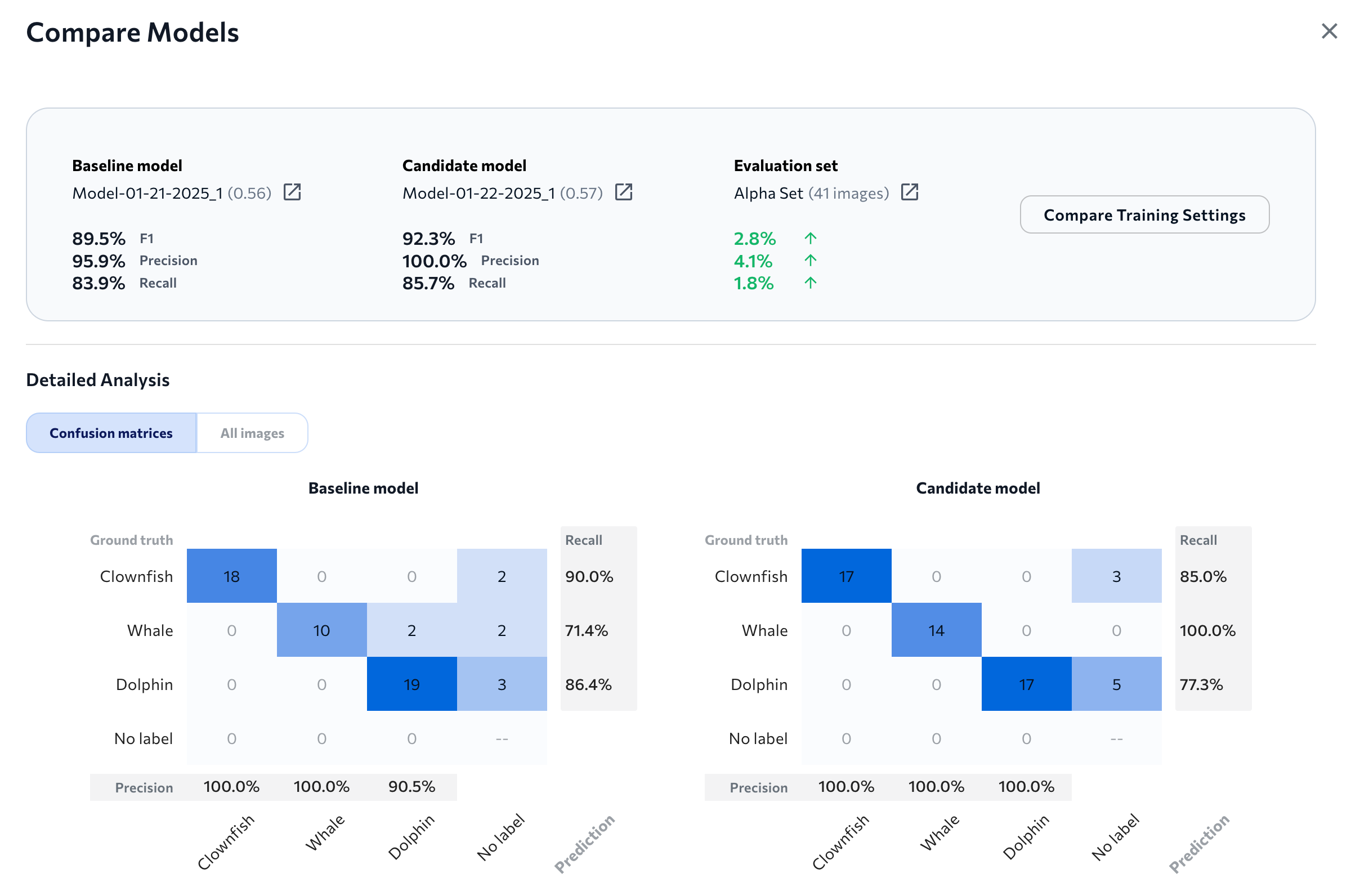

We just released a new version of LandingLens that features a new tool: Compare Models! The Compare Models tool is a great way to evaluate performance on multiple iterations of the model. It can help you identify if you need to improve your labels, datasets, and hyperparameters.

When you run the Compare Models tool, you set a baseline model and a candidate model. LandingLens then shows you if the candidate model performed better or worse for each prediction outcome (False Positive, False Negative, Correct, etc.). You can even see a side-by-side comparison of how the baseline and candidate models performed on each image in the dataset. To get the full details about how to use the Compare Models tool, go here.
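The comparison can be thought of as a per-outcome difference between two sets of counts. The Python sketch below is a hypothetical illustration, not the tool's implementation: it assumes more Correct predictions is better and fewer of every other outcome is better, and the counts are made-up example values.

```python
from typing import Dict

# Outcomes where a higher count is an improvement; all other outcomes are errors.
HIGHER_IS_BETTER = {"Correct"}


def compare_outcomes(baseline: Dict[str, int], candidate: Dict[str, int]) -> Dict[str, str]:
    """Label each outcome as better, worse, or unchanged for the candidate model."""
    verdicts = {}
    for outcome in sorted(set(baseline) | set(candidate)):
        delta = candidate.get(outcome, 0) - baseline.get(outcome, 0)
        if delta == 0:
            verdicts[outcome] = "unchanged"
        elif (delta > 0) == (outcome in HIGHER_IS_BETTER):
            verdicts[outcome] = f"better ({delta:+d})"
        else:
            verdicts[outcome] = f"worse ({delta:+d})"
    return verdicts


baseline = {"Correct": 80, "False Positive": 10, "False Negative": 6}
candidate = {"Correct": 85, "False Positive": 12, "False Negative": 6}
print(compare_outcomes(baseline, candidate))
```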

Model Comparison


March 18, 2024

A new version of LandingLens is now available! This version includes some small (but mighty!) enhancements that can streamline your workflow. These improvements include:

- You can now train up to 10 models at once. For more information on parallel training, go here.

- We’ve added a safeguard to Custom Training that ensures that crops are only applied after resizes or rescales. For more information about cropping during Custom Training, go here.

- Model names can now include special characters.

Bug Fixes

This release of LandingLens includes the following bug fixes:

- When using the Auto Split tool, the lines for each class align with the percentages on the right side of the page. Previously, the alignment was off.

Sliders in Auto Split Are Now Aligned with the Class Names and Percentages

- The Deploy page for Classification projects no longer shows a Confidence Score. LandingLens only uses a Confidence Score for Object Detection and Segmentation projects.

March 12, 2024

In the new release of LandingLens, a Validation Chart now displays on the Training Information tab for a model.

Validation Chart

The Validation chart is calculated on the Dev split. This chart displays when the model was trained using Custom Training and the Dev split has at least 6 images.

If the model improves during the validation process, the line goes up over time. The line will look slightly different for each project type because each uses a different validation metric:

- Object Detection: mAP (Mean Average Precision)

- Segmentation: mIoU (mean Intersection over Union)

- Classification: F1 score
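Of the three validation metrics, mIoU is the simplest to show in a few lines. The Python sketch below computes it from flattened per-pixel class IDs using the standard definition (IoU per class, then the mean over classes present in either mask). It illustrates the metric only; LandingLens's implementation may, for example, treat background or absent classes differently.

```python
from typing import Sequence


def mean_iou(ground_truth: Sequence[int], prediction: Sequence[int]) -> float:
    """Mean Intersection over Union across classes, given per-pixel class IDs."""
    if len(ground_truth) != len(prediction):
        raise ValueError("masks must have the same number of pixels")
    if not ground_truth:
        raise ValueError("masks must not be empty")
    classes = set(ground_truth) | set(prediction)
    ious = []
    for cls in classes:
        intersection = sum(1 for g, p in zip(ground_truth, prediction) if g == cls and p == cls)
        union = sum(1 for g, p in zip(ground_truth, prediction) if g == cls or p == cls)
        ious.append(intersection / union)  # union > 0 because cls appears in at least one mask
    return sum(ious) / len(ious)
```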

For more information about a model's Training Information, go to Analyze and Compare Models.

February 29, 2024

The newest version of LandingLens introduces more Model Size options for Custom Training. The Model Size refers to the architecture and number of parameters used to train the model.

We’ve added a third model size option to Object Detection projects: RtmDet-[9M]. This option leads to the fastest training and inference times when compared to the other two model sizes. This model size uses the RTMDet architecture and 9 million parameters.

For detailed information about creating custom-trained models in LandingLens, go to Custom Training.

February 21, 2024

The latest version of LandingLens features a redesigned process for Custom Training! You now have greater control over the dataset and splits used during the training process. Additionally, the user interface now guides you more clearly through configuring the training settings.

All of the transforms and augmentations from the previous design are still available. Additionally, the new process does not impact any models you’ve already developed.

The following sections highlight some new key points in Custom Training. To learn all the details, go to Custom Training.

New Custom Training Interface

Select a Dataset

In previous versions of LandingLens, the Custom Training process would automatically apply to the dataset currently in the Build tab. Or, you could go to the Dataset Snapshots module, select a dataset, and then start Custom Training.

When you click Custom Training in the new process, you’re now first prompted to select a dataset. This speeds up the process and shows you all of your options in one place.

Select a Dataset

Set Up Splits

If you choose to run Custom Training on the dataset currently in the Build tab, and those labeled images don’t have splits assigned to them yet, you can assign splits during Custom Training.

You can assign splits based on all images in the dataset or based on each class.

Set Up or Review Splits

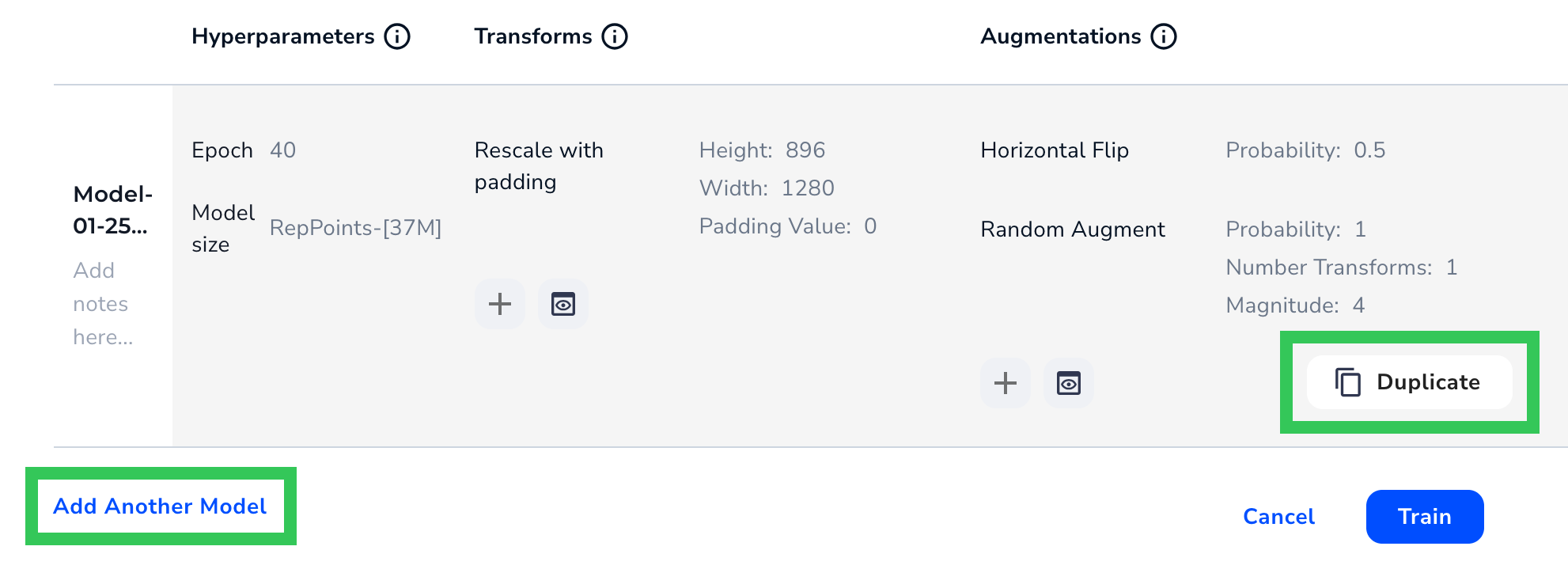

Train Multiple Models for the Same Dataset at Once

You can now configure multiple sets of training parameters for the same dataset at once. This is helpful when you want to see how changing a parameter, like the number of epochs, will affect the model. By setting up all training parameters at once, you don’t need to go through the Custom Training process multiple times.

To add another set of training parameters, click Add Another Model or Duplicate.

Add a Set of Training Settings

January 30, 2024

In this release, we’ve introduced three significant updates to streamline your computer vision model creation process.

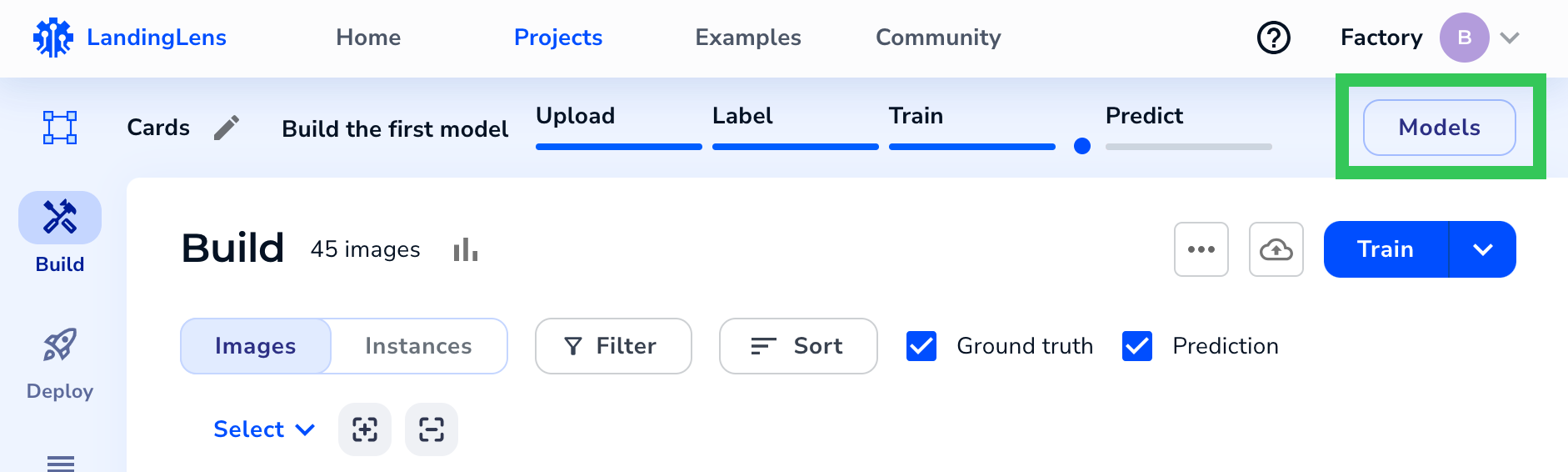

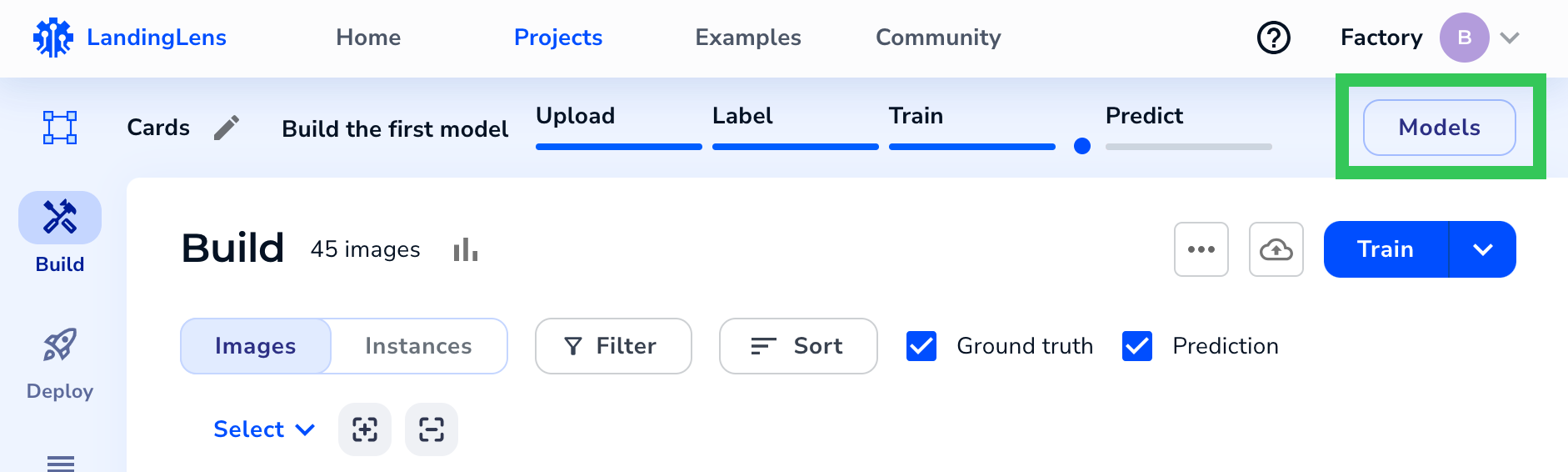

First, we’ve made it much easier to compare and analyze model performance. All models for a project are now centralized on a new page called Models, which allows you to compare model performance across different datasets.

Second, we’ve updated Segmentation projects to offer more flexibility and faster inference speeds.

And finally, we’ve redesigned the labeling experience for Object Detection, Segmentation, and Classification projects.

Keep reading to get more details about these updates!

Compare Models

You can now quickly and easily compare model performance across multiple datasets in a single project.

All trained models for a project now appear in a table on a new tab called Models. By default, you can see how each model performed on its Train, Dev, and Test sets. You can also add existing dataset snapshots—or filtered versions of them—to the table. This matrix empowers you to quickly compare model performance on a variety of datasets. From here, you can also deploy models.

Comparing model performance on the Models tab isn't a required step in LandingLens, but it's a useful technique for identifying your most accurate models. For full details, go to Compare Models.

Compare Model Performance Across Different Datasets on the Models Page


Impacts to Cloud Deployment

We anticipate that most users will want to compare model performance in the Models tab before choosing which model to deploy. Because of this, the Cloud Deployment process now starts from the Models tab. After comparing the models in the table, simply click the Rocket (deploy) icon for the model you want to deploy. The rest of the deployment process is the same as before.

Deploy Models from the Models Page


We’ve made two additional changes to Cloud Deployment to ensure more consistent comparisons between endpoints:

- Once a model is deployed to an endpoint, that model can’t be swapped for another.

- The Confidence Threshold of a deployed model can’t be changed.

Updated Segmentation Projects

As part of this release, the Segmentation projects in your LandingLens organization have new capabilities, including:

- You can upload up to 10,000 images to a Segmentation project.

- When using Custom Training for Segmentation projects, you can now resize images to be up to 1024x1024px.

- Running inference on models trained via Custom Training will now be faster.

- Updated Model Size options in Custom Training. Keep reading for more information.

Updated Model Sizes in Custom Training

When using Custom Training, you can now choose from these two model sizes:

- FastViT-[14M]: Train and run inferences faster. Uses the FastViT transformer architecture and 14 million parameters.

- SegFormer-[14M]: Capture more complex patterns in the training data. Training and running inferences will take longer. Uses the SegFormer semantic segmentation framework and 14 million parameters.

Model Sizes for Segmentation

Redesigned Labeling Experience

In this release, the label view now has a brand-new look, offering a more modern and intuitive interface while maintaining all existing tools and functionalities for Object Detection, Segmentation, and Classification. For more information, go to Labeling.

Here’s what you can expect the label view page to look like for each project type:

Object Detection

New Labeling Experience for Object Detection

Segmentation

New Labeling Experience for Segmentation

Classification

New Labeling Experience for Classification

December 19, 2023: Train Multiple Models at Once

When one model is training, you can now start training another model! This is helpful if you want to compare performance between multiple datasets or model training settings. Training multiple models at once is sometimes called "parallel training".

Parallel training is available for both the Fast Training and Custom Training methods.

Let’s say you want to build two models for the same dataset using Custom Training. You want one model to use 30 epochs and the other to use 40 epochs. You can start training the second model without waiting for the first one to complete. This capability empowers you to run experiments faster.

To see the status of a model, click the Models drop-down menu. Models that are still training have the status In Progress.

Two Models Are Training at the Same Time

Bug Fixes

In previous versions of LandingLens, training a Visual Prompting model sometimes led to inaccurate predictions and this error message:

“Model training failed. Please try training a new model.”

This bug has been fixed, and Visual Prompting models now train correctly.

December 4, 2023

We have great news for our LandingEdge and Docker users! As of today, you no longer need to deploy a model via Cloud Deployment (or by generating a deployable version of a model) to use that model in LandingEdge and Docker.

All models trained starting today will automatically be deployable via LandingEdge and Docker! Each deployable model uses its best Confidence Score. To see all models that can be deployed from a project, go to Deploy and click Deployable Models.

Deployable Models

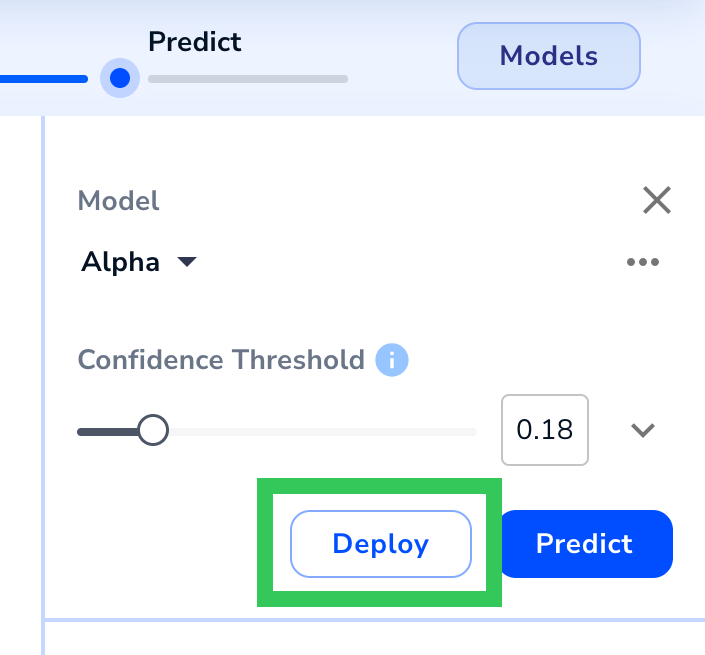

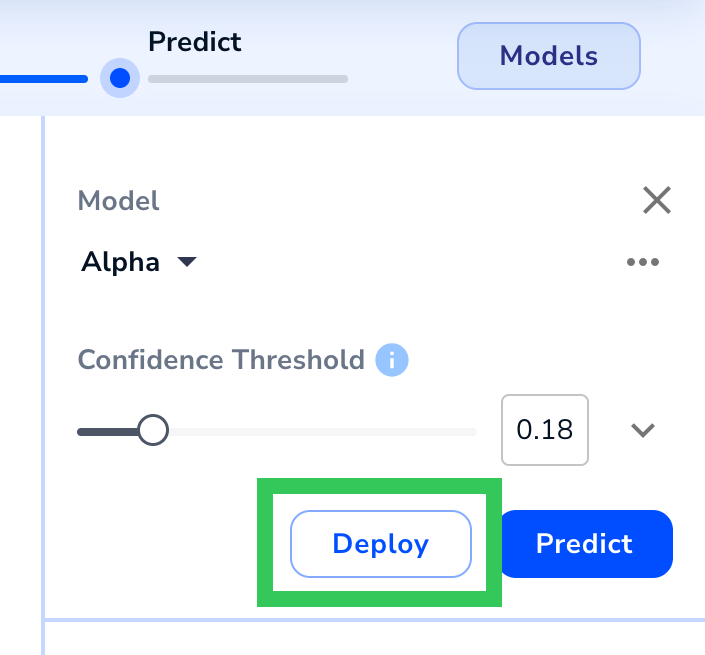

If you want to use a different Confidence Score for a model, or if you don't see your model in the Deployable Models table, you can either deploy it via Cloud Deployment or use the following procedure to generate a deployable model:

- Open the project with the model you want to deploy.

- Click Models to open the Models panel.

Open the Models Panel

- Select the model you want to deploy from the Model drop-down menu.

- Use the Confidence Threshold slider to select the Confidence Score you want the model to have.

- Click Deploy.

Deploy the Model after Selecting a Confidence Score

- Select Self-Hosted Deployments.

- Click Generate Deployable Model. LandingLens creates a deployable model and opens the Deployable Models table on the Deploy page.

Generate a Deployable Model

November 21, 2023

In this newest release of LandingLens, we’ve added more ways to manage your datasets. Here are the highlights:

- You can now save “snapshots” of all or some images in a project, and train models based on those groups of images. You can have multiple snapshots in each project!

- You can add tags (keywords) to images.

- LandingLens automatically saves all models during model training.

- You can now apply split, metadata, and tag information when uploading images to any project type.

To learn more about these features, keep reading!

Changes to Dataset Versioning: Introducing Dataset Snapshots

In recent LandingLens releases, training a model and saving it created a “snapshot” of all the data in that model, including the images, labels, and predictions. You could create a new project from a dataset and even revert back to one. However, datasets were only saved for models, and you couldn’t train based on those datasets.

In this release, we’ve made the dataset versions more flexible, and we’re now calling them “dataset snapshots”, or just “snapshots” for short. Here are some of the things you can now do:

- Create a snapshot at any time! You no longer have to train a model to create a snapshot.

- Create a snapshot based on all the images in your project OR on the specific ones you’ve selected.

- Train a model using only the snapshot.

- Download the Pascal VOC files and class map for a snapshot. This gives you the ability to re-upload the labeled images to LandingLens in the future, if needed.

Plus, you still have the ability to create new projects from snapshots, revert your project to a snapshot, and download a CSV with snapshot information.

For detailed information, go to Dataset Snapshots and watch the following tutorial:

Tag Images

You can now add tags (keywords) to images in your dataset! Using tags helps you organize and filter the images in your dataset.

For example, let's say that you have images from Camera A and Camera B. You can create a tag for each camera and apply the tags to the corresponding images. Then, after you train a model, you can filter by all Camera A images, and see how the model performed on those. You can then do the same for Camera B images.

Tags are project-specific; creating a tag in one project doesn't add it to other projects. You can apply multiple tags to each image.
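The Camera A and Camera B example amounts to grouping results by tag. The Python sketch below is a hypothetical illustration: each record holds an image's tags and whether the model's prediction for it was correct, a shape that is an assumption rather than a LandingLens export format. Because an image can carry multiple tags, it counts toward every tag it has.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def accuracy_by_tag(records: List[Tuple[Set[str], bool]]) -> Dict[str, float]:
    """Fraction of correct predictions among images carrying each tag."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for tags, is_correct in records:
        for tag in tags:  # An image with several tags counts toward each of them.
            totals[tag] += 1
            correct[tag] += int(is_correct)
    return {tag: correct[tag] / totals[tag] for tag in sorted(totals)}


records = [
    ({"Camera A"}, True),
    ({"Camera A"}, False),
    ({"Camera B"}, True),
    ({"Camera B", "Night"}, True),
]
print(accuracy_by_tag(records))  # {'Camera A': 0.5, 'Camera B': 1.0, 'Night': 1.0}
```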

For detailed information, go to Tags and watch the following tutorial:

All Trained Models Are Now Automatically Saved

When you train a model, LandingLens now automatically saves and names it. This ensures that you can access all versions of your data in the snapshots dashboard and prevents models from being accidentally overwritten.

The default model naming convention is Model-mm-dd-yyyy_n, where n is the number of models in the project trained that day. For example, let’s say you train six models in a project on December 10, 2023. The default name of the sixth model will be Model-12-10-2023_6. You can rename a model at any time.
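The naming convention can be expressed as a small function. The Python sketch below reproduces the documented format only; the function name and the idea of passing in the number of models already trained that day are hypothetical.

```python
from datetime import date


def default_model_name(trained_on: date, models_already_trained_that_day: int) -> str:
    """Build Model-mm-dd-yyyy_n, where n is this model's position among that day's models."""
    n = models_already_trained_that_day + 1  # The new model is the next one trained that day.
    return f"Model-{trained_on:%m-%d-%Y}_{n}"


# Five models already trained on December 10, 2023, so the next one is the sixth.
print(default_model_name(date(2023, 12, 10), 5))  # Model-12-10-2023_6
```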

Default Name for the First Model Trained in a Project on November 18, 2023

Consistent Upload Experience

You can now apply split, metadata, and tag information when uploading images to any project type. Previously, you could only apply splits when uploading images to Segmentation projects. The updated upload page provides a more consistent experience and allows you to apply important information at upload.

Apply Split, Metadata, and Tag Information at Upload to Any Project

October 30, 2023

We've had many requests for this specific feature, and the long wait is finally over—you can now create a new project by copying an existing dataset version! This creates a new project with the selected dataset.

When you copy a dataset, you can even include the existing labels and metadata.

Create a New Project

For more information, go to Create a New Project from an Existing Dataset or check out the tutorial video below.

October 10, 2023

This newest version of LandingLens gives you more flexibility across the board. In this release, we've added:

- A new way to upload images to your projects: via your smartphone!

- Support for an additional file type—MPO

- A new member type—Owner

- The ability to change a user’s member type

- More transparency for the credits used in deleted projects

For detailed info on each of these updates, keep reading!

Upload Images from Your Phone Directly into a LandingLens Project

You can now upload images from your phone directly into a project in LandingLens. To do this, click the Upload icon, then click Upload from Your Phone.

Generate and scan the QR code, and then take photos or select existing ones. All images are then uploaded into your project. To learn more, go to Upload Images from Your Phone.

Scan the QR Code and Click the Link on Your Phone

LandingLens Now Supports MPO Files

You can now upload MPO (Multi-Picture Object) files to LandingLens. An MPO file contains two JPG files that create a stereoscopic image when overlaid. When you upload an MPO file to LandingLens, LandingLens selects one of the two JPG files.

For more information about supported file types, go to Upload Images.

Owners and Changing Role Types

Before this new version, LandingLens offered two member types: Collaborators and Admins. Once you assigned a member type to a user, you couldn't change their role.

This new version of LandingLens offers a new role called Owner. Owners have all the permissions that Admins have, plus the ability to change a member's role! For example, if Anna was originally added as a Collaborator but now needs access to the API Key page, an Owner can "upgrade" Anna to be an Admin.

An organization can have up to three Owners. To learn more, go to Member Roles.

Make a Member an Admin

Track Credits for Deleted Projects

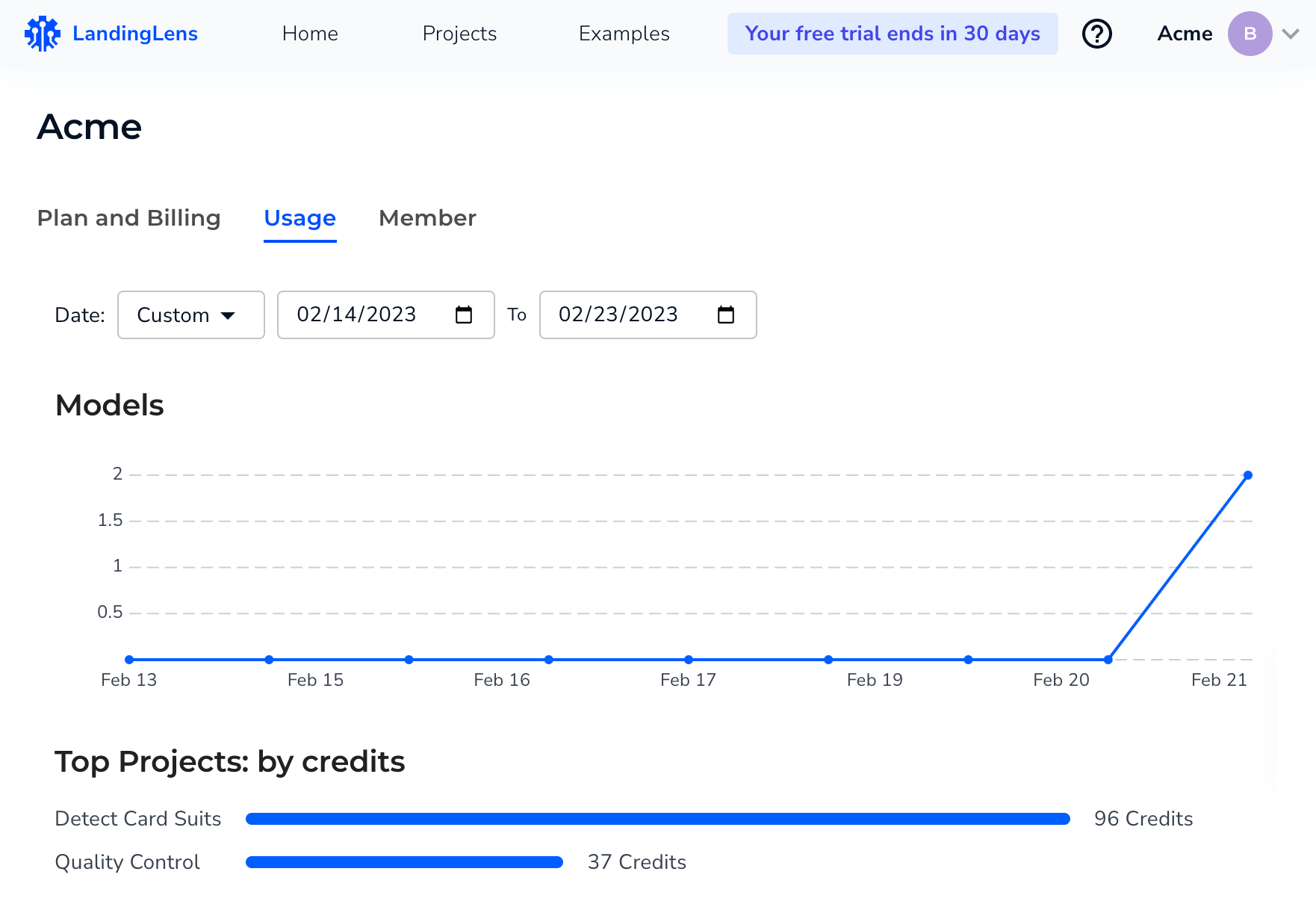

If you delete a project, the credits used on that project are now easier to track on the Usage dashboard.

Previously, if you deleted a project, the Top Projects: By Credits and Top Projects: By Images sections would show “(private project)” instead of the project name. Now, if you delete a project, the project name displays, making it easier to understand how credits were used.

The Usage Log continues to show “-” instead of the names of deleted projects, because LandingLens pulls this data from a different place.

For example, let’s say you deleted a project called “Quality Control”. The following screenshot shows how the Usage page would look after deleting the project.

The Usage Page After Deleting the "Quality Control" Project

The following table summarizes the changes:

| Section | Before | After |
|---|---|---|
| Top Projects: By Credits | (private project) | Project Name |
| Top Projects: By Images | (private project) | Project Name |
| Usage Log | - | - |

Bug Fixes

For users on the Classic Flow version of LandingLens: The Job hyperlinks on the Models tab now work correctly.

September 19, 2023

Welcome to the latest release of LandingLens! This update streamlines your project management experience.

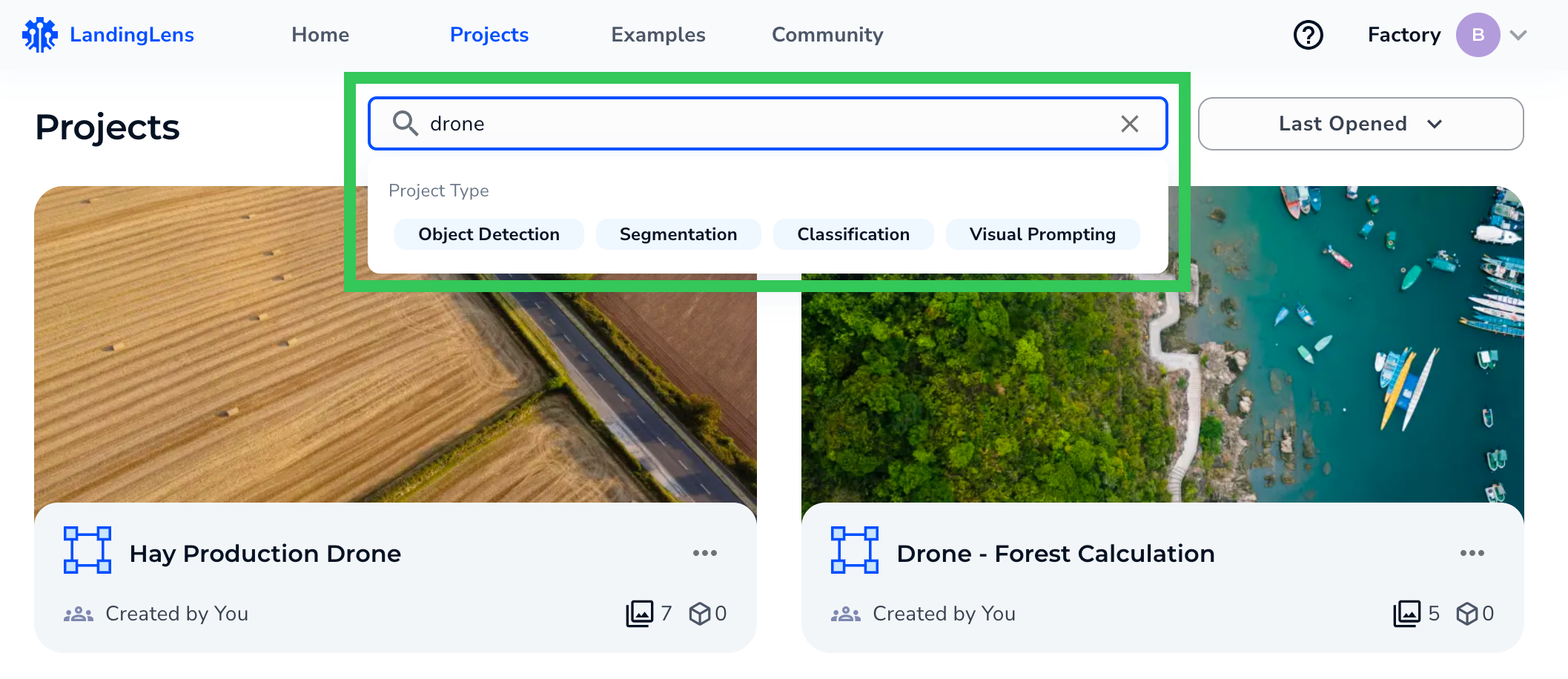

Now, when navigating the Projects page, you can effortlessly filter your search results by project type, providing you with even more refined control over your workflow. You can also now rename, delete, and invite users to projects directly from the Projects page.

Additionally, the ability to delete classes can now be enabled upon request for the Classic Flow version of LandingLens.

For more information, read the full release notes below.

New Features and Improvements

Filter by Project Type

When searching for a specific project on the Projects page, you can now filter by project type. To do this, enter your search term in the search bar and click the project type you want to search for.

For more information on searching for projects, go to Search for Projects.

Narrow Down Your Search

More Ways to Manage Projects

You can now perform these actions directly from a project card on the Projects page:

- Rename the project

- Invite users to the project (only applicable to private projects)

- Delete the project

To access these options, click the Actions icon on the project card. For more information on managing projects from the Projects page, go to Search for Projects.

New Project Actions You Can Access from the Project Page

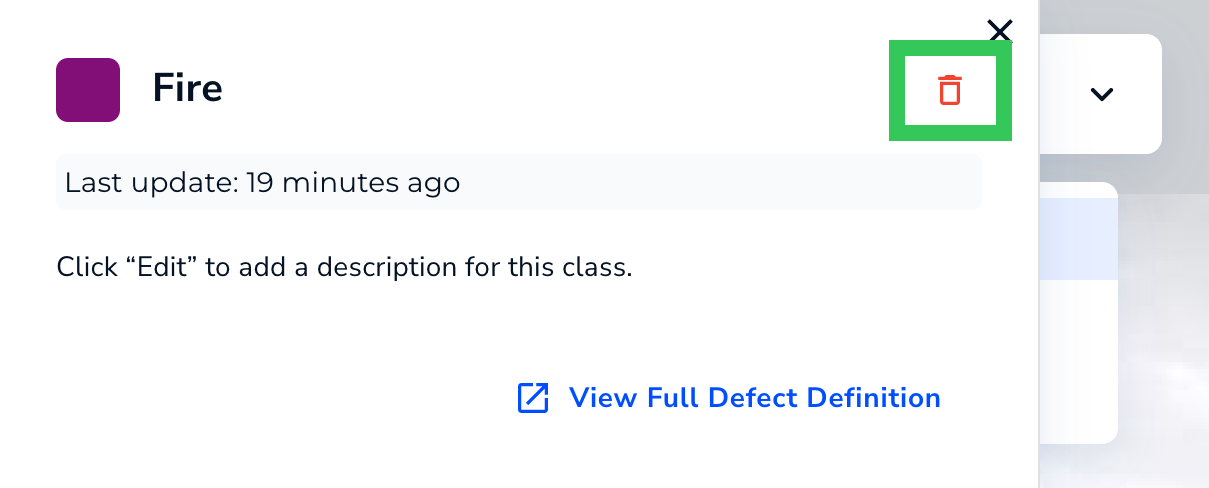

New Project Actions You Can Access from the Project PageClassic Flow Supports Ability to Delete Classes

If you are using the Classic Flow version of LandingLens, the ability to delete classes can now be enabled. To request this feature, contact your LandingAI representative.

For more information about deleting classes, go to Delete Classes.

Bug Fixes

- You no longer need to refresh the page to update how long ago a model was trained. Now, the time is updated automatically.

- If you delete a label or “discard” the name of a class before you create it in a Visual Prompting project, those changes are now saved. Previously, the label or class would still appear.

- We improved the transition from training a Visual Prompting model to seeing the results. Now, the predictions and model information load faster.

August 10, 2023

More Details Now Display on Project Cards

Project cards now display the total number of images in your dataset and trained models. You can hover over the icons to view more information, like the number of labeled images.

New Login Page Design

We've introduced a fresh look for the LandingLens login page.

Revamped the Model Sidebar

When you don't have any trained models, the Model sidebar displays a tooltip to help you effortlessly progress through the platform.

The Polygon Shortcut Changed from "P" to "W"

LandingLens is equipped with several keyboard shortcuts. For Segmentation projects, the shortcut for the Polygon tool changed from "P" to "W".

Bug Fixes

- Changed the file naming convention for downloaded CSV files of dataset snapshots. These files are now named after the model and the date the file was downloaded.

- A few "behind-the-scenes" bugs were fixed.

July 28, 2023

This newest version of LandingLens includes features to make it easier to manage your dataset and deploy your model!

Dataset Snapshots: More Control and Visibility into Your Dataset

With this release, we’re introducing the concept of dataset “snapshots”. Now, whenever you train a model, LandingLens saves your dataset—and the model’s predictions—as a “snapshot” that you can continue to access in your Project. With dataset snapshots, you can:

- Revert to an earlier dataset

- Download a CSV of information about the dataset

- See when the dataset information is gathered during the training process

We’ve also redesigned the model pop-up window to make it easier to navigate all the model information and reports.

Revert to an Earlier Dataset

You can now revert your Project to use an earlier dataset. For example, let's say that you trained a model, and later added more images and labels. After training the model a second time, you see that the first model performed better, so you want to go back to that dataset. You can do this by reverting the dataset.

Revert to the Selected Dataset

For detailed instructions, go to Revert to a Saved Dataset.

Download a CSV of Dataset Information

In Object Detection and Classification projects, you can now download a CSV of information about the dataset. The CSV includes several columns of data, such as Project Name, Image Name, and Split.

Download the CSV

For detailed instructions, go to Download a CSV of Dataset Data.
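Once downloaded, the CSV can be processed with standard tooling. The sketch below uses Python's `csv` module to count images per split. It assumes the header row contains a column named exactly `Split`; the sample rows and the split values (`train`, `dev`, `test`) are made up for illustration, and the real export contains more columns.

```python
import csv
import io
from collections import Counter

# Stand-in for a downloaded file. Column names come from the release note;
# the rows and split values are illustrative assumptions.
sample_csv = """Project Name,Image Name,Split
Quality Control,img_001.jpg,train
Quality Control,img_002.jpg,train
Quality Control,img_003.jpg,dev
Quality Control,img_004.jpg,test
"""

def images_per_split(csv_file) -> Counter:
    """Count how many images fall into each split, reading the header row for column names."""
    reader = csv.DictReader(csv_file)
    return Counter(row["Split"] for row in reader)

counts = images_per_split(io.StringIO(sample_csv))
print(counts)  # Counter({'train': 2, 'dev': 1, 'test': 1})
```

To run it on a real export, pass an open file instead, for example `with open("dataset.csv", newline="") as f: print(images_per_split(f))`, where `dataset.csv` is a placeholder file name.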

Changes to the Model Training Process

When you click Train, the first step in the model training process is now to gather and save the images and data currently in your Project. This information is part of the “dataset snapshot” that you can later revert to.

This step displays as Taking a snapshot of your data in the Model panel.

Taking a Snapshot of Your Data

For more information about the model training process, go to Train Models.

Changes to the Model Information Pop-Up Window

We’ve redesigned the Model pop-up window to make it easier to navigate. The pop-up is now organized into multiple tabs.

The Model Pop-Up Is Now Organized by Tabs

Mobile Inference Support in Segmentation and Visual Prompting

You can now use Mobile Inference on Segmentation and Visual Prompting Projects. Previously, it was only available for Object Detection and Classification Projects.

Streamlined Deployment Workflow from Build Page

We’ve streamlined the workflow for deploying a model from the Build page. When you click Deploy, you can now simply enter the model name and select your endpoint.

Deploy the Model

Name the Model and Select an Endpoint

Downloadable Pascal VOC Files Now Available for Classification Projects

Before, downloadable Pascal VOC files were only available for Object Detection and Segmentation projects. Now, we've made this feature available for Classification projects.

Bug Fixes

- Selected images moved to a different split will no longer remain selected.

- You no longer need to refresh the browser to save a model.

- Users on the Classic Flow version of LandingLens can now resume deploying from the Models page.

- Fixed multiple issues related to deleting classes.

- Fixed multiple issues related to creating an endpoint with a name that already exists in that project.

- Renamed the "Defect" filter to "Class".

- Fixed the login process for SSO.

July 5, 2023

Smart Labeling

We are thrilled to announce Smart Labeling, a new tool that helps you label objects in Segmentation Projects faster and with more accuracy.

When Smart Labeling is enabled, simply hover over the object you want to detect, click, and press enter. Smart Labeling automatically detects the shapes you want to select and applies your selected Class!

To learn more, check out Label with Smart Labeling.

Smart Labeling

New API Key System

Up until this release, LandingLens required both an API Key and API Secret to be used to run inference. After evaluating our user experience and current industry standards, we decided to eliminate the use of the API Secret. As of this release, LandingLens generates and requires only an API Key!

All existing API Key and API Secret pairs will continue to be valid, and all applications using those credentials will continue to work.

You can create multiple API Keys. This gives you more flexibility and control over who can run inference with your deployed Models. Additionally, you can revoke and delete API Keys.

For more details, check out API Keys.

API Keys

New Way to View the API Command for Deployment

There's now another way to get the API command on the Deploy page: click the Actions icon and select View API Command.

New Way to View the API Command for Deployment

Bug Fixes

- If you delete a Class, you can now create a new Class with the same name as the deleted Class.

- When using the QR code for Mobile Inference, some users got this error: “Missing user record with apikey or user id: -1”. The issue that caused this error has been fixed.

- If a Class was deleted in a Classification Project, the model metrics were also deleted for that Project. The issue that caused this error has been fixed.

June 22, 2023

Improvements

We've added these improvements to help you manage your credits and pricing plan:

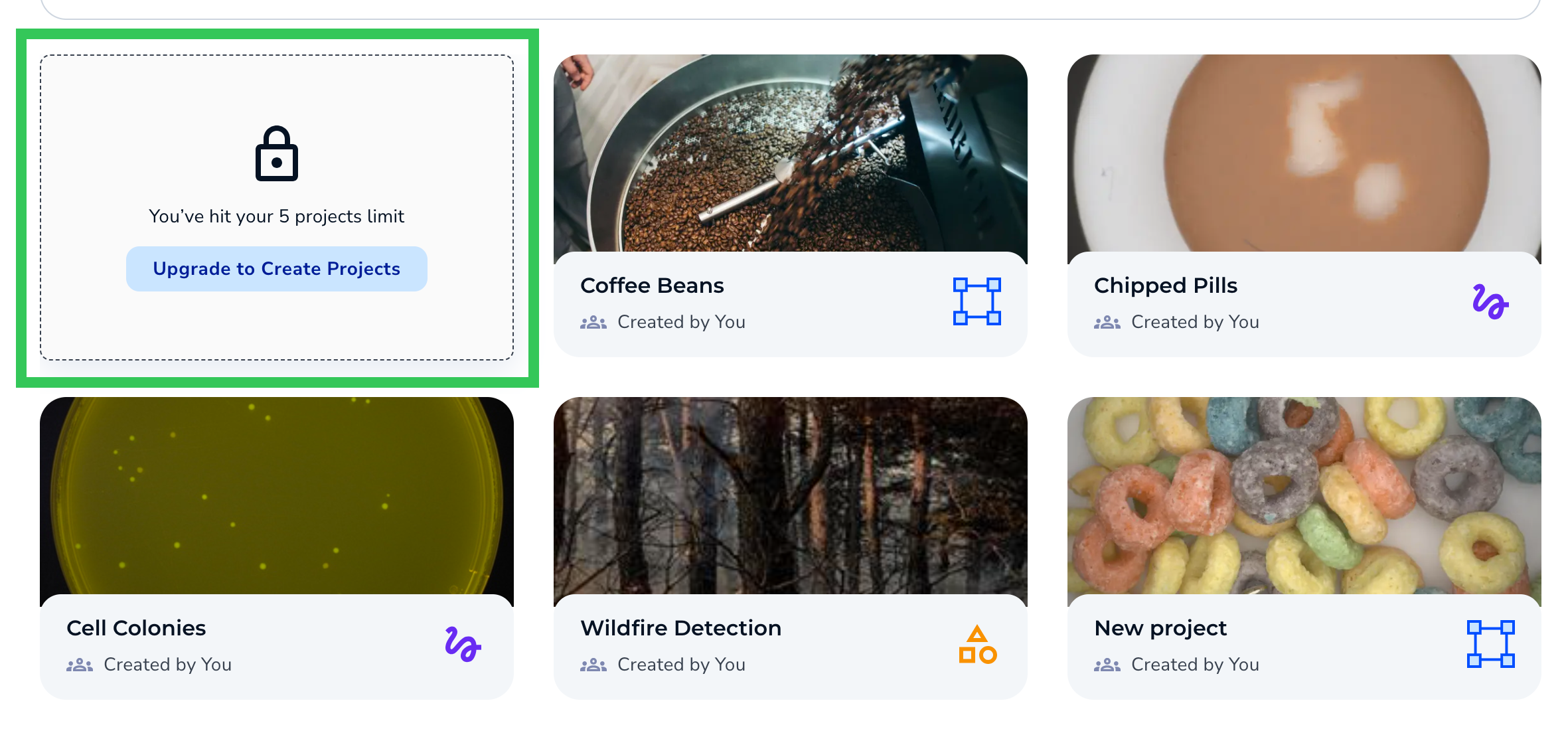

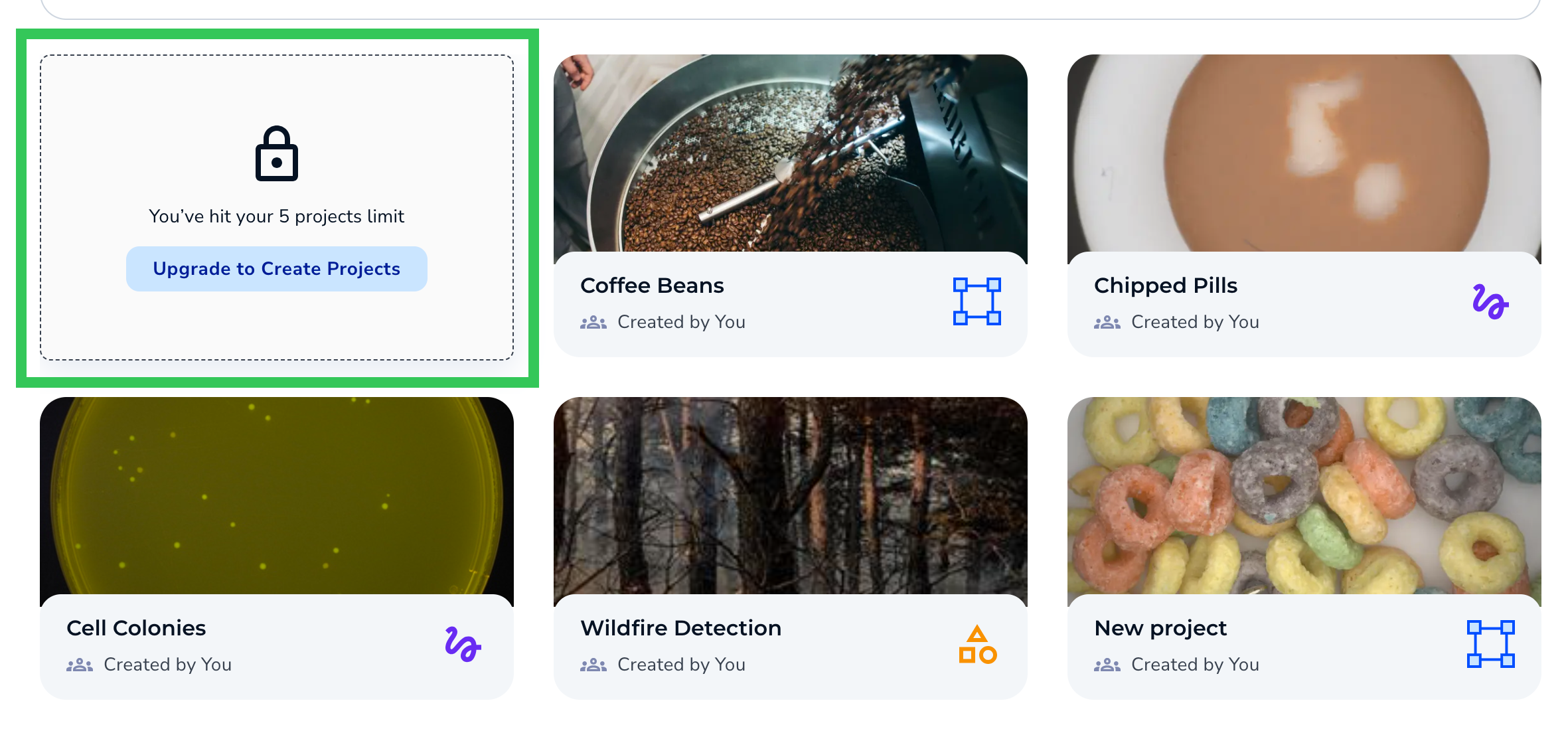

- If you reach your Project maximum, LandingLens now alerts you with a new tile. The Project maximum applies to the current number of Projects, so you can delete an existing Project to create another one. Or, if you want an unlimited number of Projects, upgrade to a Visionary or Enterprise plan.

Notification When You Reach the Project Maximum

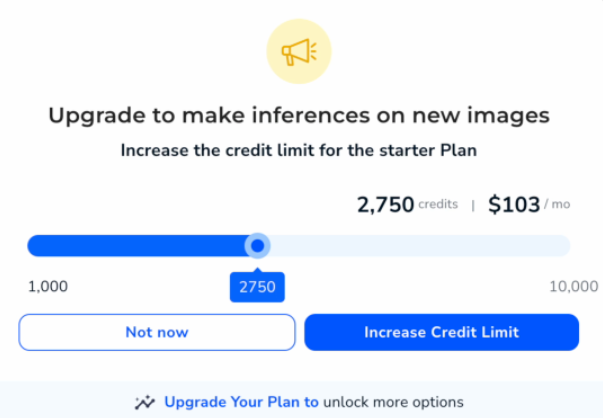

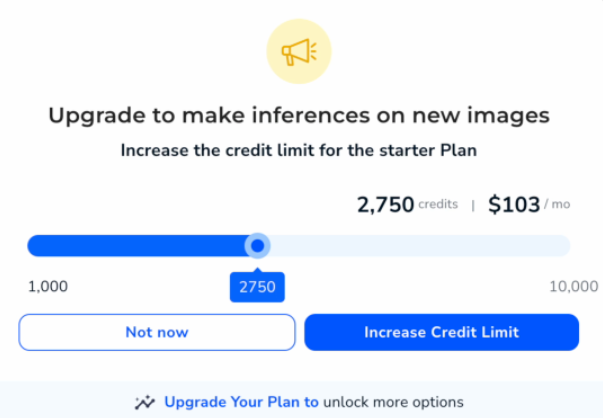

- If training a Model or running inference would put you above your credit maximum, LandingLens now alerts you with a pop-up that guides you through the next steps.

Notification When You Reach the Credit Maximum

Bug Fixes

If you have a long Model name, it’s now shortened when it displays in the user interface. Hover over the name to see the full name. Previously, long Model names would attempt to wrap to a second line, but the top of the text would get cut off.

Hover to See the Full Model Name

June 14, 2023

We're thrilled to unveil the latest release of LandingLens! This version empowers you with more ways to run inference on the computer vision Models you create in LandingLens. You can now use your smartphone to capture images and immediately see your Model’s predictions. Additionally, all users can now download a 14-day free trial of LandingEdge!

We’ve also streamlined the process to develop Models by expanding our Workflow Assistant to new Project Types and making it easier to end the Model training process.

Learn more about these updates below!

Use Your Model on a Smartphone with Mobile Inference

LandingAI is excited to share that we’ve developed a new feature: Mobile Inference! This feature empowers you with an additional way to use your LandingLens Model in the real world. For detailed information, go to Mobile Inference.

After you develop your Model and deploy it to an endpoint, you can then generate a QR code by clicking the QR code icon.

Generate a QR Code

Scanning the QR code with your phone opens the LandingLens app in your browser.

Scan the QR Code with Your Smartphone

From there, you can take a photo or select an image from your phone. The app sends the photo to your deployed Model, and your Model runs inference on it. The Model’s predictions then display immediately on your phone!

Take or Select a Photo

See Predictions and Object Count on Your Smartphone

The images and predictions are also saved back to LandingLens, so that you can review all results in a centralized location. You can also share the QR code with your colleagues, so that you can all collaborate on running inference.

See Results in LandingLens

Clearly Defined Deployment Options

We’ve added more visual guides to the Deploy page to help you choose the right deployment method for your use case.

For example, when you first click Deploy, you’ll see two options for how to use your model.

- Cloud Deployment: Click to create an endpoint, which is a virtual location that hosts your Model. You can then send images to the Model via API requests, or use the new Mobile Inference feature (described above) to run your Model on your smartphone.

- Edge Deployment: Click to deploy your Model via LandingEdge, the LandingAI application that connects to your edge devices.

Create an Endpoint for Cloud Deployment

Download a Free Trial of LandingEdge

You can now download a free trial of LandingEdge directly from LandingLens!

If you haven't deployed a Model yet, open a Project, go to the Deploy page, and click Download LandingEdge. Then follow the on-screen prompts to complete the download. For detailed information, go to LandingEdge Overview.

Download LandingEdge

If you’ve already deployed a Model via Cloud Deployment, click LandingEdge.

Download LandingEdge

Workflow Assistant

We added the Workflow Assistant to Segmentation and Classification Projects. The Workflow Assistant includes a progress bar at the top of your Project that guides you through all the steps of developing a computer vision Model, from uploading images to your dataset to using your Model to make predictions.

Workflow Assistant in a Classification Model

When you hover over the Workflow Assistant, it nudges you in the right direction by “shaking” the buttons you need to click to move to the next step. For example, after you’ve labeled at least 10 images, the Train button shakes, letting you know that you have enough data to create your first Model.

Hover Over the Workflow Assistant to "Shake" What You Need to Click Next

End Training Early

We’ve provided you with more on-screen guidance about what happens if you end the Model training process before it’s completed.

Model training can only be ended at Step 3: Training & Learning, which is when the model training process actually begins. The Stop Training button is now End Training Now.

End Training Now

If you end Model training early, LandingLens completes its current round (epoch) of training and evaluates the images in your dataset with the Model it had generated up to that point. The Model may not be as accurate as it would be if training had completed. For detailed information, go to Train Models.
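The early-stop behavior described above (finish the current epoch, then evaluate) follows a common training-loop pattern. The following Python sketch shows that pattern with a hypothetical `train` function and a `threading.Event` standing in for the End Training Now button; it illustrates the described behavior and is not LandingLens's actual training code.

```python
import threading
from typing import Any, Callable

def train(num_epochs: int,
          stop_requested: threading.Event,
          run_epoch: Callable[[int], None],
          evaluate: Callable[[], Any]) -> tuple[int, Any]:
    """Hypothetical loop: a stop request is honored only between epochs,
    and the partially trained model is still evaluated afterwards."""
    completed = 0
    for epoch in range(num_epochs):
        run_epoch(epoch)             # the epoch in progress always runs to completion
        completed += 1
        if stop_requested.is_set():  # checked only at the epoch boundary
            break
    return completed, evaluate()     # evaluate with whatever was learned so far

# Simulate clicking "End Training Now" while epoch 2 is running.
stop = threading.Event()
log = []

def run_epoch(i: int) -> None:
    log.append(i)
    if i == 2:
        stop.set()

done, result = train(10, stop, run_epoch, lambda: "evaluated")
print(done, result)  # 3 evaluated
```

Checking the stop flag only between epochs is what guarantees the model is never evaluated in a half-updated state.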

Large Image Support

When using Custom Training in LandingLens Enterprise, you can now resize images in Object Detection and Segmentation Projects to be up to 36MP. For detailed information, go to Custom Training.
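If you want to estimate how much an image must shrink to fit the 36MP limit, the arithmetic is a single square-root scale factor that preserves the aspect ratio. This Python sketch assumes 1 MP = 1,000,000 pixels and that the limit applies to width * height; `fit_within` is a hypothetical helper, and how LandingLens itself rounds or resizes is not documented in this note.

```python
import math

MAX_PIXELS = 36_000_000  # assumption: 36MP interpreted as 36,000,000 pixels

def fit_within(width: int, height: int, max_pixels: int = MAX_PIXELS) -> tuple[int, int]:
    """Scale (width, height) down, keeping the aspect ratio, so that
    width * height <= max_pixels. Images already within the limit are unchanged."""
    if width * height <= max_pixels:
        return width, height
    # Scaling both sides by s scales the area by s**2, so s = sqrt(max / area).
    scale = math.sqrt(max_pixels / (width * height))
    # Round down so the result never exceeds the limit.
    return math.floor(width * scale), math.floor(height * scale)

print(fit_within(8000, 6000))  # 48MP in, (6928, 5196) out
```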

Bug Fixes

We fixed a bug in Agreement-Based Labeling that allowed you to filter by users who weren’t assigned any tasks. Now, you can only filter by users who have been assigned tasks.

June 5, 2023

What’s New

To make it easier to understand some of the features, we updated some of the user interface text throughout LandingLens.

Bug Fixes

- We fixed an issue that caused Labeling Tasks to show that a negative number of images needed review. Now, the number of images under review is calculated correctly.

- We fixed a bug that caused the Class colors in Predictions to be different from the actual colors assigned to those Classes.

May 24, 2023

What's New

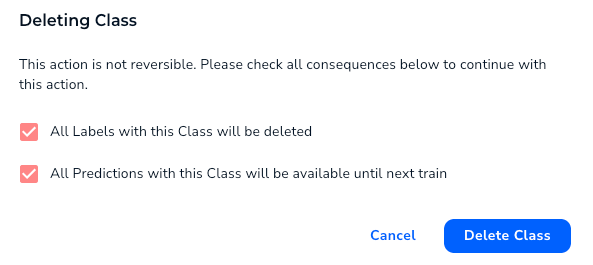

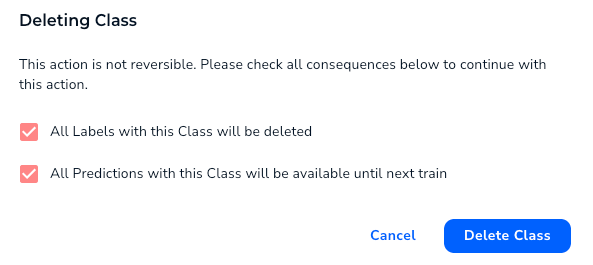

- When you delete a Class, LandingLens now shows you a pop-up that lists the impacts of deleting a Class. This empowers you to make an informed decision and helps prevent accidentally deleting Classes. For more information, go to Delete Classes.

Delete Class

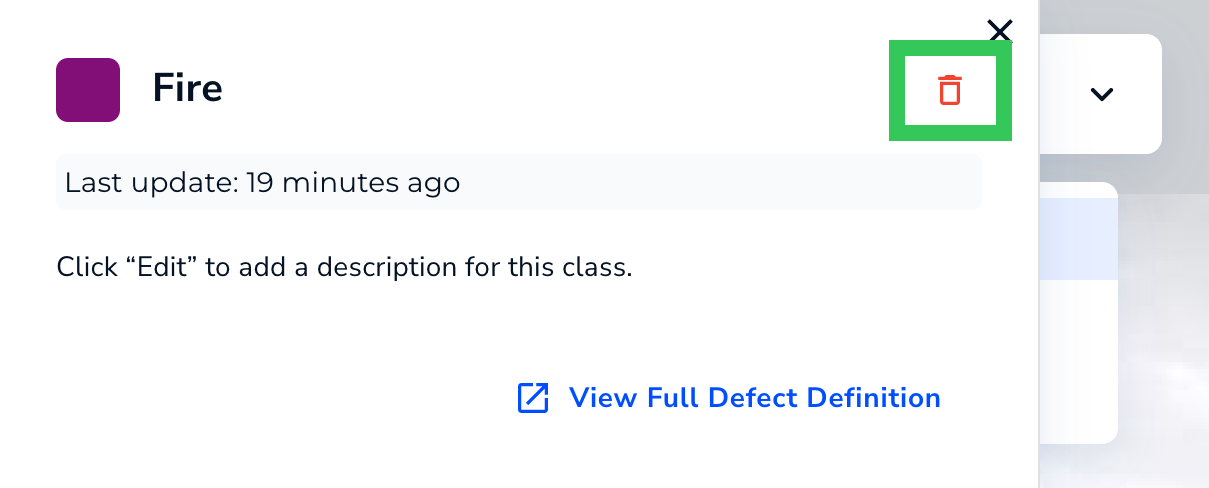

- You can now delete a Class when labeling images. For more information, go to Delete Classes.

Trash Icon

- We improved the experience of labeling images in Classification Projects. Previously, if you selected a label for an image on the Build tab, LandingLens had to refresh the page to save the change. Now when you select a label, LandingLens saves the change without refreshing the page.

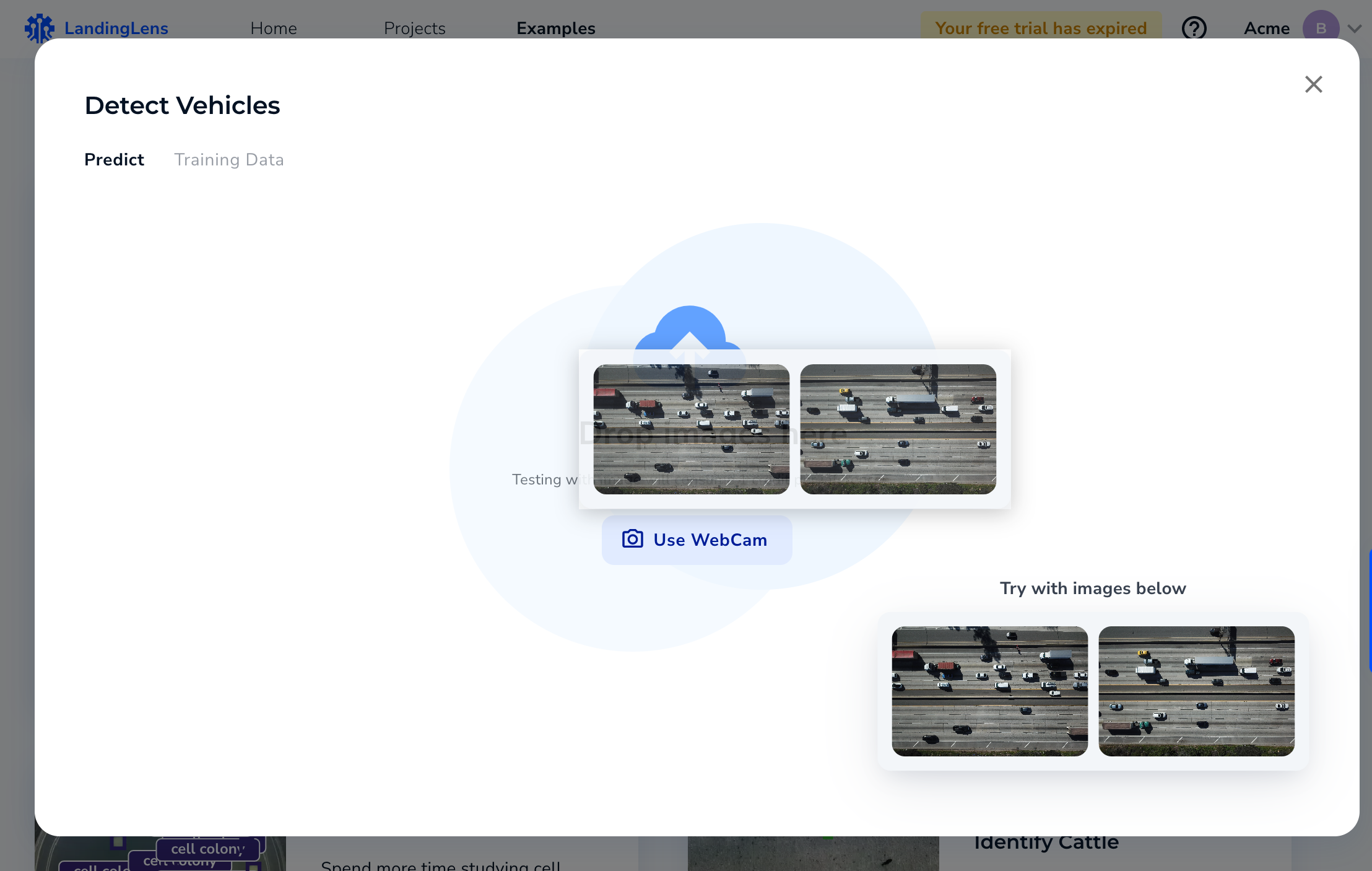

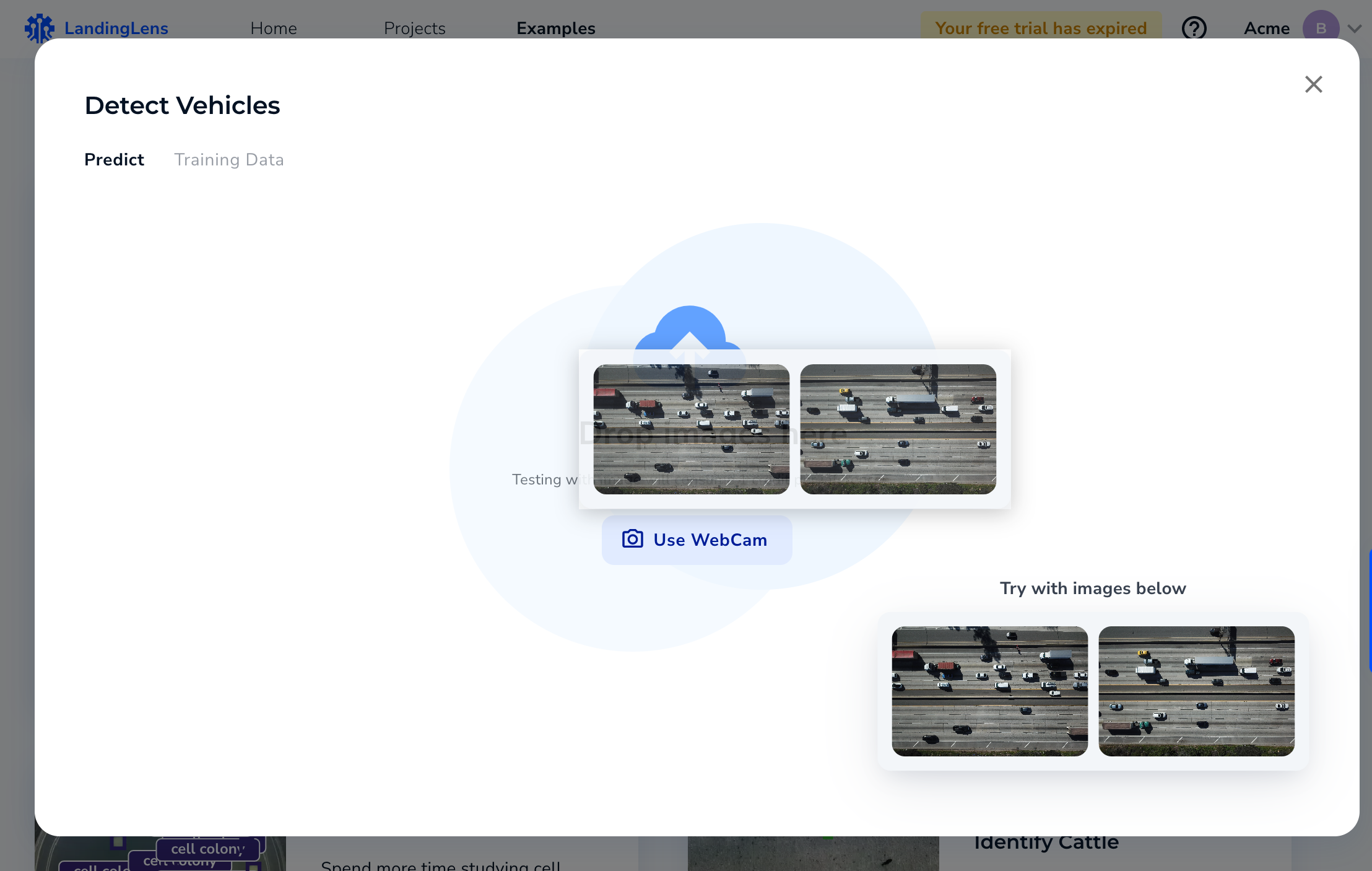

- We made it easier to upload sample images in our Example projects. Previously, you had to click the sample images to upload them. Now you can also drag and drop them.

Drag and Drop Images to Upload Them

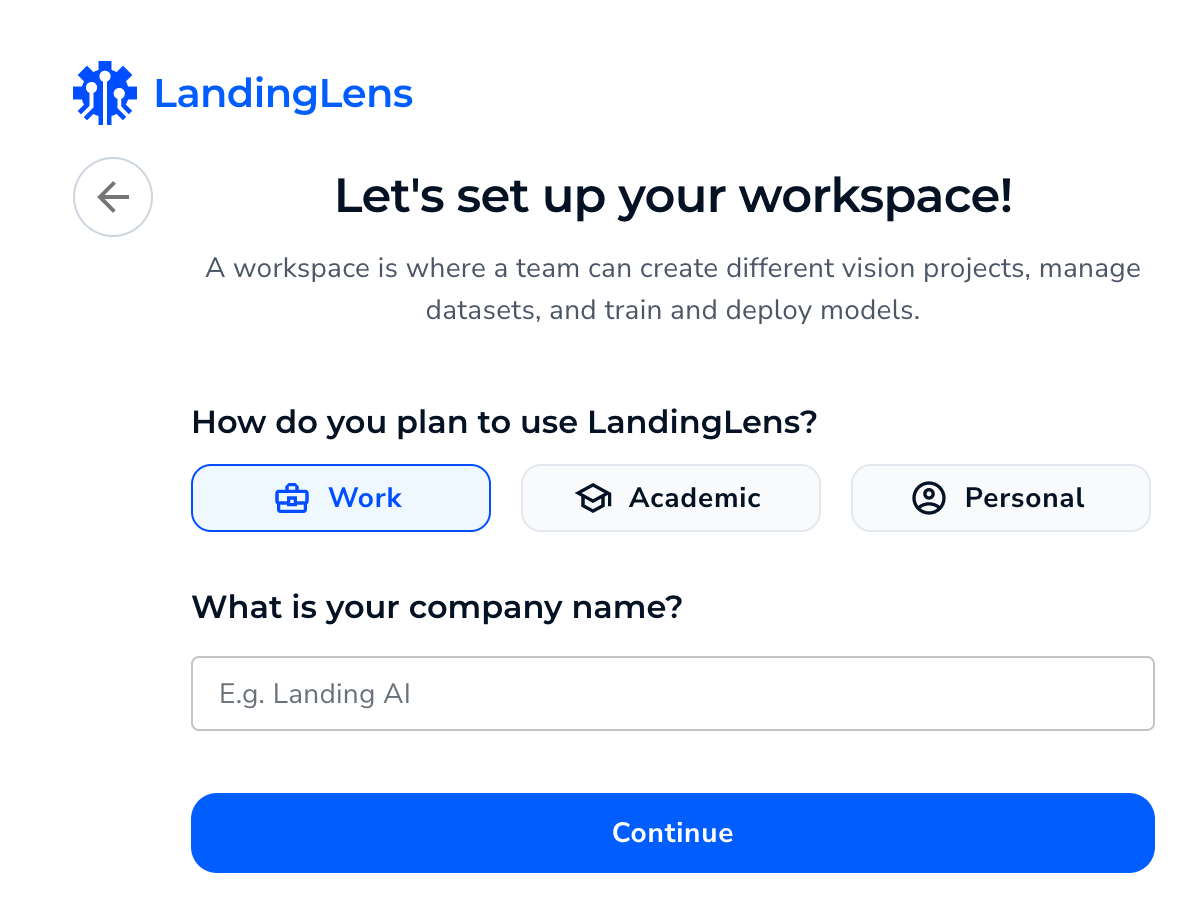

- We updated the process for creating a new organization in LandingLens. You’ll now be prompted to name your organization and select your use case: Work, Academic, or Personal.

Select How You Plan to Use LandingLens

Bug Fixes

- LandingLens now prevents you from creating two Classes with the same name. Previously, it was possible to reuse the same name but with different capitalization (for example, “Scratch” and “scratch”).

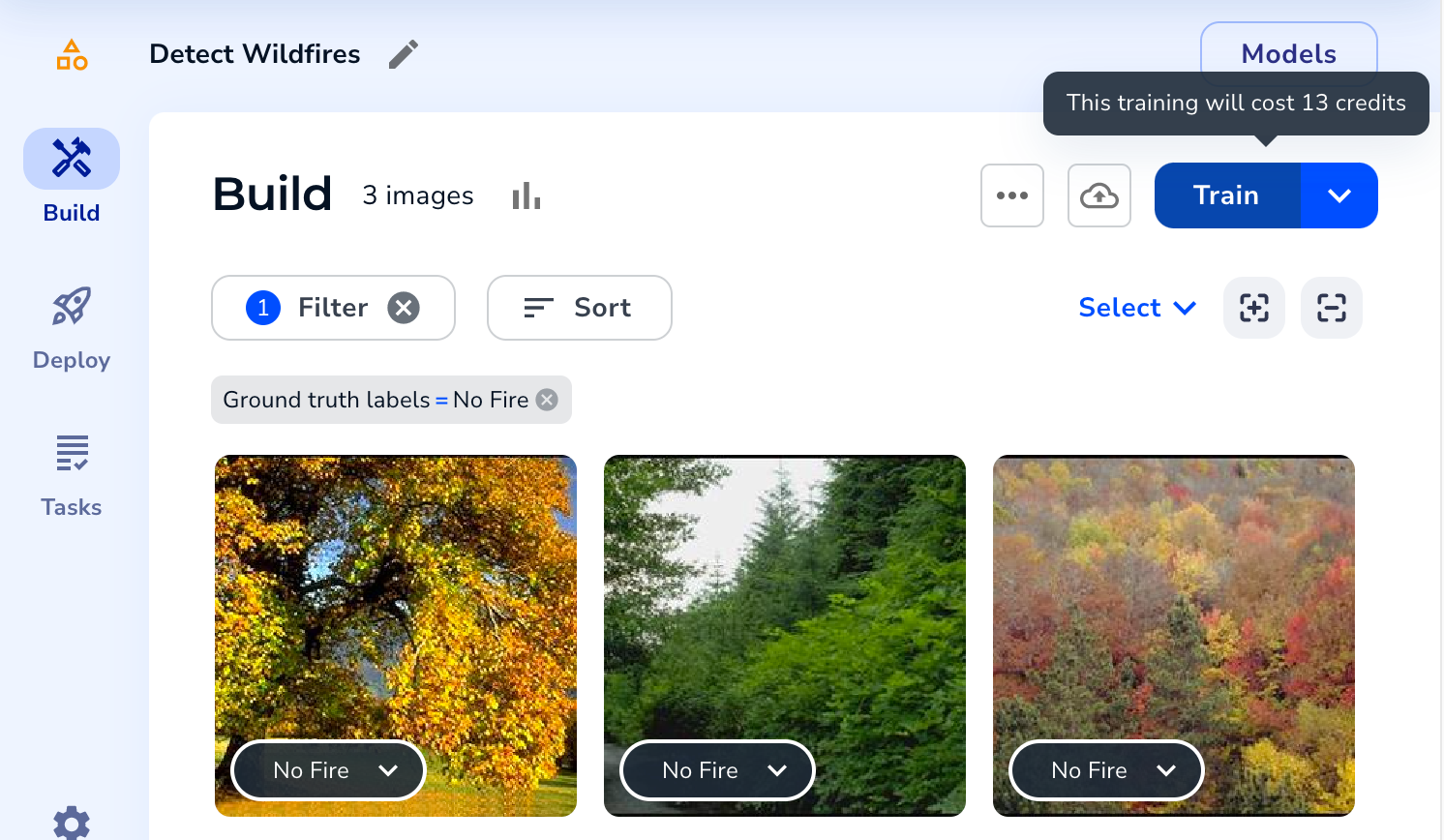

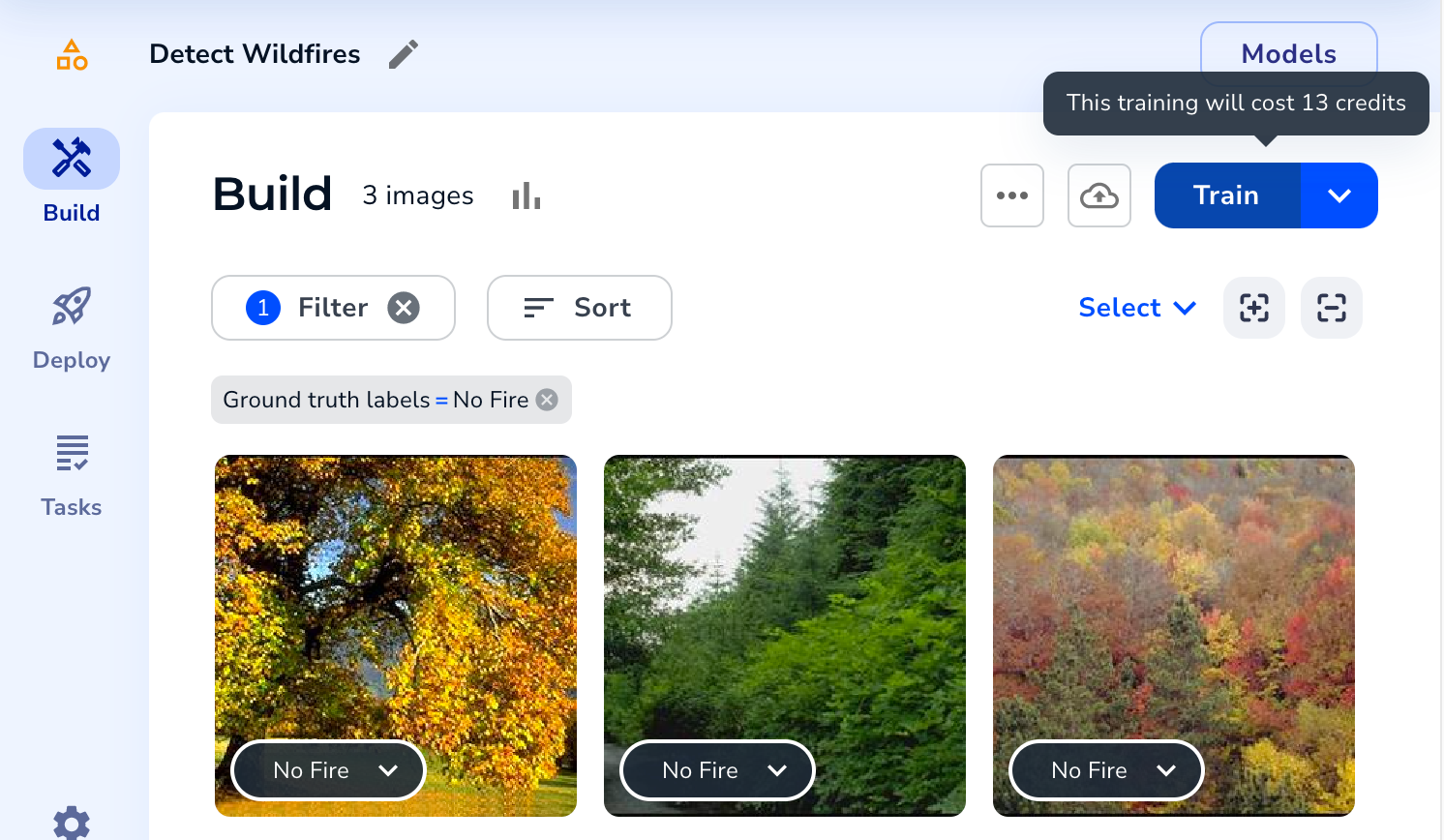

- When you hover over the Train button, a tooltip shows you how many credits the training process will cost. It costs 1 credit to train each image. LandingLens trains a model based on all images in your Project, not just the images that are filtered to display. Previously, the tooltip calculated the cost based on only the filtered images. Now, the tooltip correctly calculates the cost to include all images.

For example, the Project in the image below has 13 images. A filter is applied, which causes only 3 images to display. However, the tooltip correctly states that training the Model costs 13 credits.

The Training Tooltip Calculates the Cost
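The corrected tooltip logic boils down to counting every image in the Project, regardless of the active filter. A minimal Python sketch, assuming the 1-credit-per-image rate stated in this release note; `training_cost` is a hypothetical helper.

```python
def training_cost(all_images: list[str], credits_per_image: int = 1) -> int:
    """Credits needed to train: one per image in the whole Project.
    Any display filter is deliberately ignored."""
    return len(all_images) * credits_per_image

project_images = [f"img_{i:02d}.jpg" for i in range(13)]  # 13 images in the Project
visible = project_images[:3]                              # a filter shows only 3 of them
print(training_cost(project_images))  # 13, not 3
```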

May 16, 2023

The ability to delete Classes has been re-enabled. It was temporarily disabled due to some reported issues. The LandingAI team has resolved these issues.

When you delete a Class, all labels on images for that Class are also removed. If you delete a Class after training your Model, train it again. For more information about how to delete Classes, go to Delete Classes.

Delete a Class

May 15, 2023

The Check Label Quality feature has been re-enabled. It was temporarily disabled due to some reported issues. The LandingAI team has resolved these issues.

May 4, 2023

What's New

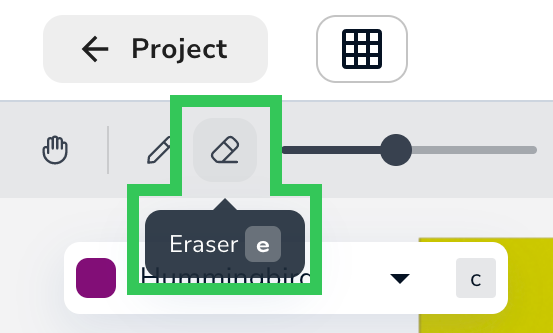

- We added a keyboard shortcut for the Eraser tool in Visual Prompting. You can now press E to select the Eraser tool.

Press "E" to Select the Eraser

- Members that are Collaborators can now access other Organizations that they are members of in LandingLens. Users can only access Organizations that they are Admins or Collaborators of. For more information about accessing Organizations in LandingLens, go to Switch Organizations.

Bug Fixes

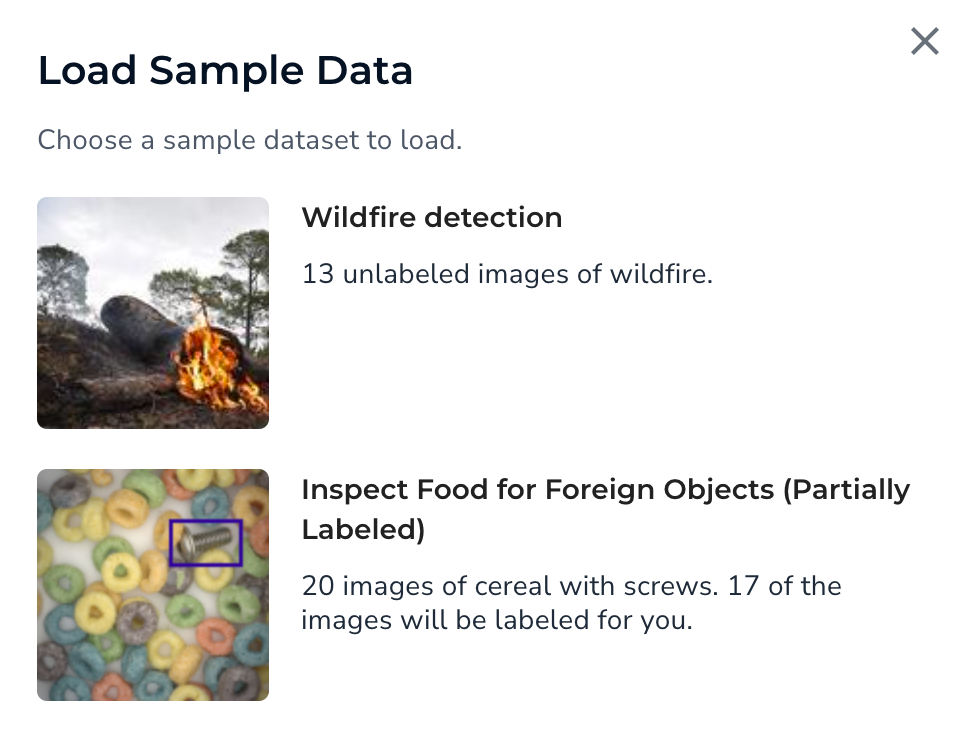

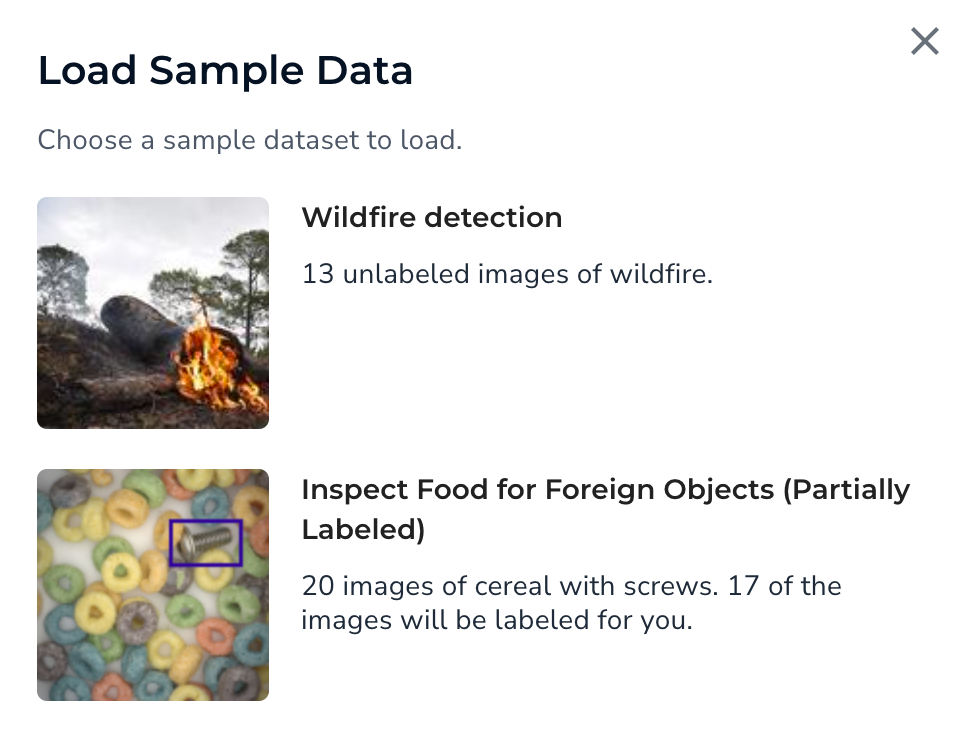

- The Load Sample Data pop-up window was briefly too narrow, which caused the text to overlap. This pop-up window has now been resized to fit all the text correctly.

Load Sample Data Pop-Up Window

- If you add labels to an image in Visual Prompting, your changes are now saved if you select another image before clicking Run.

- The keyboard shortcuts for changing the Brush size and Eraser size in Visual Prompting now work correctly.

Other Updates

The Check Label Quality feature is temporarily disabled due to some reported issues with this tool. We will notify you in the Release Notes once these issues have been fixed and the feature is re-enabled.

April 25, 2023

LandingAI is thrilled to announce the Beta release of an innovative, new capability in LandingLens: Visual Prompting.

Visual Prompting is a paradigm shift in the field of computer vision. You label only a few small areas of an object in a few images, and the Model almost immediately detects the whole object in all of your images.

Visual Prompting Workflow

Visual Prompting has a highly iterative, yet fast, workflow. After you upload images to your Project, start labeling one. Use the Brush to “paint” a small area of the first object you want to detect. When prompted, enter a name for the object. This is your first Class.

Label Your First Object

Then, use the Brush to paint a small area of another object you want to detect. This is your second Class. If you only want to detect one object type, that’s okay; simply name your second Class “Background” or something similar. You can have multiple Classes, but create at least two to get started.

Label Your Second Object

Then click Run! The Model shows you its Predictions in just a moment, in both the image you labeled and the other images in your dataset. In most cases, the Predictions won’t be perfect at first. Not only is that okay, that’s expected. You can now fine-tune your labels.

The Predictions Display in Seconds

Get Started with Visual Prompting

You can create a Visual Prompting Model in seconds and deploy in minutes. You don’t even need a background in computer vision, machine learning, or any type of AI!

To learn more details, check out our full support article on Visual Prompting. Start using Visual Prompting today by signing up for a LandingLens free trial!

April 21, 2023

Today’s release of LandingLens includes a few behind-the-scenes bug fixes.

We also included a workflow improvement that will keep your Projects running smoothly. In the past, it was possible to create a Project, create a Class, and then change the Project Type (for example, from Object Detection to Segmentation). That would confuse the backend, because the label infrastructure for each Project Type is very different.

The newest version of LandingLens prevents you from changing the Project Type after creating Classes. If you want to use a different Project Type, simply delete the Project and create a new one.

Before: You Could Change the Project Type After Creating Classes

Now: You Can’t Change the Project Type After Creating Classes

April 5, 2023

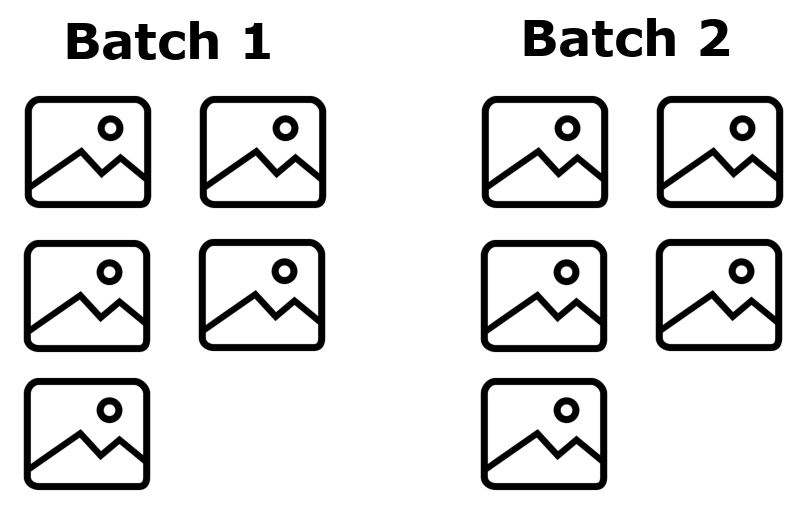

Labeling Tasks in Segmentation Projects are now assigned to users in batches of five images. For example, if you create a Labeling Task with 10 images and assign it to a user, that user will get two batches of five images each.

Labeling Tasks Are Grouped into Batches of Five Images

When the user opens a Labeling Task, they will see five images. When they submit that batch, the second batch of five images becomes available.

When You Finish a Batch, LandingLens Gives You the Next One

Limiting each batch to five images allows LandingLens to process each batch quickly.
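The batching rule is equivalent to chunking the task's image list into consecutive groups of five. A minimal Python sketch; `batches` is a hypothetical helper, and this note doesn't say how LandingLens handles a final partial batch (for example, with 12 images), so the sketch simply leaves the last batch short.

```python
def batches(images: list[str], size: int = 5) -> list[list[str]]:
    """Split a Labeling Task's images into consecutive batches of `size`.
    In this sketch, the last batch is shorter if the count isn't a multiple of size."""
    return [images[i:i + size] for i in range(0, len(images), size)]

task = [f"img_{i}.jpg" for i in range(1, 11)]  # a task with 10 images
for n, batch in enumerate(batches(task), start=1):
    print(n, batch)  # batch 1 holds img_1..img_5, batch 2 holds img_6..img_10
```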

March 30, 2023

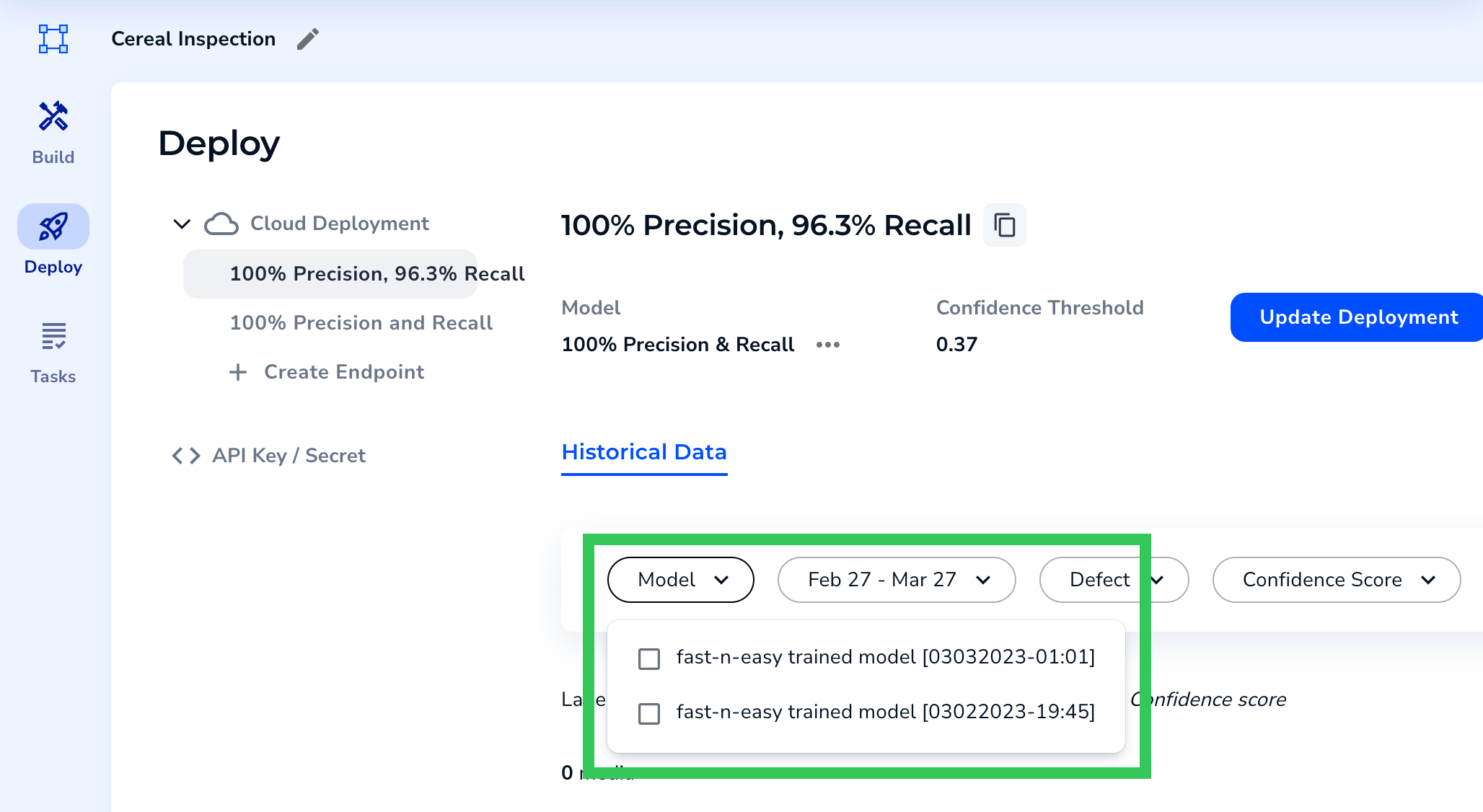

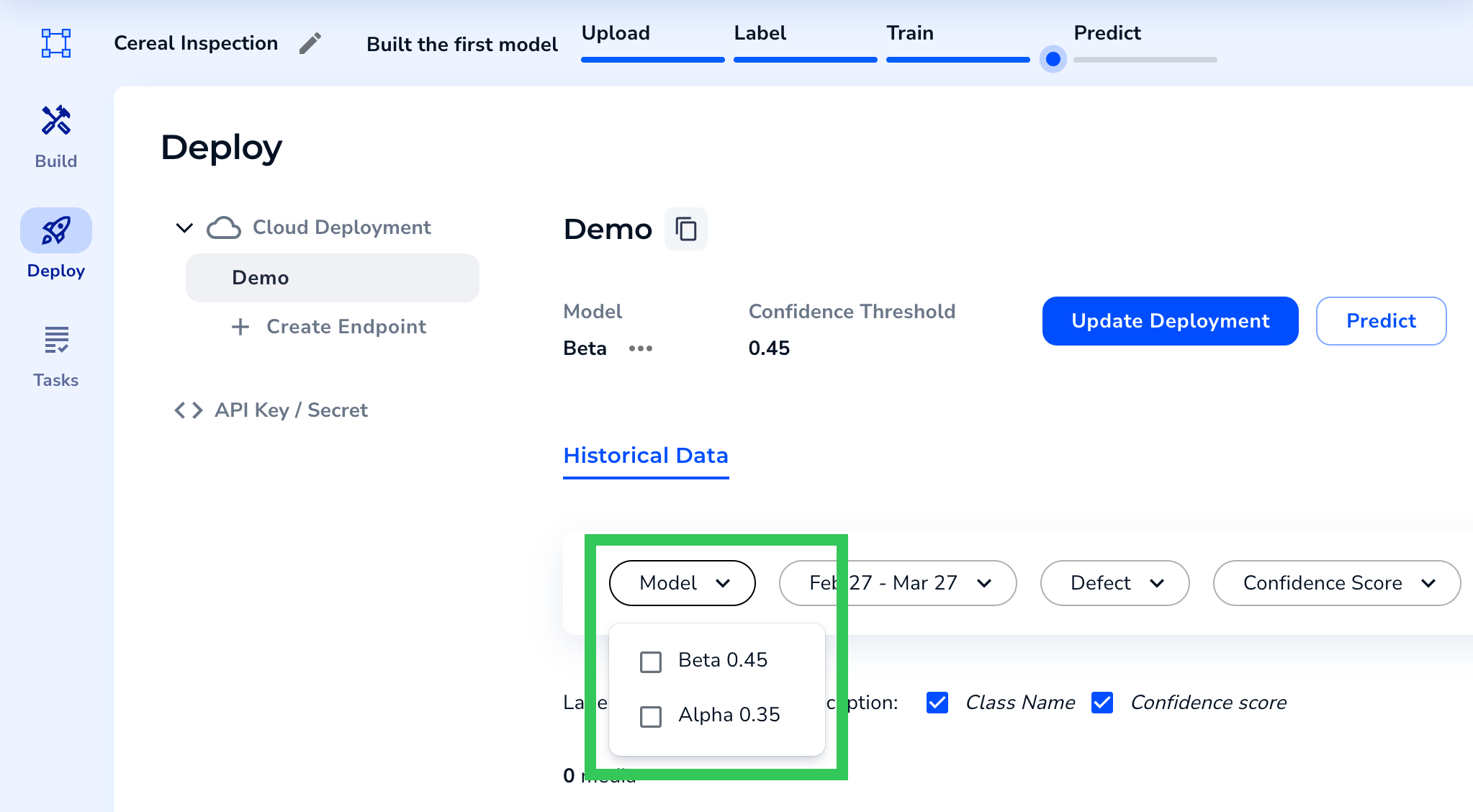

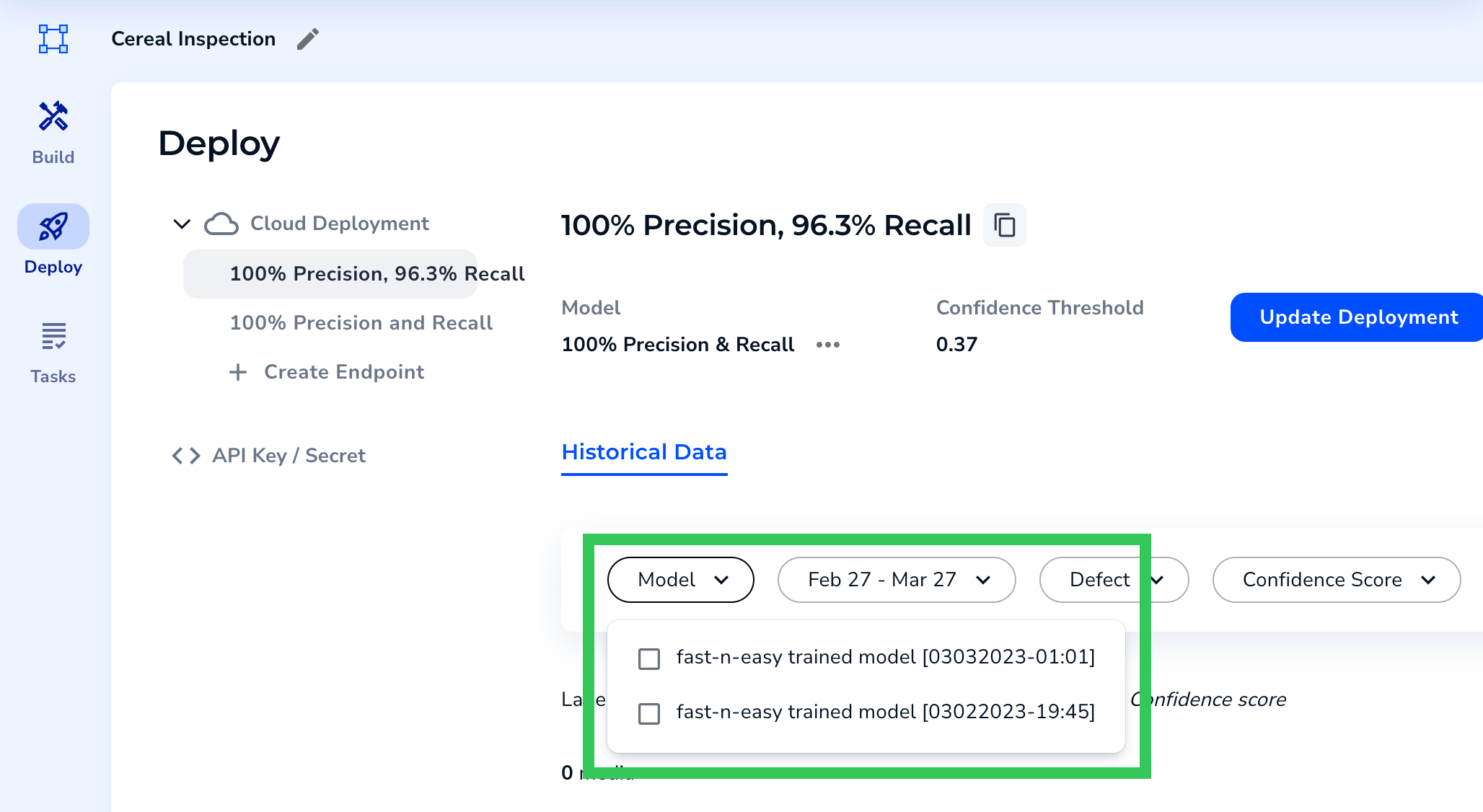

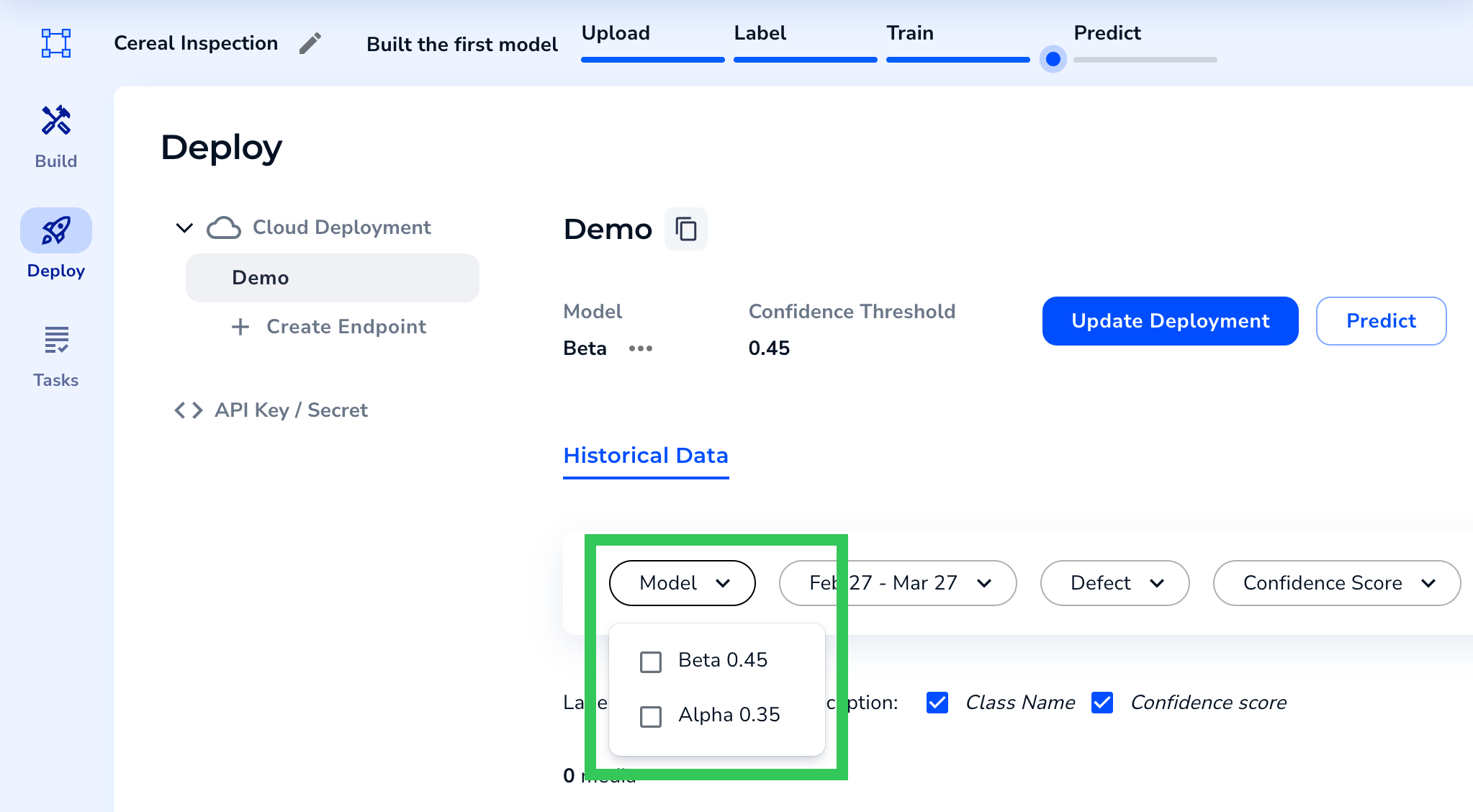

- Previously, the “internal” names for Models displayed in certain areas of the user interface. This included the Historical Data section in LandingLens, and the Model drop-down menu in LandingEdge. Now, the Model name and your selected Confidence Threshold display, so that you can easily choose which Model you want to use. The following images show how the Model names look before and after this update.

Before

After

- We’ve made it easier to upload images to new Projects! Now you can click anywhere below the Project Types to open the Upload pop-up window. Previously, only clicking the Drop to Upload icon would open this pop-up.

Click Below the Project Options to Upload Images

- We’ve changed how Model training runs in the LandingLens backend. This change isn’t visible in the user interface, and doesn’t impact the speed or accuracy of your Model.

March 22, 2023

We updated a few things in the background to optimize the LandingLens experience. We are continuing to work on adding new features, and we can't wait to share them with you soon!

Additionally, we updated a few articles:

- Cloud Deployment

- LandingEdge Specifications Sheet

- How many images do I need to upload for model training?

March 16, 2023

What's New

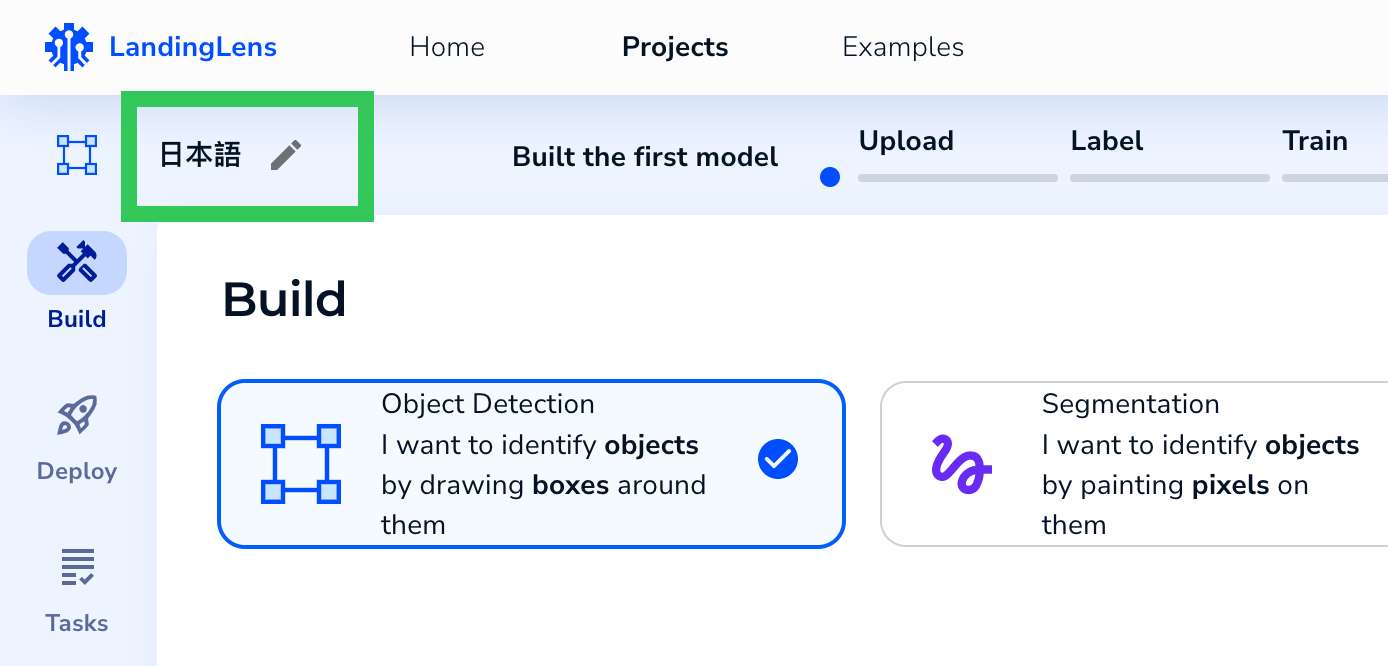

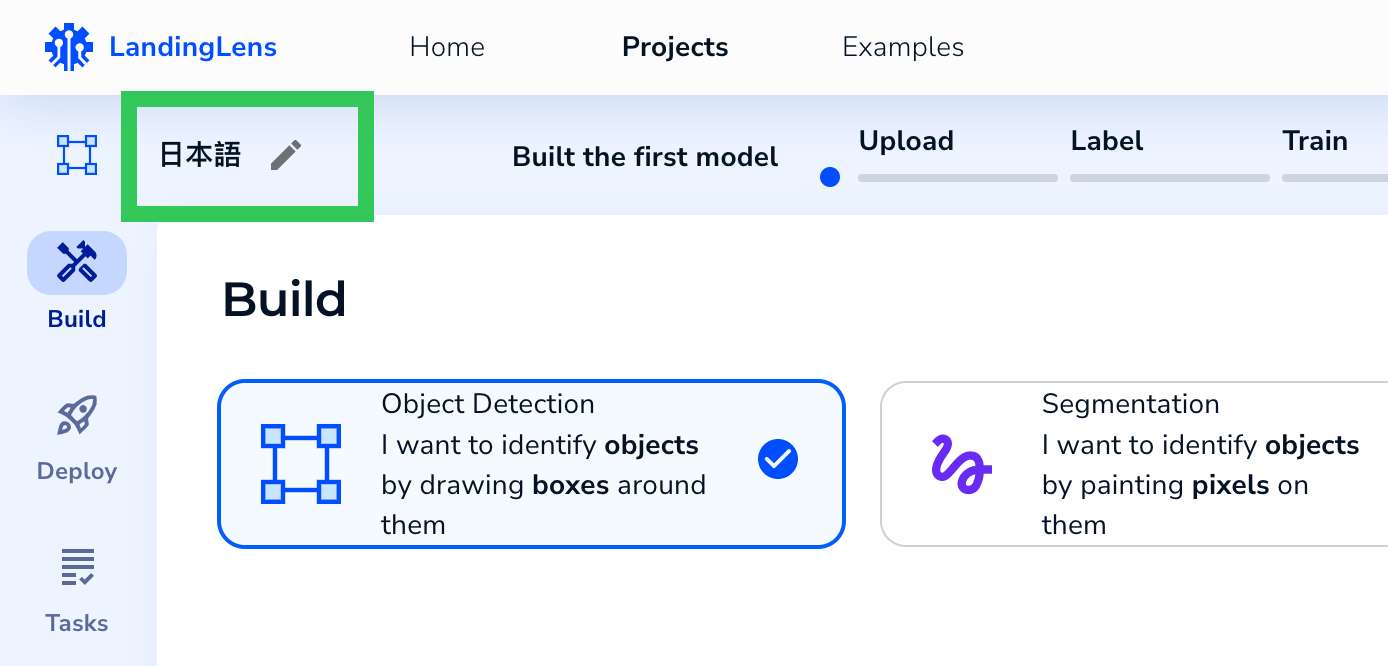

- You can now include Chinese, Japanese, and Korean characters in your Project name.

Project Name in Japanese

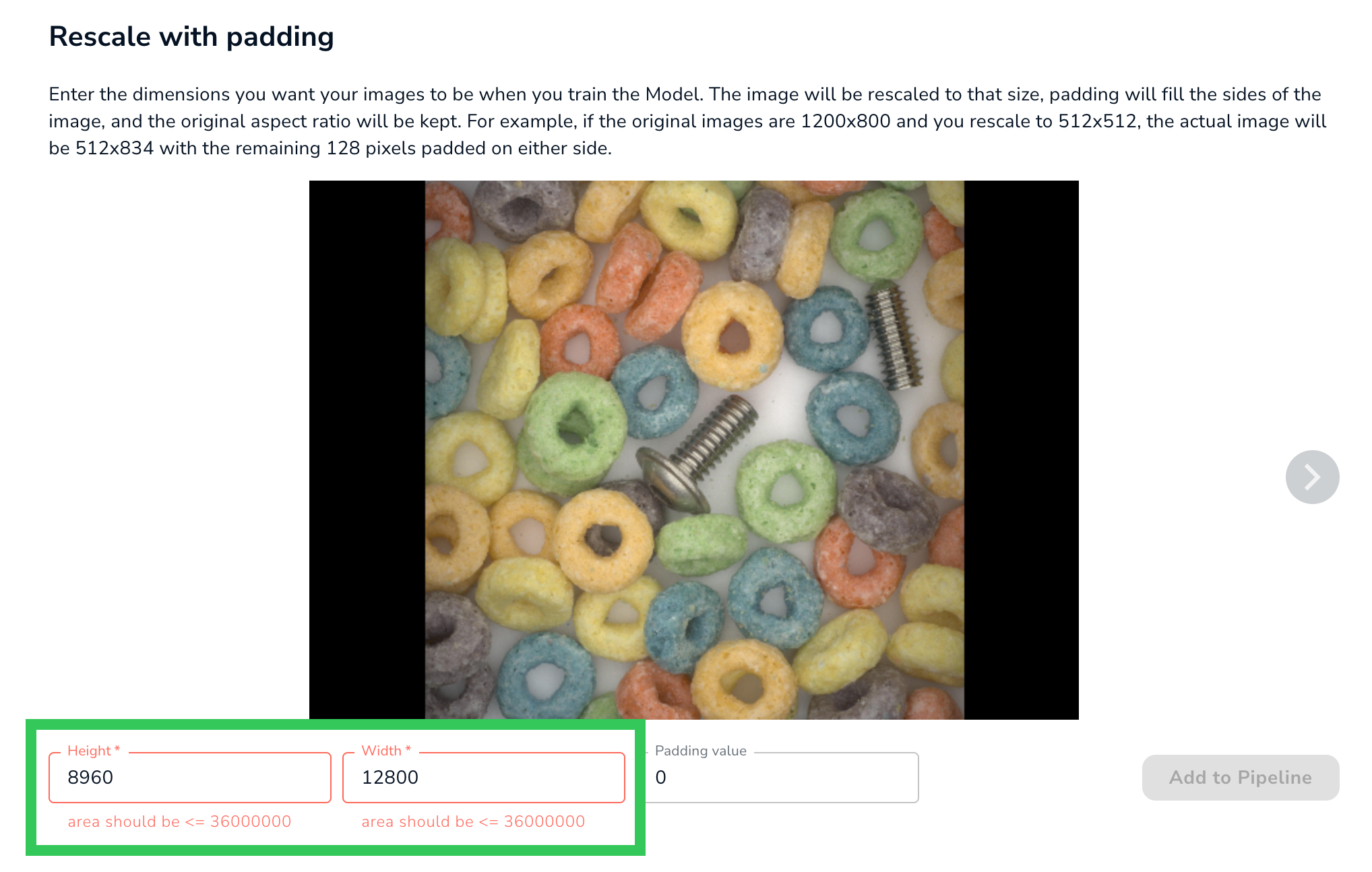

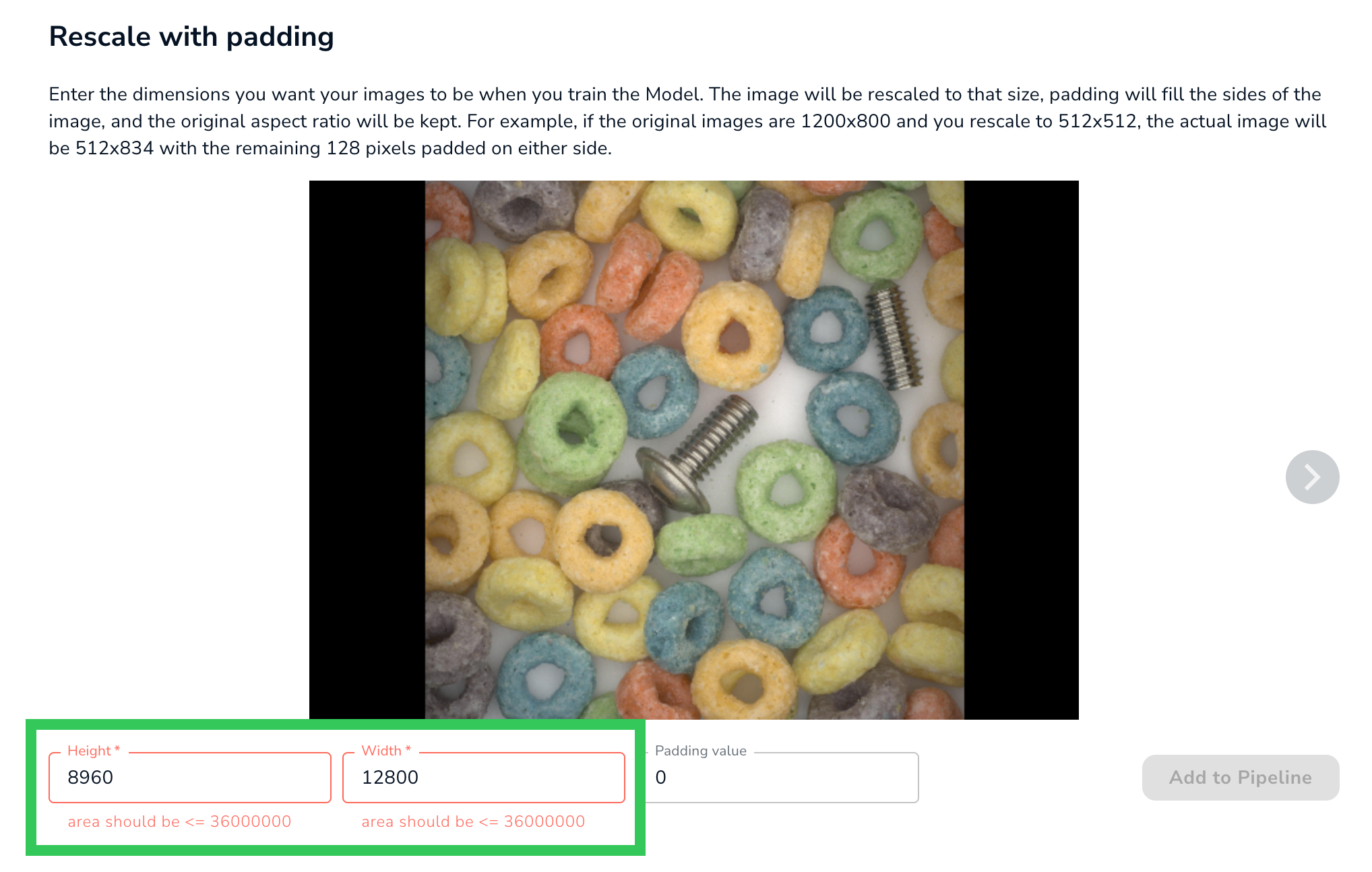

- If you rescale images for custom Model training, LandingLens now displays guidance to help ensure that your image fits within the minimum and maximum area constraints. For more information on custom training, go to Custom Model Settings.

LandingLens Shows the Maximum Area for a Rescaled Image

Bug Fixes

- Previously, if you selected a Model on the Deploy page and then opened the Build page, that same Model would be selected. Now, selecting a Model on the Deploy page doesn’t change the Model shown elsewhere.

- Previously, if you added Data Augmentation to custom Model training and then deleted those settings, the Data Augmentation would still be applied. This issue has been fixed. If Data Augmentation is deleted, it won’t impact training.

- We fixed a bug that occurred when trying to upload images from a WebCam to some Classification Projects.

March 10, 2023

We discovered that naming a Class “Ok” could cause issues when training a Model in Object Detection and Segmentation Projects. To ensure that your Model is trained correctly, LandingLens no longer accepts “Ok” (or any variation of its capitalization) as a Class name for these Project Types. You can continue to use “Ok” as a Class in Classification Projects.
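Both Class-name rules in these notes (no “Ok” in Object Detection or Segmentation Projects, and, from the May 24, 2023 release, no two Classes whose names differ only in capitalization) come down to a case-insensitive comparison. This Python sketch is hypothetical validation logic, not LandingLens's implementation; it uses `str.casefold()` for the comparison.

```python
RESERVED_NAMES = {"ok"}  # compared case-insensitively, so "OK", "Ok", and "oK" all match
RESTRICTED_PROJECT_TYPES = {"Object Detection", "Segmentation"}

def validate_class_name(name: str, project_type: str, existing: list[str]) -> None:
    """Raise ValueError if `name` can't be added as a new Class (hypothetical rules)."""
    key = name.casefold()
    if project_type in RESTRICTED_PROJECT_TYPES and key in RESERVED_NAMES:
        raise ValueError(f'"{name}" is not allowed as a Class name in {project_type} projects')
    if any(key == other.casefold() for other in existing):
        raise ValueError(f'A Class named "{name}" already exists (names are case-insensitive)')

def is_valid(name: str, project_type: str, existing: list[str]) -> bool:
    """Convenience wrapper that returns True/False instead of raising."""
    try:
        validate_class_name(name, project_type, existing)
    except ValueError:
        return False
    return True

print(is_valid("OK", "Segmentation", []))                    # False
print(is_valid("Ok", "Classification", []))                  # True
print(is_valid("scratch", "Object Detection", ["Scratch"]))  # False
```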

February 22, 2023

Announcements

- We now offer multiple pricing plans for LandingLens! There is something for teams of all sizes. Whether you’re an AI hobbyist or a manufacturer that needs an AI solution to provide QA on your production line, we have a plan for you. Check out these new plans on our Pricing page.

- We’ve launched LandingPad, a community for LandingAI users. Looking for tips or insights about using LandingLens? Interested in learning more about specific use cases? Then check out LandingPad!

LandingPad

What’s New

- You can now upgrade your pricing plan directly in LandingLens! Not sure what you need or want to discuss your use case? You can still contact our Sales team at any time.

To change your plan, click the User Menu in the top right corner, and select Plan and Billing. Then click Change Plan.

Change Plan

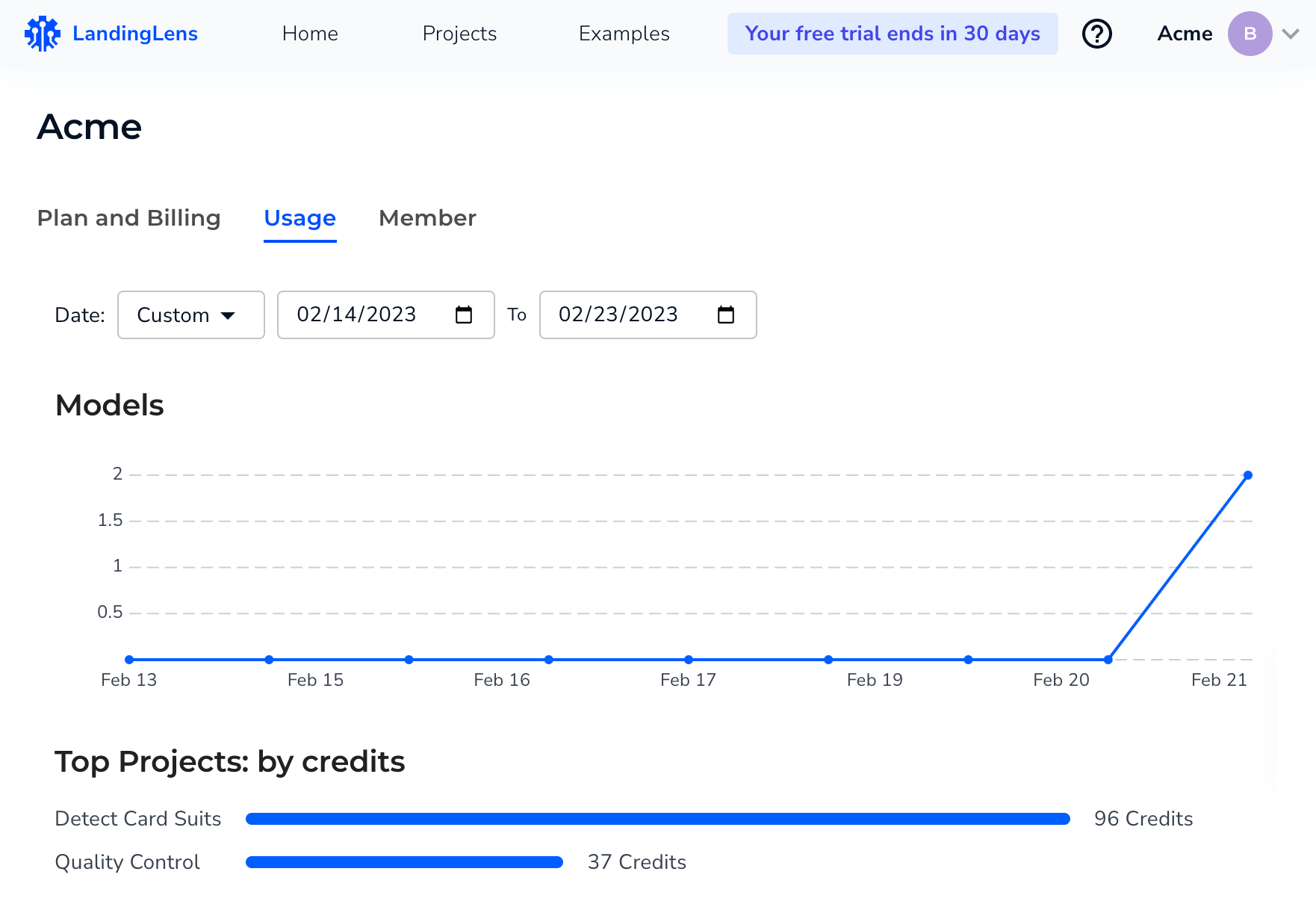

- You can now track credit usage metrics in the new Usage dashboard. To view the dashboard, click the User Menu in the top right corner, and select Usage.

Usage

- You can now upload sample images to a Project directly from LandingLens. To do this, create a new Project and click Load Sample Data.

Load Sample Data

Bug Fixes

- You can now see the total number of filtered images.

- We fixed a bug that hid images when switching from Instance View to Image View.

February 12, 2023

We've Got a New Look

Today we released several new features and changes to the look and feel of LandingLens.

- Login page: You may have noticed we spruced up the login page with a new design.

Login Page

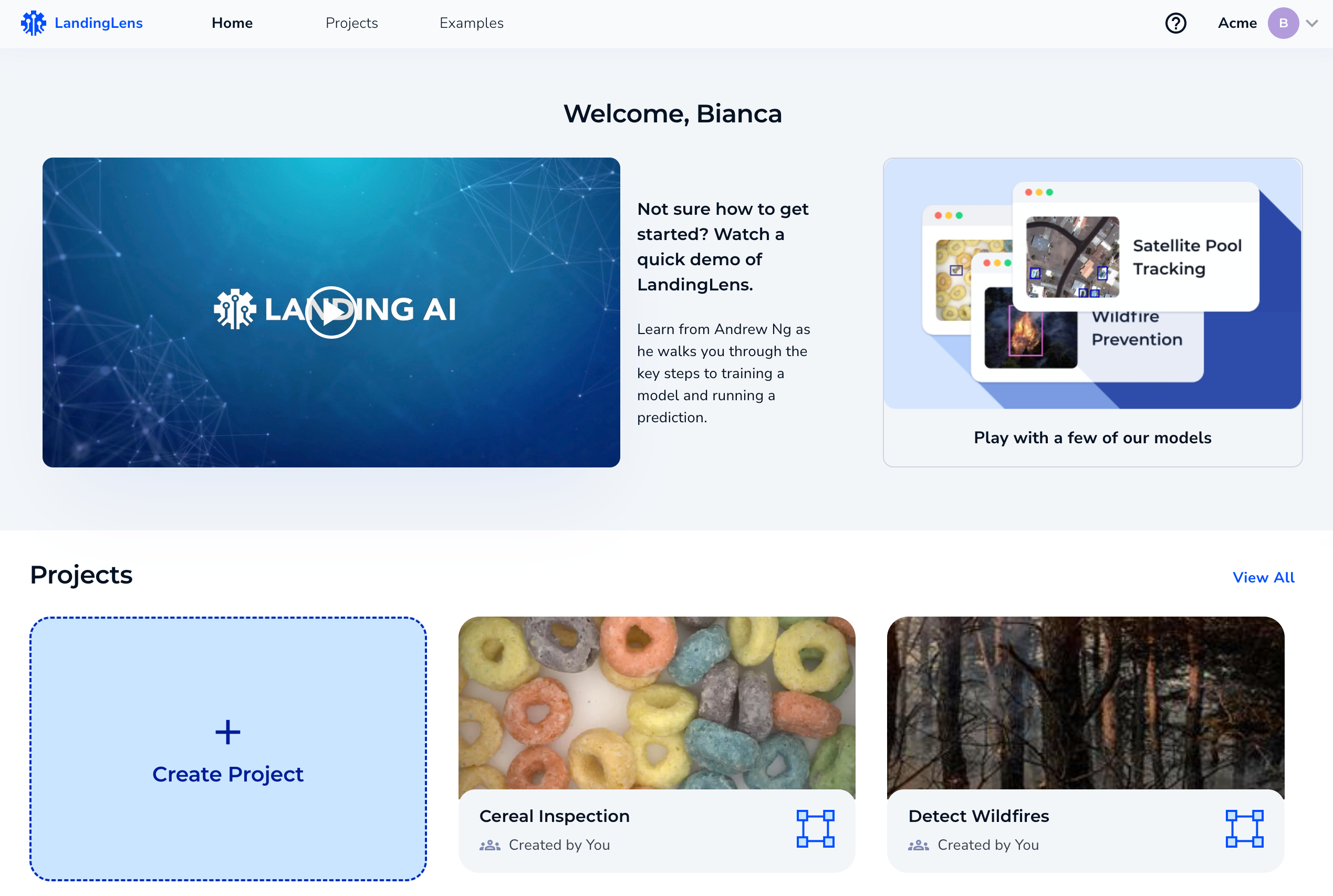

- Home page: The new home page is now the core page of LandingLens, where we provide organization information, important updates, and access to your most recent projects.

Home Page

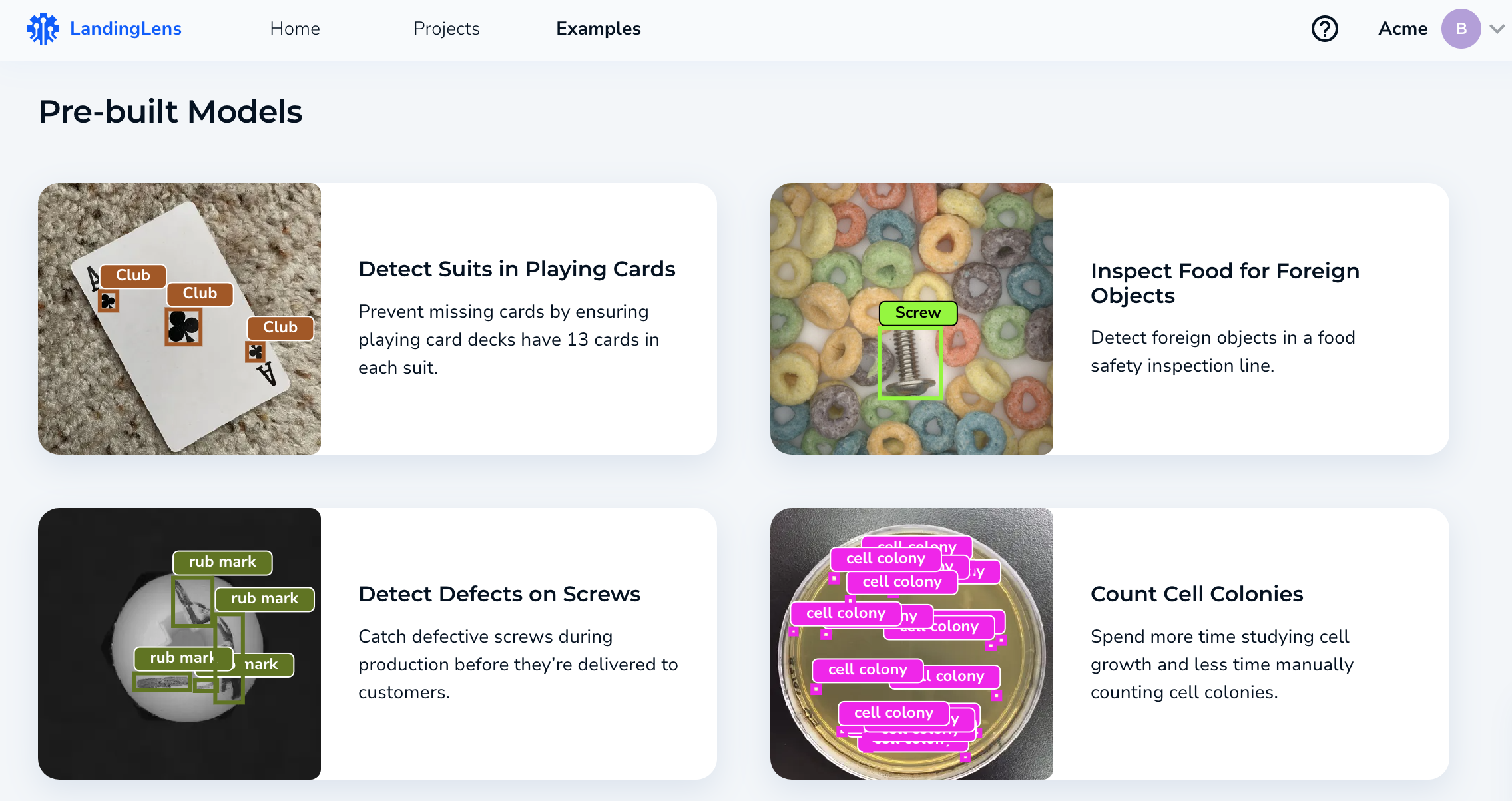

- Pre-trained examples: We have included some examples of how LandingLens can be used. Go to Examples, and check out some of our pre-trained models!

Examples

Deployment Page

We've got a new look and experience for the Deploy page. We have improved the flow and experience of the Deploy page by adding easier navigation across your various devices (now called "endpoints") and access to the historical data associated with each endpoint. For more information, go to Cloud Deployment.

Deploy Page

Here are some highlights:

- Endpoints (previously known as Devices) can be found under Cloud Deployment in the navigation bar on the left. You can deploy a trained Model with a fixed Confidence Threshold to each endpoint. You can update your Model and Confidence Threshold by selecting Update Deployment.

- The Predict tool allows you to quickly drag and drop an image to run inference and test your Model.

- Images sent to the endpoint through the API integration will show up under Historical Data, just like before.

- You can find your API Key and API Secret conveniently located in the navigation bar on the left.
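In general, a confidence threshold filters out predictions whose confidence score falls below it. The following Python sketch illustrates that filtering on hypothetical prediction objects; the `Prediction` class and `apply_threshold` function are made up for illustration, and whether a score exactly equal to the threshold is kept (`>=` here) is an assumption.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    label: str
    score: float  # model confidence, 0.0 to 1.0

def apply_threshold(predictions: list[Prediction], threshold: float) -> list[Prediction]:
    """Keep only predictions whose confidence meets the endpoint's threshold."""
    return [p for p in predictions if p.score >= threshold]

raw = [Prediction("scratch", 0.92), Prediction("dent", 0.41), Prediction("scratch", 0.67)]
kept = apply_threshold(raw, threshold=0.5)
print([p.label for p in kept])  # ['scratch', 'scratch']
```

Because the threshold is fixed per deployment, changing it means updating the deployment rather than changing a per-request setting.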

January 20, 2023

What's New

We've upgraded how default training works for Classification models to improve the performance of these models.

Bug Fixes

Previously, when a model training job failed, an error would display, and users could not retrain the model. We fixed this so users can retrain models.

January 17, 2023

Announcement

The Support Center URL changed from https://support-landingai.document360.io/ to https://support.landing.ai/.

What's New

- LandingLens now displays a workflow assistant to guide you through creating your first Object Detection Project. Hover over the workflow assistant to read more information about your current step.

Refer to the Workflow Assistant for Help

- LandingLens introduces a new feature called "Predict". Predict allows you to quickly upload new images to your Model so that the Model can make predictions on those images. Predict streamlines the Run Live feature so you can deploy Models faster. Note: "Predict" is currently only available for trained Object Detection Models.

Drag and Drop Images for Your Model to Make Predictions

Bug Fixes

Fixed: Users can resume exporting training datasets.
