- 28 May 2024


Python Tutorial: Detect Suits in Playing Cards


LandingAI offers code samples in Python and JavaScript libraries to help you learn how to effectively deploy computer vision models you’ve built in LandingLens.

This tutorial explains how to use the Detect Suits in Poker Cards example in the Python library to run an application that detects suits in playing cards. In this tutorial, you will use a web camera to take images of playing cards. An Object Detection model developed in LandingLens (and hosted by LandingAI) will then run inference on your images.

This example runs in a Jupyter Notebook, but you can use any application that is compatible with Jupyter Notebooks.

Requirements

Step 1: Clone the Repository

Clone the LandingAI Python repository from GitHub to your computer. The repository contains the webcam-collab-notebook Jupyter Notebook that you will open in the next step.

To clone the repository, run the following command:

git clone https://github.com/landing-ai/landingai-python.git

Step 2: Open the Jupyter Notebook

Open Jupyter Notebook by running this command in your terminal:

jupyter notebook

In Jupyter Notebook, open the landingai-python repository you cloned.

Navigate to and open this file: landingai-python/examples/webcam-collab-notebook/webcam-collab-notebook.ipynb.

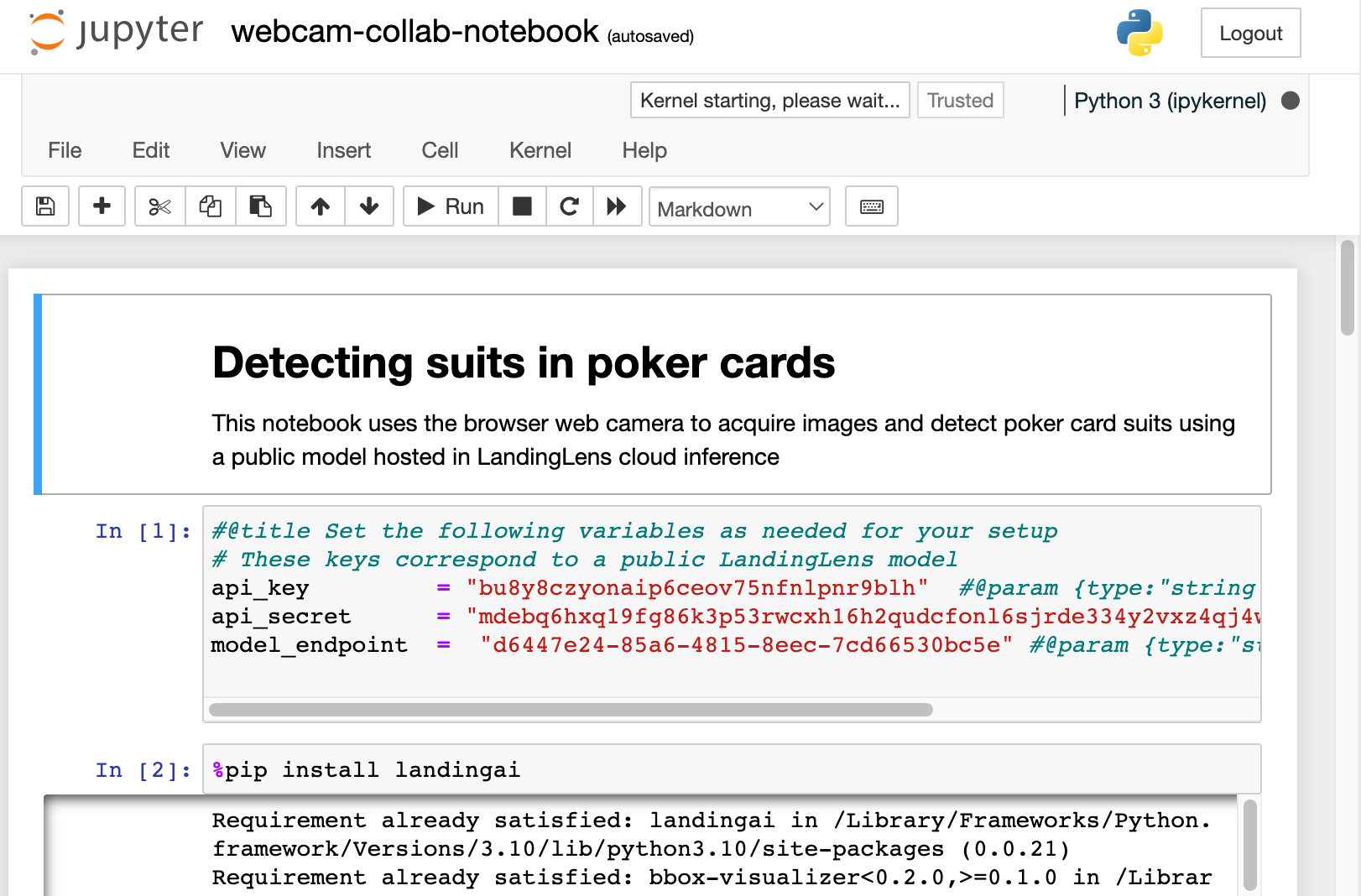

Jupyter Notebook File for the Example


The notebook opens in a new tab or window.

Opened Example File

Step 3: Start Running the Application

The notebook consists of a series of code cells. Run the code cells one at a time, in order.

While a code cell is running, an asterisk (*) displays in the brackets next to it. When the cell finishes, the asterisk is replaced by a number.

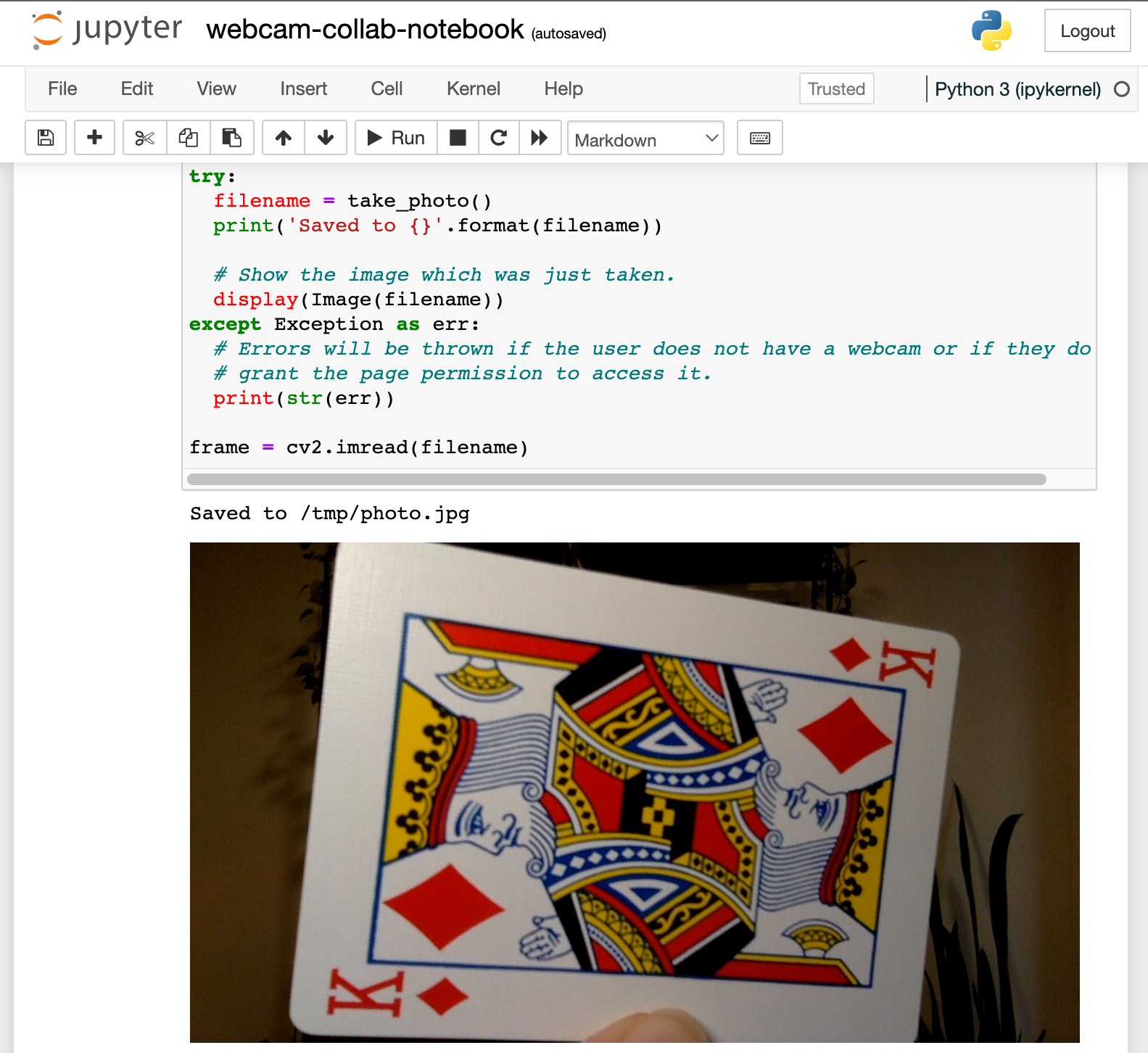

Step 4: Take a Photo with Your Webcam

When you run the Acquire Image from Camera code cell, the application turns on your webcam. Hold a playing card up to the webcam and press the Spacebar to take a photo.

Your webcam turns off, and the image displays below the code cell.

Your Photo Displays Below the Code Cell
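The Acquire Image from Camera cell follows a common pattern: keep reading frames until a key is pressed, then keep the current frame. The sketch below shows that loop in minimal form. It is hypothetical and is not the notebook's actual code. The function name `capture_on_spacebar` and its `read_frame` and `wait_key` parameters are illustrative. The camera and keyboard are passed in as functions, so the logic can run without a webcam.

```python
from typing import Any, Callable, Optional

SPACEBAR = 32  # key code of the space character


def capture_on_spacebar(
    read_frame: Callable[[], Optional[Any]],
    wait_key: Callable[[], int],
    max_frames: int = 10_000,
) -> Optional[Any]:
    """Hypothetical capture loop: read frames until Spacebar is pressed.

    read_frame: returns the next camera frame, or None if the camera fails.
    wait_key: returns the code of the key pressed since the last frame.
    Returns the frame that was current when Spacebar was pressed, or None.
    """
    for _ in range(max_frames):
        frame = read_frame()
        if frame is None:  # camera stopped delivering frames
            return None
        if wait_key() == SPACEBAR:  # Spacebar pressed: keep this frame
            return frame
    return None  # no capture within max_frames
```

To connect this sketch to a real webcam with OpenCV, `read_frame` could call `cap.read()` on a `cv2.VideoCapture(0)` object, display the frame with `cv2.imshow`, and return the frame (or None when the read fails). `wait_key` could be `lambda: cv2.waitKey(1) & 0xFF`. OpenCV is an assumption here; the notebook may use a different library.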

Step 5: Run the Model and See the Predictions

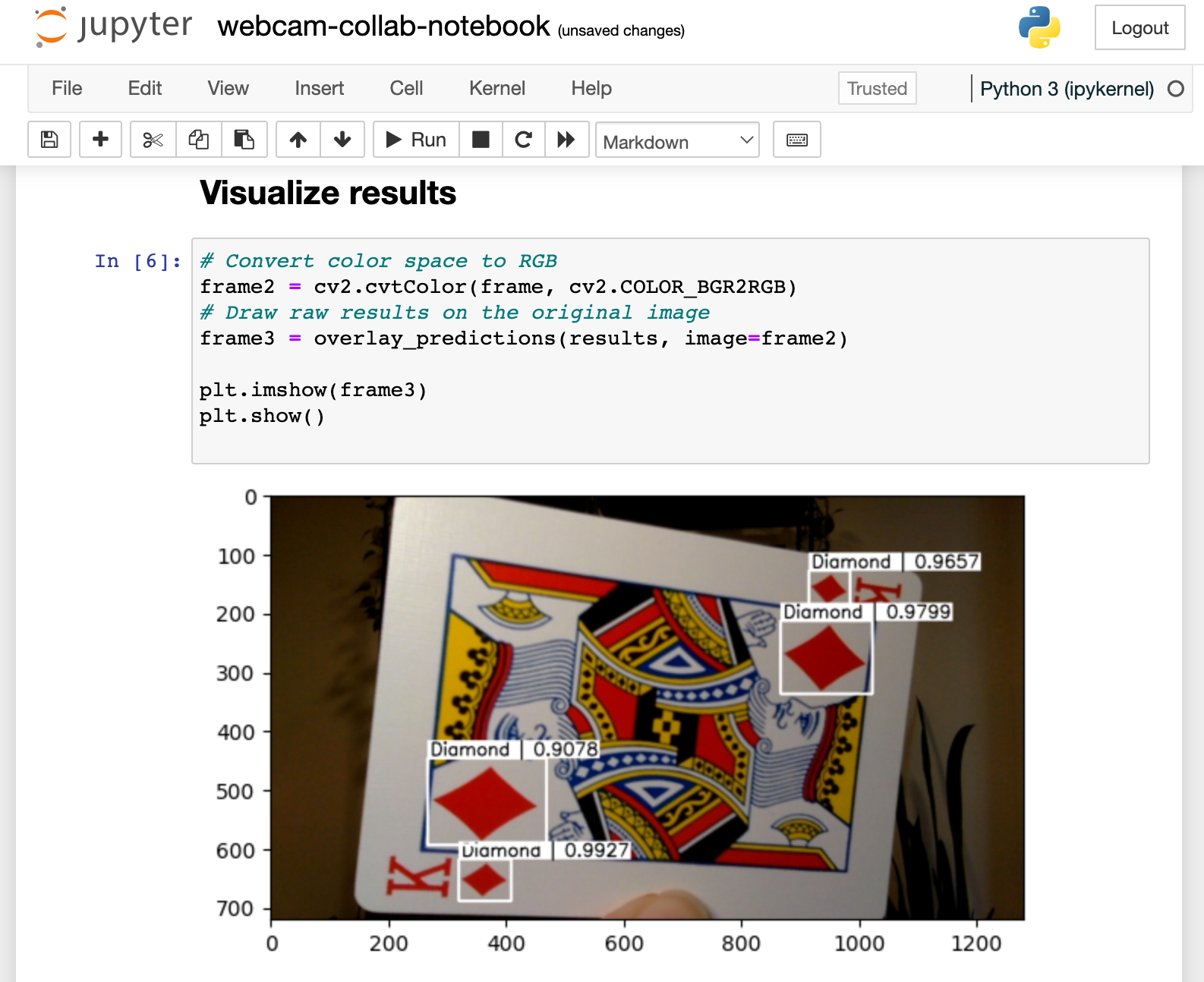

Continue running the code cells.

When you run the Run the Object Detection Model on LandingLens Cloud code cell, your image is sent to the model hosted in LandingLens, which runs inference on it.
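The `landingai` Python package (installed with `pip install landingai`) runs cloud inference through its `Predictor` class. The sketch below shows roughly what such a cell can look like. It is not the notebook's exact code. The wrapper name `run_inference` is hypothetical, and you need your own endpoint ID and API key from LandingLens.

```python
from typing import Any, List


def run_inference(image_path: str, endpoint_id: str, api_key: str) -> List[Any]:
    """Send one image to a LandingLens-hosted model and return its predictions.

    Requires `pip install landingai`; endpoint_id and api_key come from
    your LandingLens project.
    """
    # Imported inside the function so this file loads without the packages.
    from landingai.predict import Predictor
    from PIL import Image  # Pillow

    image = Image.open(image_path)
    predictor = Predictor(endpoint_id, api_key=api_key)
    return predictor.predict(image)
```

For example, a call such as `run_inference("card.jpg", "<your-endpoint-id>", "<your-api-key>")` returns a list of prediction objects. The file name and placeholders are yours to fill in.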

Then run the Visualize Results code cell. An image displays below the cell: your original image with the LandingLens model's predictions overlaid on top. Each prediction includes a bounding box, the name (Class) of the detected object, and the Confidence Score of the prediction.
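Each prediction combines the three pieces of information described above. The dataclass below is a hypothetical stand-in for illustration only; the `landingai` package has its own prediction classes. It shows how a bounding box, a class name, and a confidence score could be combined into the kind of label drawn on the image. The `(xmin, ymin, xmax, ymax)` box layout is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Detection:
    """Hypothetical stand-in for one object-detection prediction."""

    label_name: str  # the Class, e.g. "Diamond"
    score: float  # Confidence Score between 0.0 and 1.0
    bbox: Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in pixels

    @property
    def width(self) -> int:
        return self.bbox[2] - self.bbox[0]

    @property
    def height(self) -> int:
        return self.bbox[3] - self.bbox[1]

    def caption(self) -> str:
        """Text to draw next to the box, such as 'Diamond 0.97'."""
        return f"{self.label_name} {self.score:.2f}"
```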

For example, in the screenshot below, the model identified that the card has several Diamonds.

The Model Detected the Suits in the Image

Step 6: Count How Many Objects Were Detected

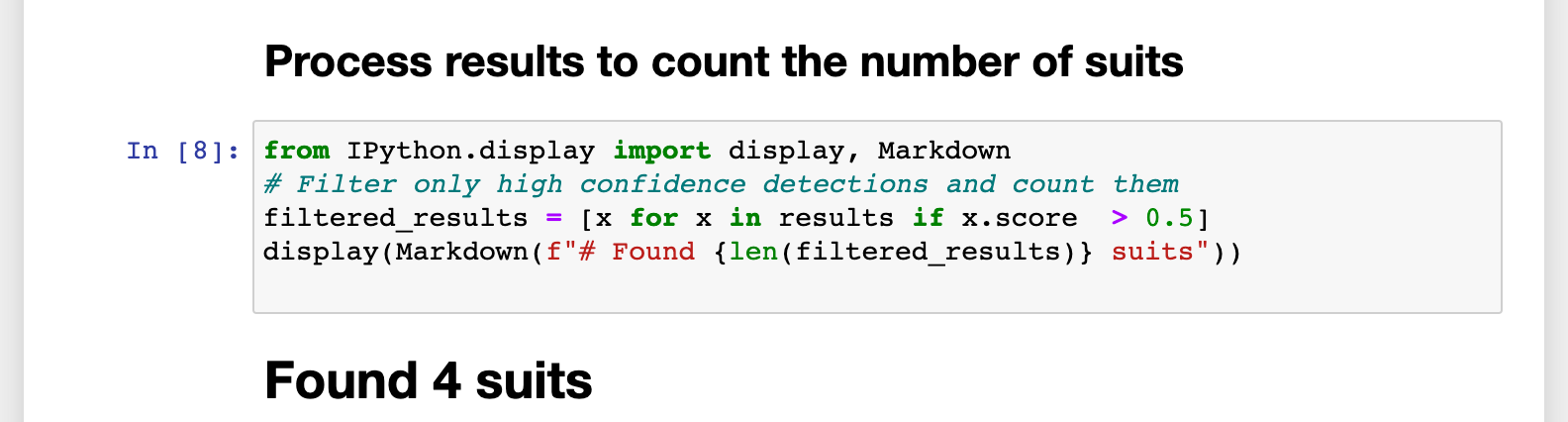

Run the next code cell, Process Results to Count the Number of Suits. This cell counts the detected objects that have a confidence score higher than 50% and displays the count below the cell.
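The counting step amounts to filtering predictions by confidence and tallying class names. Below is a minimal sketch of that logic. It assumes each prediction exposes `label_name` and `score` attributes, as the `landingai` package's prediction objects appear to. The `Pred` tuple and the `count_suits` function are hypothetical names for illustration, not the notebook's code.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Pred(NamedTuple):
    """Hypothetical minimal prediction: class name plus confidence score."""

    label_name: str
    score: float


def count_suits(predictions: Iterable, threshold: float = 0.5) -> Counter:
    """Count detections per class whose confidence is strictly above threshold."""
    return Counter(p.label_name for p in predictions if p.score > threshold)


preds = [Pred("Diamond", 0.97), Pred("Diamond", 0.91), Pred("Diamond", 0.50), Pred("Club", 0.20)]
print(count_suits(preds))  # Counter({'Diamond': 2})
```

The detection at exactly 0.50 is excluded because the rule is "higher than 50%". Raising or lowering `threshold` trades missed suits against false detections.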

For example, in the screenshot below, the application counted four Diamonds that met the threshold criteria.

The App Detected Four Objects (Suits)

Step 7: Make Your Own Application

Congrats! You have now successfully run a LandingLens model and made predictions! You can now customize the code cells to create your own application!
