- 20 Dec 2022

Analyze Models

Models Report

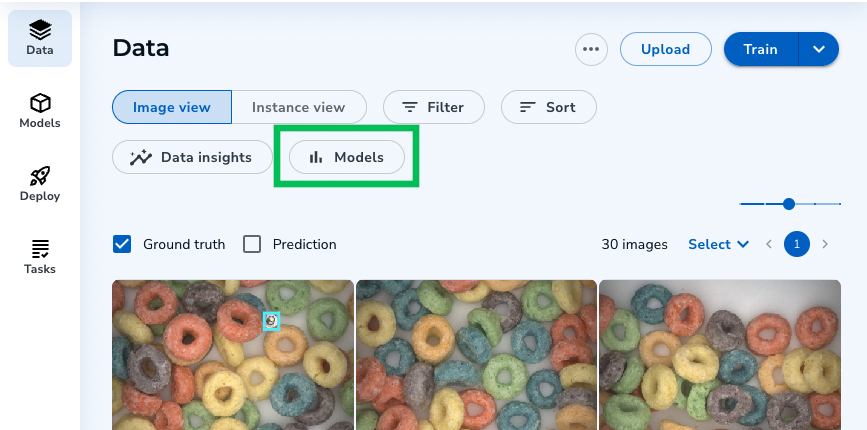

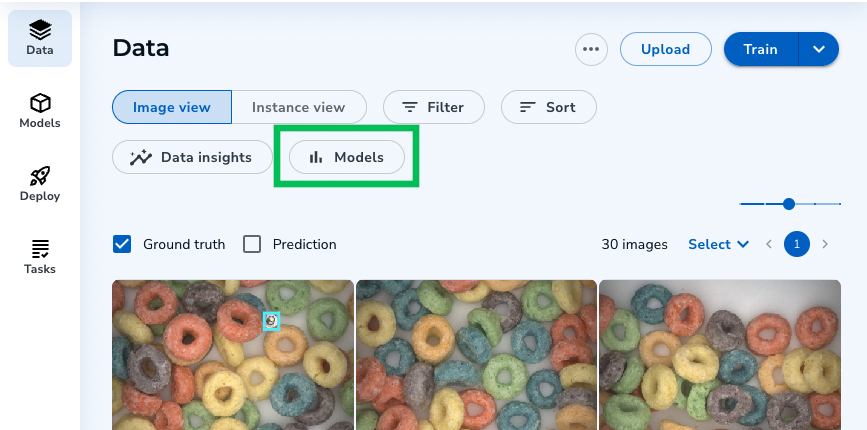

LandingLens offers a report that allows you to dive deeper into the metrics to see how well your Model performed. This report is only available after you have trained a Model. To view the Models report:

- Open the Project you want to generate a report for.

- Click Models.

The Models report has three sections: Performance, Confidence Threshold, and Results.

Performance

The Performance section helps you understand how well your Model is performing by showing two metrics: Precision and Recall.

- Precision: The percentage of correct (or "precise") Predictions out of the total number of Predictions made. For example, if an image has 9 cats and the Model detects 10 objects (all 9 cats plus one incorrect detection), the Precision is 9 / 10 = 90%.

- Recall: The percentage of actual objects (your Ground Truth labels) that the Model correctly predicted. For example, if an image has 10 cats and the Model correctly detects 8 of them, the Recall is 8 / 10 = 80%.
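Both examples reduce to arithmetic on counts of True Positives (TP), False Positives (FP), and False Negatives (FN), which are described in the Confidence Threshold section. The minimal sketch below is illustrative only: the function names are hypothetical, not part of LandingLens, and the sketch ignores Misclassified predictions.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of the Model's predictions that were correct: TP / (TP + FP)."""
    predicted = true_positives + false_positives
    return true_positives / predicted if predicted else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of the actual (Ground Truth) objects the Model found: TP / (TP + FN)."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


# Precision example: 9 cats, Model detects 10 objects (9 cats + 1 wrong) -> 9 TP, 1 FP.
print(precision(true_positives=9, false_positives=1))  # 0.9

# Recall example: 10 cats, Model correctly detects 8 -> 8 TP, 2 FN.
print(recall(true_positives=8, false_negatives=2))  # 0.8
```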

Confidence Threshold

The Model outputs a Confidence Score for each prediction, which indicates how confident the AI is that its prediction is correct. The Confidence Threshold section lets you view how changing the Confidence Threshold affects your Model's Precision and Recall (displayed in the Performance section). Use the Confidence Score as a guide when iterating on your Models.

By default, LandingLens displays the Confidence Threshold with the best F1 score for all labeled data.
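The relationship between the Confidence Threshold, Precision, Recall, and the F1 score can be sketched as follows. This sketch assumes, as is common for detection models, that predictions with a Confidence Score below the threshold are discarded, and it uses the standard F1 definition (the harmonic mean of Precision and Recall). The `Prediction` class, the helper functions, and the sample scores are all hypothetical; LandingLens computes these values internally and may match predictions to labels differently.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    confidence: float  # Confidence Score the Model output for this prediction
    correct: bool      # True if it matches a Ground Truth label (hypothetical flag)


def metrics_at(threshold, predictions, num_ground_truth):
    """Return (precision, recall, f1), keeping only predictions at or above threshold."""
    kept = [p for p in predictions if p.confidence >= threshold]
    tp = sum(p.correct for p in kept)  # kept predictions that are correct
    fp = len(kept) - tp                # kept predictions that are wrong
    fn = num_ground_truth - tp         # labeled objects the kept predictions missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def best_threshold(predictions, num_ground_truth):
    """Try each distinct Confidence Score as the threshold; return the one with the best F1."""
    candidates = sorted({p.confidence for p in predictions})
    return max(candidates, key=lambda t: metrics_at(t, predictions, num_ground_truth)[2])


# Hypothetical predictions for a dataset with 5 labeled objects.
preds = [
    Prediction(0.95, True),
    Prediction(0.90, True),
    Prediction(0.80, False),
    Prediction(0.70, True),
    Prediction(0.40, False),
    Prediction(0.30, False),
]

for t in (0.3, 0.7, 0.9):
    p, r, f1 = metrics_at(t, preds, num_ground_truth=5)
    print(f"threshold={t}: precision={p:.2f} recall={r:.2f} F1={f1:.2f}")

print(best_threshold(preds, num_ground_truth=5))  # 0.7
```

With these made-up scores, raising the threshold from 0.3 to 0.9 increases Precision from 0.50 to 1.00 but lowers Recall from 0.60 to 0.40, and a threshold of 0.7 gives the best F1 (about 0.67).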

To change the Confidence Threshold, enter a value between 0 and 1 in the field provided. The value is saved automatically.

The Confidence Threshold section also includes a graph that sorts the predictions into outcome categories. To see this graph, click the downward-facing arrow to expand the section.

The Confidence Threshold graph displays the following information:

- True Negative (TN): The AI's prediction correctly ("true") found no objects ("negative" identification). For example, there was no screw, and the AI correctly predicted that there was no screw.

- True Positive (TP): The AI's prediction correctly ("true") identified an object ("positive" identification). For example, you labeled a screw, and the AI also labeled that screw.

- False Negative (FN): The AI's prediction incorrectly ("false") found no objects ("negative" identification). For example, you labeled a screw, but the AI predicted that there were no screws.

- False Positive (FP): The AI's prediction incorrectly ("false") found an object when there was not one ("positive" identification). For example, the AI predicted a screw, but there was no screw.

- Misclassified (MC): The AI predicted an object but selected the wrong Class. For example, the AI predicted a screw, but the object is a nail.
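The five categories amount to a decision on each pair of Ground Truth label and prediction. The sketch below is a simplification: it assumes each prediction has already been paired with at most one Ground Truth label (how LandingLens matches predictions to labels is not covered here), uses `None` to mean "no object", and the `outcome` function is hypothetical.

```python
from typing import Optional


def outcome(ground_truth: Optional[str], prediction: Optional[str]) -> str:
    """Classify one Ground Truth/prediction pair; None means "no object"."""
    if ground_truth is None and prediction is None:
        return "TN"  # nothing labeled, nothing predicted
    if ground_truth is None:
        return "FP"  # AI predicted an object that is not there
    if prediction is None:
        return "FN"  # AI missed a labeled object
    if ground_truth == prediction:
        return "TP"  # AI found the labeled object with the right Class
    return "MC"      # AI found the object but chose the wrong Class


examples = [
    (None, None),        # no screw, AI predicted no screw
    ("screw", "screw"),  # labeled screw, AI found it
    ("screw", None),     # labeled screw, AI predicted nothing
    (None, "screw"),     # no screw, AI predicted one
    ("nail", "screw"),   # object is a nail, AI predicted a screw
]
for gt, pred in examples:
    print(gt, pred, "->", outcome(gt, pred))
```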

Results

The Results section displays these categories:

- Correct Predictions are instances where the AI's prediction matches your Ground Truth labels. For example, you labeled a screw, and the AI found that screw.

- Incorrect Predictions are instances where the AI's prediction does not match your Ground Truth labels. For example, you labeled a screw, but the AI did not find it.

Each category includes the following metrics:

- Ground Truth represents the labels you or another Labeler applied to an image. In other words, Ground Truth refers to the "true" identified objects. (The Model refers to the Ground Truth when it learns how to detect objects.)

- Prediction is the Class the AI detected.

- Count is the number of instances (labels) that fall under that category. Click the number to filter by that category and view the relevant instances.
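The Results breakdown can be thought of as a tally of (Ground Truth, Prediction) pairs. The minimal sketch below uses made-up instances and a hypothetical `results_table` function to group each pair into Correct or Incorrect Predictions and count the instances in each row. It assumes predictions have already been matched to labels, with `None` standing for "no object".

```python
from collections import Counter

# Hypothetical (Ground Truth, Prediction) pairs; None means "no object".
pairs = [
    ("screw", "screw"),
    ("screw", "screw"),
    ("nail", "nail"),
    ("screw", None),
    ("nail", "screw"),
]


def results_table(pairs):
    """Count instances per (Ground Truth, Prediction) row, split into Correct/Incorrect."""
    correct, incorrect = Counter(), Counter()
    for gt, pred in pairs:
        table = correct if gt == pred else incorrect
        table[(gt, pred)] += 1
    return correct, incorrect


correct, incorrect = results_table(pairs)
for title, table in (("Correct Predictions", correct), ("Incorrect Predictions", incorrect)):
    print(title)
    for (gt, pred), count in table.items():
        print(f"  Ground Truth={gt}  Prediction={pred}  Count={count}")
```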
